The AI-Rich and the AI-Poor

The two social classes of the incoming AI Reformation

I. Misreading AI’s state of affairs

Artist Reid Southen, popular on X for his relentless criticism of generative AI on behalf of anti-AI artists, captioned the above collage with a dooming message:

Guys, they’re desperate. AI companies are starting to hike prices to offset their losses. Traditionally, this is only done after you’ve cornered the market. They’re so cooked.

His reading comes across as a reasonable one for three reasons.

The generative AI market isn’t yet mature. Startups remain immersed in fierce competition for funding, talent, and pioneer-minded clients. They fight to sell tokens (i.e. generated words) at a lower cost, pushing prices monotonically down, which doesn’t benefit them except as a form of dunk-based public relations.

Companies enjoy no tangible moat—coming-and-going employees control the knowledge supply and Nvidia monopolistically controls the hardware supply—except being the protegee of a tech giant. But that’s no differentiator when most startups are backed by either Microsoft, Google, Nvidia, Amazon, Apple, or some venture capital firm—sometimes several of them at once.

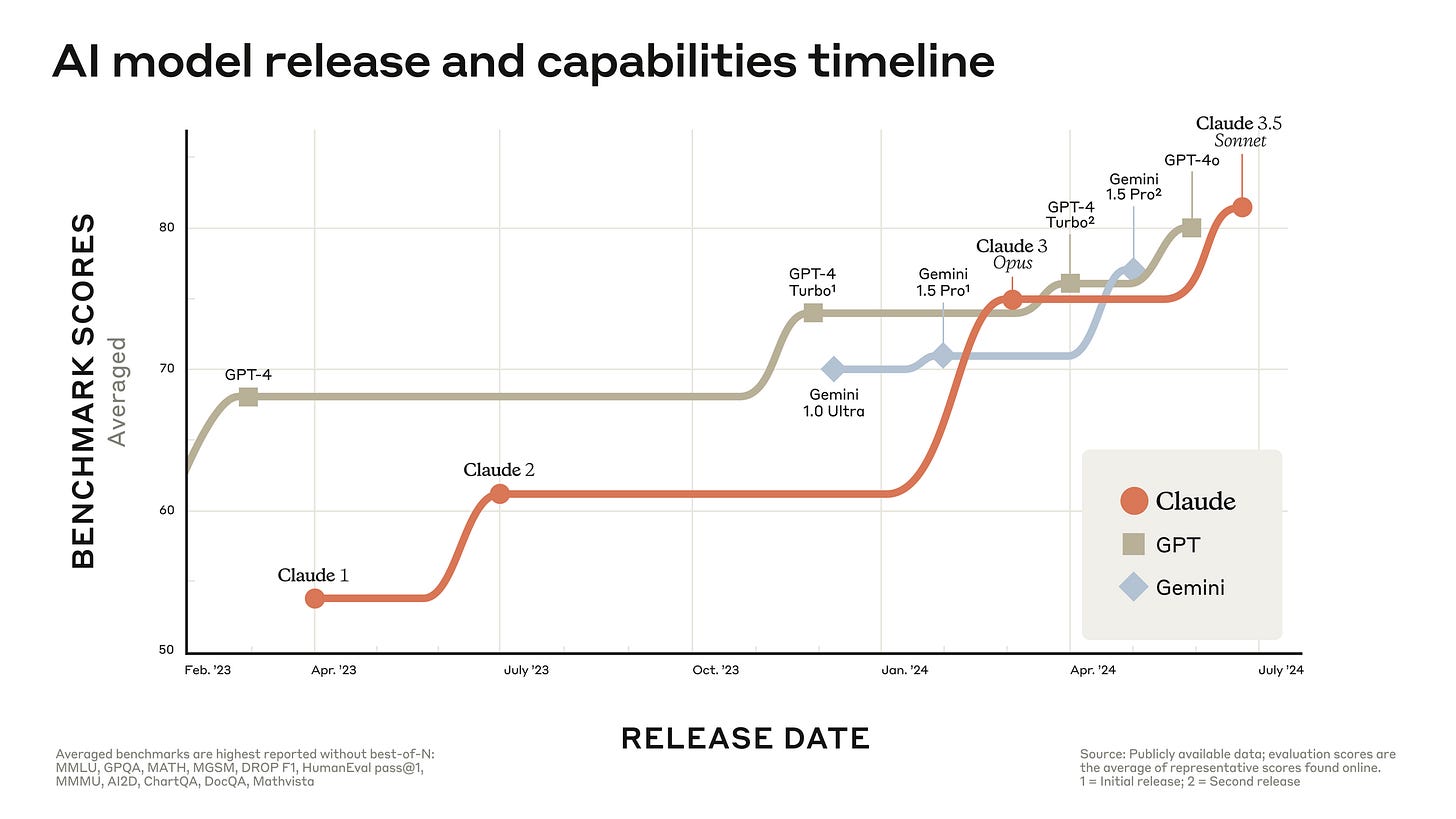

There’s no monopoly on the software side. AI models improve so fast that no one has managed to “corner the market”. Not even OpenAI, owner of the ChatGPT brand. What seemed in early 2023 like a definitive technical edge has turned, a year later, into the compelling life-size reflection of its rivals in the rearview mirror.

Even the startups that managed to capture a substantial share, like OpenAI and to a lesser degree Anthropic, are far from profitable. At 200 million weekly active users (11 million paid), ChatGPT, the flagship of the generative AI fleet, is not providing OpenAI with enough revenue to cover the capital expenditures (e.g. buying Nvidia GPUs) and the operational expenditures (e.g. running the chatbot itself on Microsoft Azure Cloud). Anthropic isn’t better off.

When your numbers are in the red, it makes sense to raise your prices if you believe the demand for your products can absorb the boost. It may appear “desperate” as Southen says, but it’s sensible nonetheless. I bet paid ChatGPT users would be willing to throw twice as much cash into the app that lays golden tokens. I would.

But 10x? 100x!? Those are the numbers Southen is schadenfreuding about. Those are the numbers OpenAI is reportedly considering in private conversations: up to $2000/month subscriptions. Well, my friend, if 11 million paid users and some of the biggest patrons in the world aren’t enough to keep you competitive without hiking prices 100-fold, perhaps the tech you sell is simply unmarketable.

That is, at least, the conclusion we reach if we follow Southen’s seemingly reasonable reading: AI companies are “cooked” because they’re planning to greedily over-raise the prices of their products to cover costs.

But he’s wrong. Twice.

First, the numbers are off. OpenAI doesn’t need to increase its current revenue 100x to break even. Not 10x, nor even 5x.1

Here’s some napkin math. The Information reported that “OpenAI’s AI training and inference costs could reach $7 billion this year [and] staffing costs could go as high as $1.5 billion.” That’s $8.5 billion. They also reported that OpenAI revenue doubled to $3.4 billion in 2024 and updated the figure earlier this month to $4 billion. Both are unconfirmed but informed estimates; surely not off by an order of magnitude. Subtracting the $4 billion in revenue from the $8.5 billion in costs leaves a total of $4.5 billion in operating losses.

2-exing the revenue—easier said than done—should suffice.
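For anyone who wants to check the napkin math, here is a minimal sketch in Python. The figures are the reported estimates quoted above, in billions of dollars; the function name `break_even` is my own and purely illustrative, and the calculation assumes costs stay fixed while revenue grows.

```python
def break_even(costs_bn: float, revenue_bn: float) -> tuple[float, float]:
    """Return (operating loss, revenue multiple needed to break even).

    Assumes costs stay constant as revenue grows, which is a simplification.
    """
    loss = costs_bn - revenue_bn
    multiple = costs_bn / revenue_bn
    return loss, multiple


# Reported estimates, in billions of US dollars (unconfirmed figures).
compute_costs = 7.0   # training and inference
staffing_costs = 1.5  # staffing
revenue = 4.0         # updated annual revenue estimate

total_costs = compute_costs + staffing_costs
loss, multiple = break_even(total_costs, revenue)

print(f"Total costs: ${total_costs:.1f}B")
print(f"Operating loss: ${loss:.1f}B")
print(f"Revenue multiple to break even: {multiple:.3f}x")
```

Under those estimates the required multiple lands just above 2x, nowhere near 10x or 100x.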

Also raising money.

That’s OpenAI’s plan. The new funding round (up to $6.5 billion) could include Apple and Nvidia—together with Microsoft (OpenAI’s main benefactor and beneficiary) making the big tech trio—as well as the UAE. Paraphrasing Bruce Wayne, with the amount of money OpenAI would raise from its pals, it won’t need another cent.

So yeah, either by revenue numbers or by taking into account OpenAI’s short-term fundraising plans, the price hiking as a mechanism to offset losses doesn’t make sense. You can laugh off generative AI for being still unprofitable (despite the hype) but the hypothesis lacks explanatory power.

Up to this point, I’ve merely debunked a bad analysis of why OpenAI could want to raise prices. Is there a sensible hypothesis as to why they are doing it after all?

Yes. There’s one. The reason why Southen is doubly wrong: A super expensive pricing tier up to the four digits reveals that AI companies—OpenAI especially—are not “cooked” but cooking something. This story isn’t about them looking backward in fear of costs but forward in conviction of their vision.

Contrary to what Southen believes, it’s good news—for those who can afford it.

II. Good ol’ generative AI

When I wrote the first draft of this post, Strawberry was a rumor. Today, it’s the materialization of a new AI paradigm in the shape of the OpenAI o1 model series. I wrote a 7,000-word piece on it so I won’t go into any details here. I’ll just advance that the “something” companies were cooking is ready to be served and the hypothesis I intended to put forward, pre-confirmed.

But before I explain how o1, the first AI that can “reason”, alters the business equation, let’s get back to ChatGPT.

ChatGPT is expensive to train and run but not so much.2 It is also a primitive tool, like its equals. No one would pay $2000 a month to use GPT-4 or Claude or Gemini as they are today—chatbots that sometimes know facts, can’t solve simple riddles half of the time, and make inhumanly stupid mistakes on tasks kids pass. They’re useful if you learn to navigate their flaws and the constant hyperbole coming from their creators, but they’re incomplete, imperfect, immature tech.

So what’s the relationship between chatbots and “reasoners”?3 Chatbots are the first phase of a long journey to general AI (AGI, also called human-level AI). Whether you believe AGI is possible or not, and whether you believe it will happen soon or not, companies are certainly making strides toward that goal. As they move, the terrain they walk through changes and so does the landscape: language, reason, agency, invention... It turns out that OpenAI changed biomes last week with o1—from basic generative AI to reasoning AI.

OpenAI o1 is not perfect—often stumbling where its predecessors also failed—but it shouldn’t be disregarded on the basis of its current limitations. The change, rather than of utility, is theoretical.4 Unlike ChatGPT, the o1 model takes time to answer. Because it “thinks”. It’s not a chatbot. At least not just a chatbot. Its existence inaugurates what they call the “reasoning paradigm.”5 It generates stuff but its value lies in what it doesn’t generate.6

OpenAI o1 transcends the generative AI label. It transcends ChatGPT.

We have to reformulate our entire mental model of what AI is and what it can do. It will be hard to get this point across to the general populace because the AI community largely failed to make clear the differences between generative AI and the broader field.7 We’re now burdened by an unnecessary synonymization. Many people have this simplified view that goes: AI = generative AI = ChatGPT. The establishment of a perception-breaking paradigm will come as a surprise to most.8

It’s weird to say it like this after two such crazy years, but generative AI is, from now on, not the state of the art. Our heuristics, predictions, and happy ideas about the present and future of AI are suddenly outdated, including the commonly accepted but wrong presumption that these tools will always be affordable.

III. New product, new pricing

Shortsighted folks have interpreted OpenAI’s reported price hike as a desperate move to cover costs because they’re applying a standard framing to scientific discovery. That’s why Reid Southen read the news wrong in the first place: It has nothing to do with existing products or existing paradigms.

Just to be clear, ChatGPT (the GPT-4-based tool you’ve been using for the past two years) will still get cheaper over time, not more expensive. That’s been the trend and will continue to be. As infrastructure and post-training are optimized, operating expenditures fall, allowing companies to reduce the price-per-word to ~zero.9

But o1 is a different beast. It may solve a problem no one has solved before, like how to decrease the net amount of entropy in the universe. Just kidding. But perhaps something easier, just like Google DeepMind’s AlphaFold has been doing. And if not o1, a successor built on the same paradigm. That’s more costly but also more valuable. That’s what matters to OpenAI’s pricing division. That’s what Sam Altman—and soon also Dario Amodei and Demis Hassabis—will intend to sell, if we’re unlucky, as four-digit subscription services (or, if we are lucky, as three-digit subscription services).10

Getting into specifics, here’s my take on why OpenAI may have mentioned a price as high as $2000/month (which I don’t believe will be imposed on everyone): The pricing tiers will be defined according to the time users want the model to spend on each problem. It could be that simple. Do you want five minutes because you’re debugging a decently sized program? That’s $50/month (or perhaps its pay-as-you-go equivalent). Say you’re a geneticist trying to figure out some obscure gene-disease links and need half an hour for a particularly complex case. That’d be $300/month. Then up to $1000 and $2000 a month for the most challenging tasks.1112

The details are still in the air but the hints are already engraved into the ground. This—a new paradigm, a new product, a new pricing—is the right reading.

IV. Stuck in the past tense

Besides a faulty superficial analysis of the business and progress of AI, Reid Southen made a worse mistake. Let it be the cautionary tale I want to share in this section.

He, and his peers, bought the belief that AI wouldn’t get very far.13 Whether for legal reasons, financial droughts, or technical hurdles, they never expected OpenAI to achieve something like o1, or ChatGPT for that matter. They’ve spent these years so deep in their dismissive attitude that they can no longer see beyond their criticism. If you’re like them, let me tell you that it will happen again. And then again. Correct course.

Yes, existing AI tools have shortcomings, but judging technology from a static snapshot doesn’t end well. I hate to admit this but, in a way, the second-order effects of technology redeem even the most annoying of hypers. Cars began as “horseless carriages” and now the world’s intercity infrastructure is mostly highways and transport logistics revolves around their needs and possibilities. Scribes tried to protect their craft, their trade, their sustenance. But where would you be without the printing press? Surely not reading this.

Here’s a more recent example. Ted Chiang wants to believe AI art isn’t art. Eventually, however, we’ll come to see AI as a tool, just as happened with editing software, and computers before that, and, going further back, with photography. No matter how many clever arguments he may come up with to draw an invisible but reassuring line between these tools and AI, or how many essays he writes to deny this unbearable future: he’s helpless. Even if we fight today, the next generation will gratefully accept what they’ve been given. They will naturally forget that we ever cared.

The anti-AI crowd conflates—not referring to Chiang here—the somewhat shady practices of AI startups with an inherent limitation of the technology. “Why do they hype it so much if it works?” Good damn question! But let me tell you, the lack of a satisfactory answer isn’t proof that the regrettable behavior of tech CEOs reveals some ugly truth about AI’s value.

The unconditional skeptics like Southen, dressed as they are in their self-righteousness, will feel, without notice, that the ground under their feet is moving forward, leaving them stuck in the past.

“Generative AI isn’t this, generative AI isn’t that”—no, guys, generative AI was.

Artificial entities that talk, reason, and act in the world appeared impossible to them. Well, the seeds are planted. Now, with mud on their boots from trying to unearth a new excuse to back up their incessant irreverence, and a blindfold that prevents them from knowing where they should be digging, these people can only stand still, trapped in their own fictions and babbling nonsense about stalks that are already sprouting, without waiting or delay.

But I still don’t blame them. So many grifters have tried to get ahead of this AI bandwagon while it wasn’t even moving yet; their attempts at quickly cashing out made us get tired at the mere mention of those labels—AI reasoning, AI agents, AI assistants—despite them still being sci-fi.

AutoGPT, the first Devin version, the recently unveiled Sakana AI scientist, or the open source model Reflection-70B are all very clumsy attempts at solving a problem that required tech that didn’t exist (and even the yet-unreleased o1 will be a prototype more than a definitive tool). You can’t just fine-tune ChatGPT with a cute wrapper and call it a day. They know it, they always did, but that’s the best they could offer. They didn’t hesitate to do a disservice to the field in exchange for some selfish marketing gains that never materialized.

To do it right they needed more. More talent. More money. More ambition. More conviction. The few AI companies that meet those requirements—OpenAI, Google DeepMind, and Anthropic—brought us here. First to generative AI, then to reasoning AI. They are the ones. The leaders of the AI Reformation.

V. The AI Reformation

This is getting long so let’s do a quick recap for those of you who are still with me.

AI companies want to raise prices for their products.

The reason isn’t to cover the costs of existing products but to support new ones—more capable but also more expensive.

They’re the offspring of a new AI paradigm, the reasoning paradigm.

Those who insisted AI would never get this far will remain stuck in the past, unable to foresee or adapt to the future.

In a way, the generative AI era is over.14

But before the next era ensues the field will go through a double-edged reform.

This sword-shaped process will cut through the bullshit of a technical branch whose burden—in name and consequence—the broader field never deserved to bear. The generative facet was a somewhat necessary intermediate stop but never was it the final goal nor a desirable one. Merely, and I say this gleefully, a deviant enterprise soon to be surpassed by greater efforts. The reforming blade is thus intended for generative AI as itself the object of the cutting—no longer the leading paradigm—and as the regrettable source of an unwarranted contempt toward AI at large: It’ll slash both the encrusted skepticism and the unjustified hype. And I’m here for it.

But the reform is double-edged. The other blade is for us. For you and me and the vast majority: peasants of the new era.

It will cut through our pockets. Deep and clean. Because that kind of technology is not a chatbot—not as faulty, as simplistic, or as cheap. I’ve been throwing around the $2000/month number like it’s nothing but the implications are huge, even if it ends up being, say, $50 or $100 a month. That’s why Sam Altman is posing his dilemma as a question: How much are you willing to pay for a tool that can do this? Will you pay for this awesome new thing 100x what you paid for the old thing? What about 10x?

I don’t feel addressed by those prices. And you may think, “No one will!” Wrong: As long as the outcome compensates the price, those who can afford it will pay. Or, as Noam Brown puts it, “what inference cost would we pay for a new cancer drug? Or for a proof of the Riemann Hypothesis?” Well, thousands. Tens of thousands. Hundreds of thousands of dollars.

The kind of money not everyone has to spare.

This interstitial phase I’m calling the AI Reformation will establish a radically different paradigm for AI—not just technical or business, but a social one.

Until now, virtually anyone could use cutting-edge AI technology. For free. ChatGPT started as a free low-key research project. Didn’t it ever occur to you how CRAZY that was? The most advanced software in the palm of your hand, for months on end—free, intuitive, accessible.

And yet some people kept looking for issues and flaws to dunk on it without dedicating at least a comparable amount of time to explore the tool. The jaggedness of AI ability didn’t help, sure. The landscape, abrupt; at times inscrutable, at times plain and dull. Only the adventurous who dared to dream saw the potential behind the apparent simplicity. For the rest, I kinda feel pity: AI’s jaggedness got the best of them. Instead of looking far out at the capes, they were looking inward, at the bays. Instead of marveling at the highest mountaintops they were fixated on the deepest of valleys and basins.

Well, jagged or not, AI won’t be free anymore. You had the chance. Did you take it? Because that’s over. It won’t be $20/month either. That’s over too.

From now on—and for the first time since AI was established as a consumer industry—this technology will slice the social terrain in two with a bottomless abyss in between and, as a result, two social classes will emerge.

The AI-rich and the AI-poor.