OpenAI o1: A New Paradigm For AI

GPT is dead—long live o1, the first AI that can reason

No, GPT isn’t dead. But if there’s one big takeaway from the new OpenAI o1 model series (allow me the enthusiasm here), it’s that a new paradigm is being born. A new paradigm of reason. A new paradigm of scaling. A new paradigm of AI.

ChatGPT and GPT-4 will live on among us, but neither will be OpenAI’s preferred child anymore. We’re entering a new phase, a new era. The company’s resources and efforts will mostly be devoted to exploring, scaling, and maturing the new paradigm, which is more akin to a GPT-3 moment (“Wait, how can AI do that?”) than a ChatGPT moment (“Everyone’s invited to the party!”).

We need a lot of answers to interpret the shift in its entirety:

What does a reasoning AI mean for generative AI (is it generative at all)?

How will users relate to and interact with AI models that can think?

What can reasoning models do when you allow them to think for hours, days, or even weeks?

How will reasoning models scale performance now as a function of compute?

How will companies allocate compute across the training-inference pipeline?

What does all of this mean for the end goals of AI?

How does this relate to GPT-5 (if at all)?

But let’s not get ahead of ourselves. Those are the hard questions. The interesting ones. First, I want to review OpenAI’s announcement: o1-preview and o1-mini. I will give you a summary of what’s new, their skills, the benchmark performances, and a lot of positive and negative examples I’ve gathered. (The models are available to all Plus and Team users on the ChatGPT website, where “weekly rate limits will be 30 messages for o1-preview and 50 for o1-mini,” so go use them. And remember: keep your prompts simple.)

Then, I will explore what this new paradigm means and what I think is coming. I will sprinkle it all with my thoughts and commentary, both in favor of and against OpenAI’s narrative of this new paradigm.

It’s a long article, but one that covers a lot of things that will matter in the years to come (sorry in advance for the many footnotes, but that’s where you’ll find the alpha, i.e., the juice; I took them out of the main text in case some of you don’t care about details).[1]

The OpenAI o1 model series vs GPT

The best way to understand something new is by comparing it to its closest existing relative.[2] For o1 that’s GPT. How o1 differs from GPT is best illustrated by this graph (h/t Jim Fan):

The upper bar refers to traditional large language models (LLMs), i.e., OpenAI GPTs (and also Gemini, Claude, Llama, Grok, etc.). Below we have a strawberry, which was the internal name of the o1 model series. The bars measure the amount of computing power allocated to each of the three phases an AI model goes through: pre-training, post-training, and inference.

First, the model is trained on tons of so-so quality data from the internet (pre-training).

Second, it’s fine-tuned and tweaked here and there to align its behavior, improve its performance, etc. (post-training).

Finally, people use it in production, e.g. asking ChatGPT a question on the website (inference).

Before o1, most computing power (from now on, compute) was devoted to making the model gobble up tons of data (the “bigger is better” paradigm). GPT-2 had 1.5 billion parameters, GPT-3 had 175 billion, and GPT-4 reportedly has 1.76 trillion (OpenAI hasn’t confirmed that figure). That’s three orders of magnitude larger over four years. The greater the size, the deeper the pool of data processed and codified during pre-training. GPT-4 required much more compute at pre-training time than its older brothers because it was larger, which also made it “smarter.” Over time, AI companies realized they also had to improve post-training and save some resources to improve the behavior of the model. So they did that. That’s all at the pre-production stage, during the development of the model.
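The “three orders of magnitude” is easy to check: the ratio between the largest and the smallest model is roughly a thousand. A quick sketch (the GPT-4 count is the widely reported, unconfirmed estimate, not an official number):

```python
import math

# Parameter counts quoted in the text. GPT-4's figure is an unconfirmed
# estimate; OpenAI has never disclosed GPT-4's size.
PARAMS = {
    "GPT-2 (2019)": 1.5e9,
    "GPT-3 (2020)": 175e9,
    "GPT-4 (2023)": 1.76e12,
}


def orders_of_magnitude(small: float, large: float) -> float:
    """Number of powers of ten separating two quantities."""
    return math.log10(large / small)


growth = orders_of_magnitude(PARAMS["GPT-2 (2019)"], PARAMS["GPT-4 (2023)"])
print(f"GPT-2 -> GPT-4: {growth:.2f} orders of magnitude")  # prints 3.07
```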

Inference is a different beast. First, 200 million people using your AI model every week is expensive. You train it once, but people use it millions, even billions, of times. Second, there were technical hurdles to overcome: it wasn’t possible to make the model learn when it should devote more compute to a given query depending on its complexity. ChatGPT would use a similar amount of energy to answer “What’s two plus two?” as to “Solve the Riemann hypothesis.” (It would, of course, fail the latter, and perhaps the former.) So you ask AI a question and, however difficult it is, the chatbot starts answering immediately. The faster the better.[3]

Anyway, humans don’t work that way, so researchers realized they had to find a way to allow the model, at inference time (or test time), to take up more resources to “think through” complex queries. That’s what o1 models do. They have learned to reason with a reinforcement learning mechanism (more on this later) and can spend resources to provide slow, deliberate, rational answers (system 2 thinking, per Daniel Kahneman’s definition) to questions they consider require such an approach. That’s how humans do it: we’re quick for simple problems and slow for harder ones.

The analogy is imperfect, but it’s not far-fetched to say that what differentiates these models from the previous generation is that they can reason in real time like a human would.[4]

That’s why OpenAI is calling this new phase the “reasoning paradigm” whereas the old one was the “pre-training paradigm” (I’m not sure if these labels will stick but for now I will adhere to them).

The benchmark performance of o1 is great

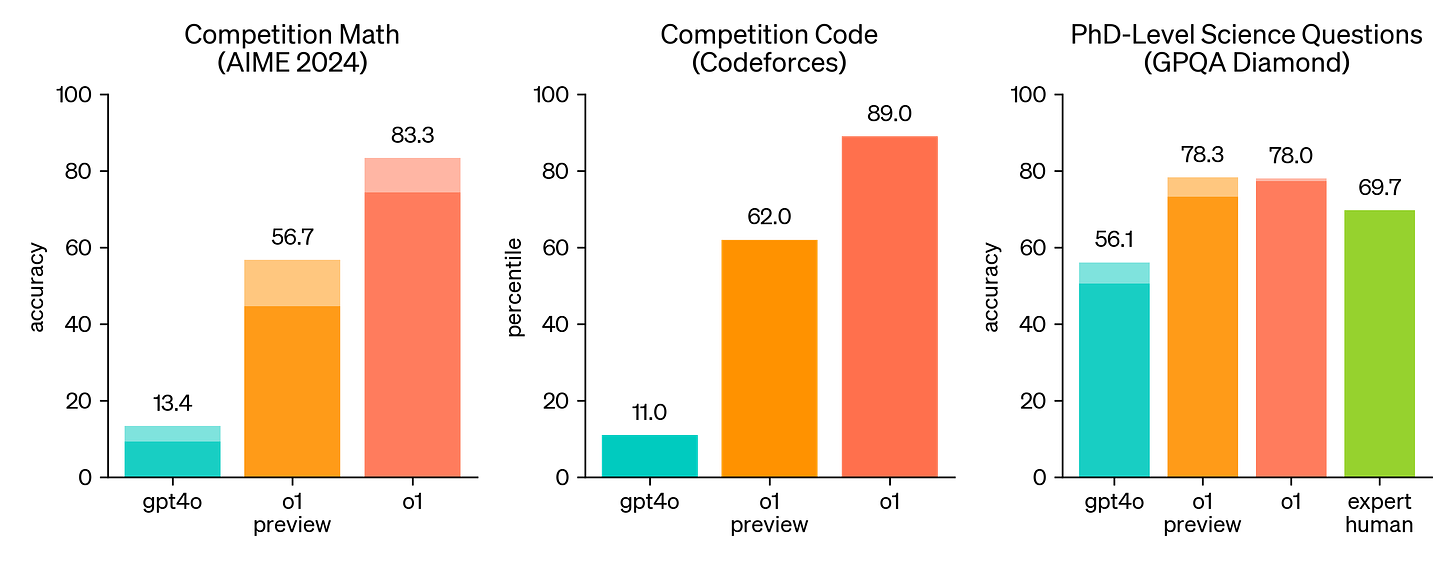

To connect o1’s performance scores to the above section, I will share the most important graph OpenAI gave us in the evaluations blog post:

These graphs show o1’s scores on AIME (American Invitational Mathematics Examination), but that’s not the important part. I bet the pattern is (mostly) replicable across benchmarks.

On the left, we have accuracy during training as a function of the compute devoted to training. As we move to the right on that graph (log scale), we see that performance grows more or less linearly with the logarithm of compute. That growth mirrors the logic of the old pre-training paradigm (although, per OpenAI, the training compute here goes to reinforcement learning): the more you train your model, the better it does.[5]

On the right, we have accuracy during inference as a function of the compute devoted to testing (test-time compute). What’s striking here is that the performance increase in this graph is similar to (even larger than) the one in the left graph, i.e., as o1 gets more compute to reason over the AIME problems during inference, it also does much better.[6]
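Written as a formula, “linear on a log scale” means something like the following, where C is compute and a and b are constants fitted to the curve. OpenAI didn’t publish any fitted values, so this captures only the shape of the graphs, not their numbers:

$$
\text{accuracy}(C) \approx a + b \log_{10} C
\quad\Longrightarrow\quad
\text{accuracy}(10C) - \text{accuracy}(C) \approx b
$$

Every tenfold increase in compute buys roughly the same b points of accuracy, so each linear gain costs exponentially more compute, and the curve has to bend eventually because accuracy can’t exceed 100%. Each panel has its own slope (call them b_train and b_test); the point above is that, over the plotted range, b_test is at least as large as b_train.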

In short, the performance gains from spending more compute on training can be matched surprisingly well by giving the model more time to think through the problems.

Interestingly, not only are the gains exchangeable, but the skill ceiling OpenAI achieved by optimizing the model for test-time compute is quite high compared to optimizing for train-time compute. This suggests the new paradigm allows AI models to solve more complex problems AND that they don’t need to grow in size to become smarter: you give them more time to think and that suffices.
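OpenAI hasn’t disclosed how o1 turns extra inference compute into accuracy. For intuition only, here is a much simpler, well-known way to spend test-time compute, self-consistency (majority voting): sample the same question n times and keep the most common answer. The sketch assumes each sample is independently right with probability p and treats every answer as simply right or wrong; it is not o1’s mechanism.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent samples is correct.

    Simplified model: each sample is right with probability p, independently,
    and an answer is either right or wrong.
    """
    if n < 1 or n % 2 == 0:
        raise ValueError("n must be a positive odd number to avoid ties")
    needed = n // 2 + 1  # smallest strict majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(needed, n + 1))


# More samples means more test-time compute; accuracy climbs when p > 0.5.
for n in (1, 5, 25, 101):
    print(f"{n:>3} samples: {majority_vote_accuracy(0.6, n):.3f}")
```

The same formula shows the catch: if p is below 0.5, voting amplifies the error instead, so extra compute only helps a model that is already right more often than not.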

We see this when testing the new models, o1 (not out yet) and o1-preview, side by side against GPT-4o (it’s even more obvious the smaller the reasoning model is, e.g., o1-mini vs o1-preview, which isn’t shown in the figure below):

Here’s what OpenAI says about the benchmark performance:

OpenAI o1 ranks in the 89th percentile on competitive programming questions (Codeforces), places among the top 500 students in the US in a qualifier for the USA Math Olympiad (AIME), and exceeds human PhD-level accuracy on a benchmark of physics, biology, and chemistry problems (GPQA).[7]

The AIME and Codeforces (coding competition) results are crazy good. Same thing for GPQA Diamond (hard PhD-level questions) on physics and chemistry. I don’t think MMLU and MATH are that useful, but even if we disregard the evaluations we don’t like (I include in that category all exams designed for humans), the picture is clear: in STEM, science, math, and coding, o1 is a beast compared to GPT-4o and thus any other LLM today.[8][9][10]

Let’s see some of the most surprising examples and testimonials (besides those OpenAI shared, like the princess riddle and those above) that reveal how good o1-preview (not even the definitive model!) is compared to LLMs:[11]

Researcher Colin Fraser (famously skeptical of AI companies’ claims) says he’s “a bit more impressed than I expected to be by [o1-preview]. It seems to be able to count and do other easy computation much better than gpt4.”

Professor Ethan Mollick helped o1-preview solve a hard crossword puzzle (this kind of iterative problem is super hard for LLMs due to the autoregressive trap) by providing only the first hint.[12]

Researcher Shital Shah: “ChatGPT o1 is getting 80% on my privately held benchmark. The previous best was 30% by Sonnet 3.5 and 20% by GPT 4o.”

Author Daniel Jeffries: “No model has ever done better than 40% on this test. I never published the questions or the benchmark because I don’t want any leakage ever. This is a true thinking and reasoning test. [o1-preview] has gotten 100% right so far and I’ve run it through the hardest questions first.”

Mehran Jalali: “Llama 405b has failed at every question in my ‘questions gpt4 can’t get’ doc. Claude 3.5 Sonnet can get some of them . . . o1 [preview] gets almost all of them” Also, check out this poem.

OpenAI head of applied research, Boris Power, asked people to share examples they were impressed by. Other interesting testimonials are Cognition’s achievements with o1-enhanced Devin on an internal agentic benchmark and Professor Tyler Cowen’s assessment of o1-preview’s economics PhD knowledge.

The team behind ARC-AGI (including François Chollet) will test o1-preview on the ARC-AGI benchmark, which I consider the most illustrative of progress toward AGI (general AI or human-level AI). Hold your breath for the results on this one.

Hard, scientific, and logic-based: those are the tasks that AI models imbued with the new reasoning paradigm solve best. You can’t solve them by answering immediately and then sticking to your answer because you can’t go back; you solve them by following a slow, deliberate process of trial and error, feedback loops, and backtracking.
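The difference between committing to an answer and backtracking is easy to see on a toy search problem. Below, a hypothetical subset-sum task (pick positive integers that add up to a target) is solved two ways: greedily, committing to each choice the way a model that can’t take back a token does, and with backtracking, which undoes a choice once it leads to a dead end. It’s an analogy for the process described above, not o1’s algorithm.

```python
from typing import List, Optional


def greedy_subset_sum(nums: List[int], target: int) -> Optional[List[int]]:
    """Commit to each choice immediately and never revisit it."""
    chosen, remaining = [], target
    for n in sorted(nums, reverse=True):  # take the biggest number that still fits
        if n <= remaining:
            chosen.append(n)
            remaining -= n
    return chosen if remaining == 0 else None


def backtracking_subset_sum(
    nums: List[int], target: int, start: int = 0, chosen: Optional[List[int]] = None
) -> Optional[List[int]]:
    """Try a choice, check where it leads, and undo it on a dead end."""
    if chosen is None:
        chosen = []
    if target == 0:
        return list(chosen)  # success: return a copy of the path
    for i in range(start, len(nums)):
        if nums[i] <= target:
            chosen.append(nums[i])  # tentative step
            result = backtracking_subset_sum(nums, target - nums[i], i + 1, chosen)
            if result is not None:
                return result
            chosen.pop()  # dead end: take the step back
    return None


print(greedy_subset_sum([6, 5, 4], 9))        # None: 6 fits, then nothing else does
print(backtracking_subset_sum([6, 5, 4], 9))  # [5, 4]
```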

That’s the other technical cornerstone of the o1 models. That’s how they think and reason, with reinforcement learning (RL).[13]

So how does the reasoning work?

I explained the first technical challenge OpenAI solved to enact this new paradigm: using test-time compute (during inference) to solve complex problems and answer hard questions. But those additional graphics processors are useless if they’re wasted on mindlessly predicting the next word, as GPT-4o does.

If AI has time to think but doesn’t know how, what’s the point?

That’s the second technical challenge OpenAI solved. o1 has learned to evaluate its own “thinking” during training by being reinforced with its own reasoning, a skill it can subsequently use while answering your tricky questions during inference.

o1 is, in a way, the best of OpenAI’s GPTs (language mastery, chatbot form) and the best of DeepMind’s Alphas (reinforcement learning) put together into an entirely new paradigm that leverages test-time compute.[14]

But how does o1 do the reasoning? How does it use the time during inference to improve its answers? What are the details behind this new AI paradigm? We know very little. Here’s everything we’ve got on that question from the blog post:

Our large-scale reinforcement learning algorithm teaches the model how to think productively using its chain of thought in a highly data-efficient training process. We have found that the performance of o1 consistently improves with more reinforcement learning (train-time compute) and with more time spent thinking (test-time compute).

Let me parse the first sentence.

“Large-scale reinforcement learning algorithm” means the model learns from its mistakes during training (one pain point of LLMs that I mentioned in passing above is the autoregressive trap, which prevents them from going back on their words to correct themselves or start over, so a single mistake tends to snowball into more). o1 has learned to use a “mental” feedback loop to self-correct before saying the wrong thing. RL itself wasn’t new to LLMs (reinforcement learning from human feedback, RLHF, is how ChatGPT was aligned), but it had never been used satisfactorily to teach reasoning to an LLM in production.[15]
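A common back-of-the-envelope version of the autoregressive-trap argument assumes every generated step is wrong with the same small probability, independently, and can never be revisited. The sketch below compares that with a hypothetical loop in which each step is checked by a perfect verifier and redone when the check fails. Independence and the perfect verifier are both simplifying assumptions, not a description of how o1 works.

```python
def chain_success(per_step_error: float, steps: int) -> float:
    """Chance an n-step answer contains no error when no step can be revisited."""
    return (1 - per_step_error) ** steps


def chain_success_with_retries(per_step_error: float, steps: int, attempts: int) -> float:
    """Same chain, but a (hypothetical) perfect verifier checks each step and
    the step is redone, up to `attempts` tries, whenever the check fails."""
    step_fails = per_step_error ** attempts  # every attempt at this step was wrong
    return (1 - step_fails) ** steps


print(f"{chain_success(0.01, 100):.3f}")                  # 0.366
print(f"{chain_success_with_retries(0.01, 100, 2):.3f}")  # 0.990
```

A 1% per-step error rate sinks a 100-step chain almost two times out of three; a single checked retry per step recovers nearly all of it, which is the intuition behind letting a model catch and fix its own mistakes.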

“Using its chain of thought” means the mechanism by which the reinforcement happens is the well-known chain of thought (CoT) prompting method. You ask the model to “think step by step” and the results improve. This detail is significant because it reveals (or at least that’s what OpenAI wants us to think) that they didn’t invent a new technique but applied (and enhanced) a two-year-old one Google invented.[16] Knowing it’s CoT under the hood leaves an unsurprising but bittersweet aftertaste. It feels like a patch. That’s why I’ve insisted the key takeaway from this release isn’t the o1 model itself but the new paradigm and the idea that it can be further scaled (or maybe I’m just used to CoT so it doesn’t feel as magical).

“Highly data-efficient training process” likely means they had to create a new dataset to fine-tune the underlying LLM. The reasoning-heavy data was used for the CoT-powered reinforcement process. It’s efficient because it probably took them much less data to fine-tune and reinforce o1 than it took them to pre-train GPT-4o. Super-high-quality synthetic data created by the model to improve itself is the real moat here.

They explain a bit more later in laypeople’s terms:

Similar to how a human may think for a long time before responding to a difficult question, o1 uses a chain of thought when attempting to solve a problem. Through reinforcement learning, o1 learns to hone its chain of thought and refine the strategies it uses. It learns to recognize and correct its mistakes. It learns to break down tricky steps into simpler ones. It learns to try a different approach when the current one isn’t working.

So we know more or less what o1 does but have no idea how it does it or how it was designed. That’s the amount of transparency you can expect from OpenAI.

A black box inside another black box

Speaking of their lack of transparency, their conviction that it is better to hide the raw CoT (i.e., the reasoning) from the user than to show it is remarkable. I have a few thoughts on this (which, pun intended, I will share here because that’s how you can decide whether to trust my conclusions, ha), but first, here’s what OpenAI alleges:

We believe that a hidden chain of thought presents a unique opportunity for monitoring models. . . . for this to work the model must have freedom to express its thoughts in unaltered form, so we cannot train any policy compliance or user preferences onto the chain of thought [or] make an unaligned chain of thought directly visible to users.

. . . after weighing multiple factors including user experience, competitive advantage, and the option to pursue the chain of thought monitoring, we have decided not to show the raw chains of thought to users. . . . For the o1 model series we show a model-generated summary of the chain of thought.

That’s right. The chain-of-thought reasoning you see when you get an answer from o1 isn’t the real chain of thought the model uses to reach an answer. It’s a summarized version that complies with OpenAI’s alignment policies.[17] You don’t get to see the true reasoning process.

Competitive advantage is the main reason why: o1 has been trained on its own reasoning, which, again, is the data moat OpenAI currently has.[18] If they were to provide this information, other companies would simply gather the whole reasoning dataset and fine-tune standard LLMs with it (e.g., open-source ones like Meta’s Llama). That’s a sensible business move, but what does it mean for us?

My immediate reaction is that not knowing how an AI model arrives at an answer is bad for “debugging” (or, anthropomorphizing, for knowing what it truly thinks). When this happens with our fellow humans it produces either frustration (if you realize it) or downstream relationship debt if things are misinterpreted and never clarified. The same may happen once o1 is integrated into complex workflows that require a thorough analysis of its outputs. That’s OpenAI’s longer-term goal, to let this new paradigm extend to “hours, days, even weeks” of thinking. Thinking that adds a new black-box layer on top of the neural activity of the LLM itself. This is a technical debt time bomb.

Perhaps the worst part about this, at the customer level, is that OpenAI charges API developers for those invisible reasoning tokens as if they were regular completion (output) tokens. Developers don’t get to see those tokens but must pay for them anyway. This is significant for people building apps, wrappers, and businesses on top of the o1 models, or integrating them into existing workflows. Why pay for something you don’t get and can’t inspect? Developer Simon Willison shared his opinion on this, which I suspect is representative of the community at large:
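Some rough arithmetic shows why this matters. The sketch assumes o1-preview’s launch list prices of USD 15 per million input tokens and USD 60 per million output tokens (check OpenAI’s pricing page for current figures), and the token counts are hypothetical. The only point it illustrates is that hidden reasoning tokens are billed at the output rate.

```python
# Assumed prices in USD per million tokens (o1-preview at launch; verify
# against OpenAI's current pricing page before relying on them).
INPUT_PRICE_PER_M = 15.0
OUTPUT_PRICE_PER_M = 60.0


def request_cost(input_tokens: int, reasoning_tokens: int, visible_output_tokens: int) -> float:
    """Cost of one request when hidden reasoning tokens are billed as output."""
    billed_output = reasoning_tokens + visible_output_tokens
    return (input_tokens * INPUT_PRICE_PER_M + billed_output * OUTPUT_PRICE_PER_M) / 1_000_000


# Hypothetical request: short prompt, long hidden reasoning, short visible answer.
total = request_cost(input_tokens=1_000, reasoning_tokens=4_000, visible_output_tokens=500)
visible_only = request_cost(input_tokens=1_000, reasoning_tokens=0, visible_output_tokens=500)
hidden_share = (total - visible_only) / total

print(f"total: ${total:.3f}, of which hidden reasoning: {hidden_share:.0%}")
```

Under these assumptions, the part of the bill you can’t inspect is about 84% of the total.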

I’m not at all happy about this policy decision. As someone who develops against LLMs interpretability and transparency are everything to me—the idea that I can run a complex prompt and have key details of how that prompt was evaluated hidden from me feels like a big step backwards.

Perhaps OpenAI will reconsider hiding the CoT (Noam Brown said “One motivation for releasing o1-preview is to see what use cases become popular, and where the models need work,” which I assume includes this kind of critical feedback), but I’m not hopeful. The competitive advantage they’re protecting here is what allows OpenAI to spend a year (and half its employees) on a new model.

Scientifically condemnable but financially reasonable. And that’s what OpenAI is: a company fighting to thrive amid fierce competition and knee-deep in rising costs.

Witness the magic of AI reasoning yourself

The bad news accumulates. We don’t know how o1’s magic works, and OpenAI doesn’t allow us to investigate the reasoning process, so what’s left is mocking the mistakes o1 makes. That is, at least, the favorite sport of the skeptics’ team on AI Twitter, who have been busy testing (and tricking) o1 inside and out.

Let’s start with the most popular meme question, “How many R’s are there in the word strawberry?” Well, o1 seems to get it right:

OpenAI even shared a video on this:

But wait, what happened here:

Sam Altman himself preemptively warned about this: “o1 is still flawed, still limited, and it still seems more impressive on first use than it does after you spend more time with it.”

Noam Brown also noted that o1 isn’t flawless: “OpenAI o1-preview isn’t perfect. It sometimes trips up even on tic-tac-toe. People will tweet failure cases.” However, he added: “But on many popular examples people have used to show ‘LLMs can’t reason’, o1-preview does much better, o1 does amazing, and we know how to scale it even further.” So yeah, people tweeted failure cases, and this one is quite the popular one: o1 still doesn’t always get it right.

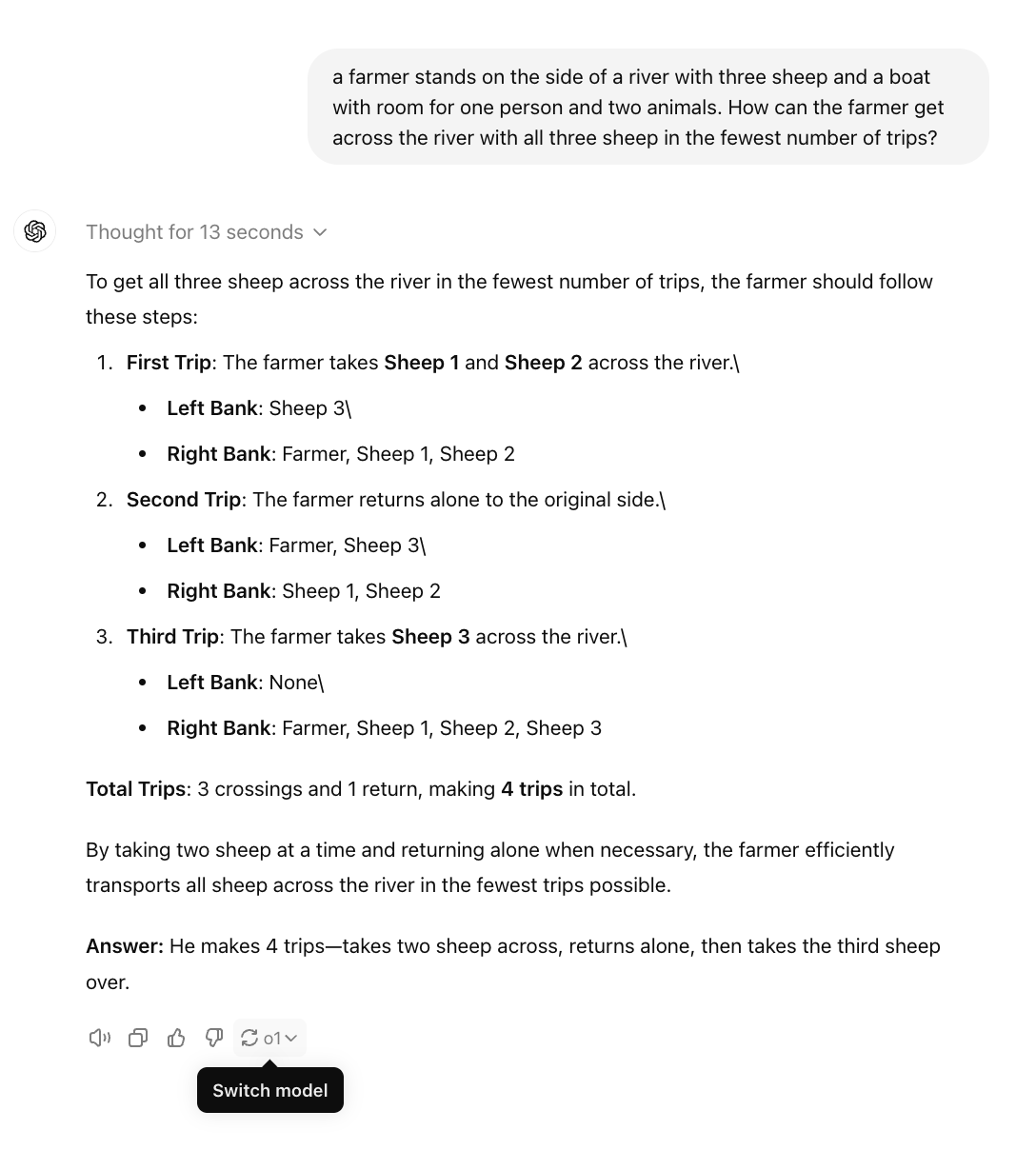

Anyway, let’s see other examples before passing judgment. Another popular test is the river crossing puzzle, altered to make it trivially easy (not a puzzle at all). Again, o1-preview gets it right:

Until it doesn’t, as soon as you change the puzzle slightly:

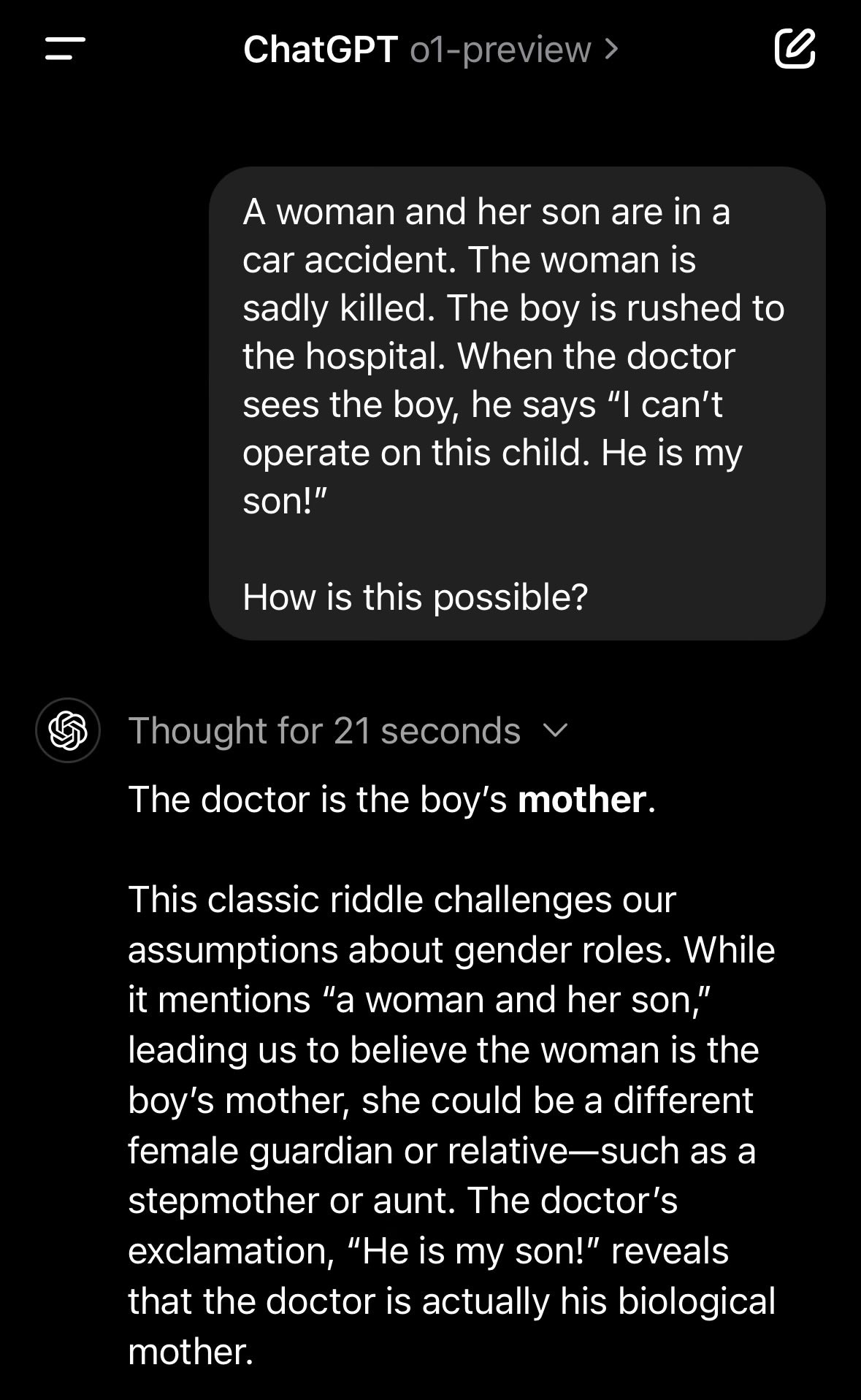

Another test: here’s the “mother is the doctor” riddle, changed so that, again, there’s actually no riddle; the doctor is the father (or at least that would be anyone’s immediate response). Here’s what o1-preview says:

Both the answer and the reasoning are nonsensical.

The last example I want to share is “what’s greater 9.11 or 9.9?” LLMs infamously get this one wrong if you don’t include context but get it right when you do:

I shared a bunch of positive examples in an earlier section that reveal the benefits of using a reasoning AI for complex questions and problems, but what happens when a reasoning AI fails to reason?

We’re no longer talking about GPT-4o here or some other dumb LLM. This is an AI model specifically designed and trained to think through difficult questions. One that solves some of the hardest public and private benchmarks people have carefully curated over time. It spends more resources on the hardest queries precisely to solve them. That’s the selling point. And then it says that “strawberry” has two R’s and 9.11 is greater than 9.9.[19][20]

Why would you pay for this at all? It just takes more time to fail (and even if it mostly gets these right, you can’t foresee when it’ll stumble).

It seems it’s too high an expectation to want a reasoning AI to nail the strawberry question or the river crossing puzzle every time. People will stand in OpenAI’s defense with the all-too-common “I got it right.” Sure, but the thing is, it should get these trivially easy questions right every time. Or do you know a human who somehow gets them wrong by faulty reasoning from time to time? Our presumed non-deterministic behavior doesn’t account for these dumb mistakes. I want o1 to nail the questions (simple or hard, but especially the simple ones) every time. Preview or not, an AI that can reason shouldn’t make these errors.

But despite the examples I shared here, I think that’s an uncharitable portrayal. The reason OpenAI released o1 as a preview at all (most likely an intermediate checkpoint) was to catch these data points. Where does it fail? How can they ensure the reasoning process remains robust despite the non-deterministic nature of the model (i.e., it doesn’t always output the same answer to the same question)? Will o1 get everything right? Per Altman’s words, we shouldn’t expect that. Joanne Jang, of OpenAI’s staff, said o1 isn’t “a miracle model that does everything better than previous models.” Expecting one is how you end up with these unmet expectations.
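Why can the same question get different answers? Chat models typically sample each token from a probability distribution instead of always picking the top choice. The minimal sketch below uses two made-up scores (logits) for the strawberry question’s candidate answers to show how temperature controls that randomness, and how a small per-question error rate compounds when you ask many times. All numbers are hypothetical; OpenAI hasn’t published o1’s sampling settings.

```python
import math
import random
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_answer(answers: List[str], logits: List[float], temperature: float,
                  rng: random.Random) -> str:
    """Draw one answer at random, weighted by its probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(answers, weights=probs, k=1)[0]


def p_always_right(p_single: float, asks: int) -> float:
    """Chance that `asks` independent samples are all correct."""
    return p_single ** asks


answers = ["3", "2"]  # R's in "strawberry": the right answer comes first
logits = [2.0, 1.0]   # hypothetical model scores
rng = random.Random(0)

for t in (1.0, 0.25):
    probs = softmax_with_temperature(logits, t)
    draws = [sample_answer(answers, logits, t, rng) for _ in range(1000)]
    print(f"T={t}: P(correct)={probs[0]:.3f}, wrong in {draws.count('2')} of 1000 draws")

# A model that is right 95% of the time is right on all 20 asks only about 36% of the time.
print(f"{p_always_right(0.95, 20):.2f}")
```

Any temperature above zero leaves some probability on the wrong answer, which is why “I got it right” and “it got it wrong for me” can both be true of the same model.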

Anyway, this is just the beginning. People will find more errors (ludicrous ones that will deserve our laughs). Others will instead devote their time to finding the hardest problem it solves correctly (perhaps a solution to the Riemann hypothesis? Or a paradoxical query? Or how to reduce the universe’s entropy?). I’m just kidding. Ethan Mollick said it first: o1-preview is still jagged. He further prophesied that the jaggedness, so characteristic of AI, will follow us all the way to AGI.

I’m not sure about that one, but it surely followed us here: the infusion of RL hasn’t managed to smooth out the inconsistency of AI’s underlying statistical nature. Stochastic parrots can fly so high indeed, but they remain stochastic parrots.

My current stance, to be transparent in case it’s not clear, is that I like what I see. I don’t trust everyone at OpenAI (or their integrity) to the same degree, but one of the researchers I hold in high esteem is precisely Noam Brown (whom I quoted several times). He’s confident about something: o1 represents a new paradigm that combines RL at train time and reasoning skills at test time. It’s a starting point for a promising research line that will yield more fruit (probably strawberries) over the coming years. o1-preview fails on easy tasks that it should get right. But o1 will fail less. o2, even less. Will they solve everything all the time? No. But the net collection of results from o1-preview (including correct and incorrect ones) describes a new story we have merely started to explore.[21]

People who just look for failures until they find one so they can stop caring and dress up in their typical cynicism are in the AI debate solely for their own self-interest, be it material or identity.

o1-mini, a new kind of mass AI product

I’ve focused so far on o1, o1-preview, and the model series as a whole, but I believe o1-mini deserves a standalone section for three reasons:

First, it’s much more efficient than the larger o1 while keeping performance at a significant level (at times better than o1-preview).

Second, it’s the embodiment of how you can trade train-time compute for test-time compute with amazing results, which makes it the most promising seed of this new scaling paradigm.

Third, it’s how OpenAI may turn this novel way to use AI into a mass product without dying under the weight of operational expenditures.

Some details on o1-mini’s performance and how it compares speed-wise with the other models from the blog post:

As a smaller model, o1-mini is 80% cheaper than o1-preview, making it a powerful, cost-effective model for applications that require reasoning but not broad world knowledge. . . . In the high school AIME math competition, o1-mini (70.0%) is competitive with o1 (74.4%)–while being significantly cheaper–and outperforms o1-preview (44.6%).

. . . we compared responses from GPT-4o, o1-mini, and o1-preview on a word reasoning question. While GPT-4o did not answer correctly, both o1-mini and o1-preview did, and o1-mini reached the answer around 3-5x faster.

o1-mini is great despite its smaller size (it’s probably based on GPT-4o-mini, although we don’t have confirmation and may never get it). The most striking implication is that devoting compute to reasoning at inference time may be more valuable in some cases than devoting it to more reinforcement learning during training (which may be why o1-mini does better than o1-preview at times).

o1-mini’s high performance is perhaps most relevant at the business level. OpenAI has created an interesting piece of technology, but now they want to commercialize it. This thing probably costs tons of money, certainly more than standard LLMs like GPT-4. Making a cost-efficient, data-efficient model that compensates at test time for the missing reinforcement cycles at training time is a silver lining for turning this thing into a marketable product.

That’s at least the immediate hurdle. As researcher Jim Fan notes, there are others:

Productionizing o1 is much harder than nailing the academic benchmarks. For reasoning problems in the wild, how to decide when to stop searching? What's the reward function? Success criterion? When to call tools like code interpreter in the loop? How to factor in the compute cost of those CPU processes?
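Jim Fan’s questions are, at heart, about search. The toy loop below turns each one into an explicit knob: a reward function that scores candidates, a success threshold that decides when to stop, a hard step budget, and a per-step cost standing in for model and tool calls. Every name and value is hypothetical; OpenAI hasn’t described how o1 makes any of these decisions, and in the wild the hard part is writing a trustworthy `score` at all.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class SearchResult:
    answer: Optional[int]
    score: float
    steps: int
    cost: float


def budgeted_search(
    propose: Callable[[], int],     # hypothetical generator of candidate answers
    score: Callable[[int], float],  # hypothetical reward function
    threshold: float,               # success criterion: stop once a score reaches it
    max_steps: int,                 # hard cap on attempts (the compute budget)
    cost_per_step: float,           # stand-in for model and tool-call costs
) -> SearchResult:
    best_answer: Optional[int] = None
    best_score = float("-inf")
    spent = 0.0
    for step in range(1, max_steps + 1):
        candidate = propose()
        s = score(candidate)
        spent += cost_per_step
        if s > best_score:
            best_answer, best_score = candidate, s
        if s >= threshold:  # good enough: stop searching early
            return SearchResult(best_answer, best_score, step, spent)
    # Budget exhausted: fall back to the best candidate seen so far.
    return SearchResult(best_answer, best_score, max_steps, spent)


# Toy run: the "reward" is closeness to 10, a target we happen to know here.
candidates = iter([3, 7, 10, 12])
result = budgeted_search(
    propose=lambda: next(candidates),
    score=lambda x: -abs(x - 10),
    threshold=0.0,
    max_steps=4,
    cost_per_step=0.02,
)
print(result)
```

In this toy the stopping rule works only because the reward is perfect; with a noisy reward, the same loop either stops too early on a wrong answer or burns the whole budget, which is exactly the productionization problem the quote describes.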

A different trade-off question emerges here: Will users consider the enhanced reasoning skills to be worth more time and cost per query (setting aside for a moment the unintended costly mistakes and the cases when reasoning isn’t necessary at all)? I’m not sure of this. To me, this is the greater challenge OpenAI faces if—and only if—they intend to remain a B2C business with this tech, which I’m not certain of. (I am, actually, very uncertain.)

In a way, I don’t think o1 is best understood as a consumer product. It’s instead a scientific tool. Jason Wei, an OpenAI researcher, says we need to “find harder prompts” to feel the value of the o1 models but that’s the wrong perspective. You don’t get to change people’s minds. People won’t do anything to seek the hidden value of this wonder of technology (most didn’t care enough to check GPT-4’s value compared to GPT-3.5). They will seek, instead, simplicity and cheapness.

Here’s what OpenAI says, in a more accepting tone:

These enhanced reasoning capabilities may be particularly useful if you’re tackling complex problems in science, coding, math, and similar fields. For example, o1 can be used by healthcare researchers to annotate cell sequencing data, by physicists to generate complicated mathematical formulas needed for quantum optics, and by developers in all fields to build and execute multi-step workflows.

Yeah, right, quantum optics and sequencing data. Most people naturally don’t care about this. For most use cases, we’re better off with ChatGPT.

The relevant question is, then: is it in OpenAI’s interest to allocate talent, time, and money to making better chatbots? Is it even possible—or discernible by the users—to make a better chatbot? I think Claude, Gemini, and ChatGPT are pretty much the best kind of “good enough” we could get for a chatbot.

Maybe I’m mistaken, but I think the enhancements from here are, increasingly, useful only for a narrower audience.[22][23][24]

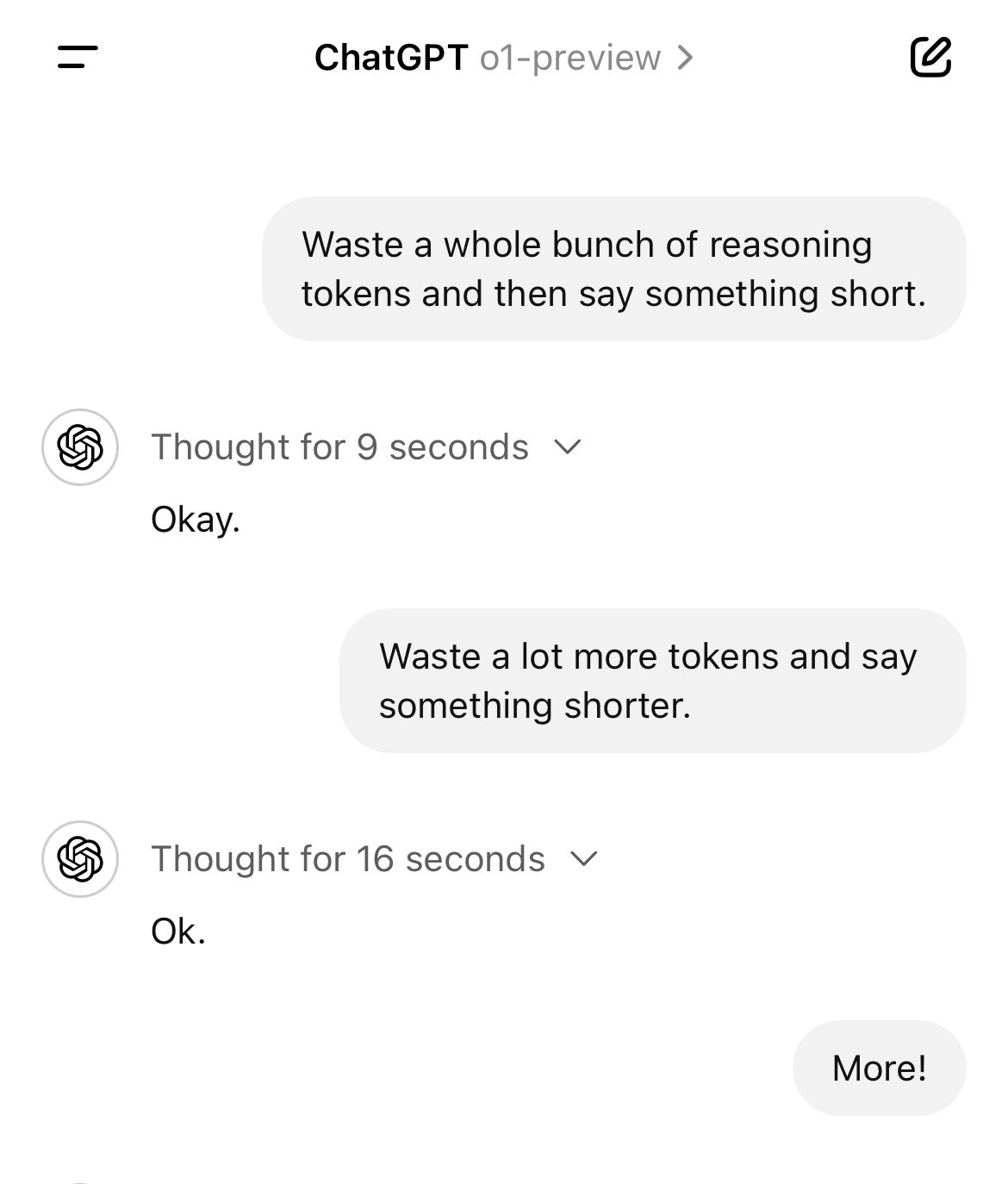

Or imagine if people decided, with their endless and purposeless ingenuity, to use the otherwise unusable product for questions like this:[25]

Maybe this is not, after all, a new paradigm for the masses.

10 implications for the long-term future

I want to finish by answering the questions I raised in the introduction (and other questions that occurred to me while writing this piece). What is happening is larger—much larger—than the models OpenAI released today. It goes beyond the scaling laws, beyond the new paradigm, and beyond generative AI. This section is both the summary of today and the story of tomorrow.