GPT-5 Has Brought the AI Industry to a Turning Point

9 implications for the future

It’s been 10 days since OpenAI released GPT-5 and I have some thoughts to share with you about where the AI industry is going (I promised there would be an “implications” post; this is it). What I like most about being a geographical outsider to AI circles (Spain is quite different from the Bay Area) is that I can say things as I see them: I can afford to keep a sober view, whether AI surges or crashes.

In broad terms, GPT-5 is neither as bad as detractors say nor as good as OpenAI staffers say. But the quality of its responses and its benchmark performances are of little importance. GPT-5 is, primarily, an inflection point in the industry’s aspirations. Or rather, an inflection point in its reckoning with the hard reality of artificial general intelligence (AGI). Here are 9 thoughts to help us figure out what this means.

I. OpenAI’s goal is to become a monopoly, not AGI

OpenAI’s current goal is not AGI but to become a monopoly on text chatbots (not multimodal models, apparently, given Sora Turbo’s flop, ChatGPT’s forgettable image quality, and OpenAI’s complete absence in the “world models” research space). In this sense, Meta CEO Mark Zuckerberg’s “personal superintelligence”—which is taking a weird shape before our eyes—is OpenAI CEO Sam Altman’s biggest threat (and not Google DeepMind’s Gemini or Anthropic’s Claude as many think).

As we learned from Altman’s statements on Nikhil Kamath’s podcast, GPT-5 was released with the Indian market in mind, which is poised to become the company’s largest “in the not very distant future.” OpenAI is, then, captured by “the tyranny of the marginal user”; the main target is those laggards who have yet to log into ChatGPT. This strategy often leads to preventable failures that affect the current userbase (e.g., why does a startup with a $500 billion valuation, serving 700 million weekly users, issue a global model update overnight and without warning?).

In the short term, OpenAI needs to find a satisfactory answer to this question: What does a hypothetical new user in a new market want?

My answer mirrors GPT-5’s top features, which reinforces the idea that the release was intended to fuel OpenAI’s monopolistic pretensions: UI/UX simplicity, a higher floor of capabilities (less making stuff up, less lying to users, easier access to reasoning models), but hardly a higher ceiling (not many people care about IMO gold math skills or a solution to the Riemann hypothesis).

OpenAI will try to advance the state of the art, but only insofar as it manages to reduce inference costs as it expands to promising markets and increases consumer revenue, growing into the biggest player—the single player—in an industry that will stabilize shortly, but only at a bubble’s whim.

II. GPT-5 is a product intended to reduce costs

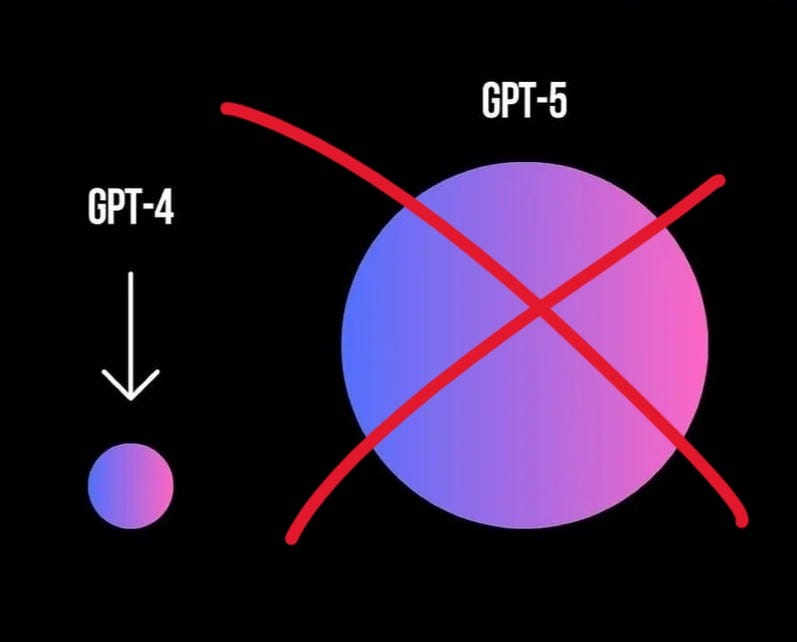

In line with the first point, GPT-5 is a product intended first and foremost to reduce inference costs. It is allegedly smaller than GPT-4o/o3 (it smells smaller). To give you a better reason than smell: if it weren’t smaller, OpenAI wouldn’t allow Plus users paying $20/month more messages on GPT-5 Thinking mode than it allowed on o3. All those charts you used to see circulating online about the size of GPT-5? All wrong.

But why does OpenAI prefer to reduce costs rather than improve capabilities? Because they’re struggling to serve 700 million people weekly.

This reminds me of the DeepSeek strategy: When the bottleneck is compute (make no mistake, OpenAI is not algorithmically or even data bottlenecked but GPU bottlenecked), you should devote your resources to optimizing infrastructure, silicon (chips/GPUs), compiler stacks, training throughput, and inference latency, and to making other “horrible trade-offs,” as Altman calls them.

The fact that OpenAI has so many Nvidia GPUs and is still short on compute suggests a bad prospect for scale maximalists: how much will you have to grow your processing capacity to reach AGI if such dumb models require millions upon millions of chips? They will say, “But GPT-5 being smaller and better is a good sign,” and I will respond, echoing computer scientist Grady Booch’s main criticism of large language models: “Or maybe you have the wrong architecture.”

III. The automatic model routing system is controversial

The main technical novelty that GPT-5 brings (that we know of; there could be more important things about how it was trained, but because OpenAI is so opaque, we don’t know) is the automatic model routing system.

Despite the initial pushback, I agree with roon (an OpenAI staffer) that it’s a better option to have a trained router decide which question goes to which model rather than the user choosing. Two reasons, one user-side and another company-side:

It simplifies the user experience, making it easier for casual users to taste the better models; the majority of users have a hard time telling models’ capabilities apart, or don’t even know that a tiered hierarchy exists. Altman shared how much the percentage of free users who interact with reasoning models each day grew after the GPT-5 release, and it surprised me more than any other data point about GPT-5: from <1% to 7%! Less than 1% were using o3 at all.

There are a lot of wasted tokens in using the wrong model (or in asking o3 to reason how many Rs there are in “strawberry,” only to mock the answer by posting it on Twitter). A trained automatic router will relay each query to the correct model unless the user specifically asks for a quick answer (after all, this is a pattern-matching task, for which AI is perfectly suited and humans aren’t).
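To make the routing idea concrete, here is a toy sketch in Python. OpenAI has not published how its router works, so everything in it is an assumption made for illustration: the model names, the cue words, the scoring formula, and the threshold are all hypothetical, and a keyword heuristic stands in for what would really be a trained classifier. It only shows the shape of the decision: score the query, send easy ones to a cheap model, and let an explicit request for a quick answer override the score.

```python
from dataclasses import dataclass

# Hypothetical model tiers; placeholder names, not real OpenAI identifiers.
FAST_MODEL = "fast-model"
REASONING_MODEL = "reasoning-model"

# Hypothetical cue phrases hinting that a query needs multi-step reasoning.
REASONING_CUES = ("prove", "derive", "debug", "step by step", "optimize")


@dataclass
class RoutingDecision:
    model: str    # which tier the query is sent to
    score: float  # estimated difficulty in [0, 1]


def difficulty_score(query: str) -> float:
    """Crude stand-in for a trained classifier: returns a score in [0, 1]."""
    text = query.lower()
    cue_hits = sum(cue in text for cue in REASONING_CUES)
    # Longer queries count as somewhat harder, capped at 50 words.
    length_term = min(len(text.split()) / 50.0, 1.0)
    return min(0.3 * cue_hits + 0.4 * length_term, 1.0)


def route(query: str, wants_quick_answer: bool = False,
          threshold: float = 0.3) -> RoutingDecision:
    """Pick a model tier; an explicit quick-answer request always wins."""
    score = difficulty_score(query)
    if wants_quick_answer or score < threshold:
        return RoutingDecision(FAST_MODEL, score)
    return RoutingDecision(REASONING_MODEL, score)


if __name__ == "__main__":
    for q in ("How many Rs are in strawberry?",
              "Prove that the sum of two even numbers is even, step by step"):
        print(q, "->", route(q).model)
```

In this sketch the “strawberry” question never reaches the expensive tier, which is the token-saving argument in miniature; a real router would presumably learn that boundary from usage data rather than from a handful of keywords.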

GPT-5 is an inference optimization + userbase homogenization release, both of which further OpenAI’s goal of becoming commercially unkillable.