Google’s Genie 3 Is What Science Fiction Looks Like

The most interesting AI release in 2025

I love to remind my readers that Google DeepMind is winning (yes, despite GPT-5, or even because of it). It is the unicorn I've been betting on for a long while in this race. But, until recently, it was the underdog—it’s had to battle OpenAI’s sly leadership for years (and its slier tricks). Google DeepMind’s CEO Demis Hassabis was forced out of his comfort zone, made of research papers, and into the cutthroat world of VC-subsidized products. It's not his forte, but like his AI models, he learns quickly.

The other day, I read an interview with The Guardian in which he said, “If I’d had my way, we would have left [AI] in the lab for longer and done more things like AlphaFold, maybe cured cancer or something like that.” So I feel the satisfaction of having bet right, but also the vicarious pride of being on the right side of history. I’m a simple man: I love the occasional witty jab at the expense of the bad guys.

Other times, the jabs and the battles and the racing are mute. These other times, Google DeepMind gives us something that speaks for itself. Something beyond OpenAI’s superficial marketing shenanigans. Something that, despite the wild products the AI industry has released over the past five years, still surprises me. “But it is what it is,” Hassabis tells The Guardian, grudgingly. “And there’s some benefits to that.” Today, thinking back on his words, I realize he was enjoying a kind of poetic justice: He was thinking of Genie 3.

Genie 3’s promo video release starts like this: “What you’re seeing are not games or videos, they’re worlds.” Google DeepMind is making two things clear right away: “this is a big deal” and “please, take it with a grain of salt.” So we will do both.

There's no better way to understand what Genie 3 is, what the implications are, and what it can do than to read the blog post. (There's no paper out yet, but you can always check related work by Jürgen Schmidhuber and David Ha, GameNGen by Google Research, HunyuanWorld 1.0 by the fellows at Hunyuan, and, of course, the earlier iterations of Genie, 1 and 2.) I’ll comment on the key parts.

What the hell is a world model?

This paragraph summarizes what you need to know at a high level:

Genie 3 [is] a general purpose world model that can generate an unprecedented diversity of interactive environments. Given a text prompt, Genie 3 can generate dynamic worlds that you can navigate in real time at 24 frames per second, retaining consistency for a few minutes at a resolution of 720p.

Let's parse it: “General-purpose” means that the model inside Genie 3 was not fine-tuned for one task but trained to support different environments and behaviors and to facilitate the emergence of new capabilities. Genie 3 is trained on a video dataset and generates interactive frames from text inputs. That's it. This is the standard approach for pretty much all modern AI models. (Both OpenAI's and Google's IMO-gold models are trained this way rather than being fine-tuned to solve hard math problems.)

“World model” means, as Google DeepMind uses it, “an AI system that creates worlds by rendering interactive frames,” but there's a more traditional definition: “the internal latent representation of how reality works that human and animal brains encode.” I think one of their research goals is to make the two synonymous; that is, to let the latter emerge spontaneously by doing the former.

So far, we have evidence that this doesn't happen: AI models are great at generating accurate predictions (of words, pixels, orbital trajectories) but terrible at encoding a robust and self-consistent set of ground laws. (Large language models, LLMs, encode syntax, semantics and other laws of grammar, but that’s easier than, say, encoding the laws that govern fluid dynamics or the Newtonian mechanics that describe and explain planet trajectories around the sun.) AI pundits believe scaling LLMs will eventually bypass these obstacles and they might be right; we don’t know. For now, let's not confuse the two definitions.

An “interactive environment” is basically a video game: you can perceive it, but also act on it. This is a qualitative upgrade from LLMs like ChatGPT, Claude, Gemini, Grok or even image/video models (Imagen 4 or Veo 3). Multimodality, as it's understood in the context of LLMs, includes image and video as inputs and outputs, but not action-effect input-output pairs. That's new and, as far as I know, Google DeepMind is the only AI lab working on it as part of its artificial general intelligence (AGI) strategy (I should perhaps include the Chinese lab, Tencent Hunyuan, in this camp). So Genie 3 is, in a way, a proto-engine for generating video games on the fly. As we will see, its uses extend to robotics and agentic research as well, which is arguably more valuable but not nearly as fun!

Real-time interactive generation = the future of AI

Now, let's see the specifications (we're still on that short paragraph I quoted above; the information density is super high!). We can only compare Genie 3 with Genie 2 because there's nothing else out there beyond a couple of websites. Genie 3 doesn’t allow image inputs yet (it apparently allows video inputs), whereas Genie 2 worked with text + images. Is this a step back? Not really: Genie 3 generates the world and the action-effect pairs in real time, and that’s computationally costly, meaning that making it work is a challenge in both a technical and a commercial sense. The significance and future implications of real-time interactive world generation can’t be overstated: In Genie 2, you had to input the sequence of action commands before generation, whereas Genie 3 feels like an actual video game (examples soon!).

In Genie 3, the world renders itself as you move or rotate or jump. You can even create new events by entering a new prompt. And if you interact with an object that has suddenly appeared in view, unprompted (it happens), the physical effects are also generated in real time; that is, the pixels and the physical reality those pixels represent are generated at the same time. Here's what Google DeepMind has to say about this technical victory:

Achieving a high degree of controllability and real-time interactivity in Genie 3 required significant technical breakthroughs. During the auto-regressive generation of each frame, the model has to take into account the previously generated trajectory that grows with time. For example, if the user is revisiting a location after a minute, the model has to refer back to the relevant information from a minute ago. To achieve real-time interactivity, this computation must happen multiple times per second in response to new user inputs as they arrive.

In one sentence: Generating interactive worlds in real time is harder than generating non-interactive videos in real time (e.g., Veo 3) and harder than making interactive worlds in a precomputed fashion with hard-coded rules (e.g., standard video games).
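To make the auto-regressive part concrete, here is a toy sketch in Python. It is emphatically not Genie 3's architecture (there's no paper, so nobody outside DeepMind knows that); `ToyWorldModel`, `Frame`, and the one-dimensional world are all names and simplifications I made up for illustration. What it does show is the shape of the problem: each new frame depends on the user's latest action plus the whole trajectory so far, and revisiting a place means looking back at what was generated there earlier.

```python
import random
from dataclasses import dataclass, field

# Hypothetical action set for a one-dimensional toy world.
MOVES = {"left": -1, "right": 1, "stay": 0}


@dataclass
class Frame:
    position: int
    scenery: str


@dataclass
class ToyWorldModel:
    """Illustrative stand-in for a world model, not Genie 3's real design."""
    rng: random.Random = field(default_factory=lambda: random.Random(0))
    trajectory: list = field(default_factory=list)  # every Frame generated so far

    def next_frame(self, action: str) -> Frame:
        last_pos = self.trajectory[-1].position if self.trajectory else 0
        pos = last_pos + MOVES[action]
        # Consistency: scan the whole trajectory for an earlier visit here.
        # The scan gets longer as the trajectory grows.
        for past in self.trajectory:
            if past.position == pos:
                scenery = past.scenery  # reuse what was generated before
                break
        else:
            # First visit: invent new content for this location.
            scenery = self.rng.choice(["tree", "rock", "river", "cabin"])
        frame = Frame(pos, scenery)
        self.trajectory.append(frame)
        return frame


model = ToyWorldModel()
# Actions arrive one at a time, as they would from a live user.
frames = [model.next_frame(a) for a in ["right", "right", "left", "left", "right"]]
for f in frames:
    print(f.position, f.scenery)
```

The user walks right twice, comes back, and walks right again, so position 1 appears three times with the same scenery each time. The linear scan is the naive version of "refer back to the relevant information from a minute ago," and it makes the cost visible: the longer you play, the more history each frame has to account for.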

More specs: Genie 3 works at 24 frames per second (it will presumably jump to 60 eventually, which is the minimum gamers will accept), retains consistency, i.e., memory of what has happened so far, for a few minutes (Genie 2 worked for 10-20 seconds at a time), and all of that at 720p resolution.
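A bit of back-of-the-envelope arithmetic shows what those numbers imply. The sketch below is mine, not DeepMind's: I'm assuming "a few minutes" means three and that 720p means the usual 16:9 frame of 1280×720 pixels, and the function names are just for illustration.

```python
def frame_budget_ms(fps: int) -> float:
    """Time available to generate one frame, in milliseconds."""
    return 1000.0 / fps


def frames_generated(seconds: int, fps: int) -> int:
    """Total frames produced over a session of the given length."""
    return seconds * fps


def pixels_per_second(width: int, height: int, fps: int) -> int:
    """Raw pixels the model must output every second."""
    return width * height * fps


print(round(frame_budget_ms(24), 1))       # 41.7 ms per frame at 24 fps
print(round(frame_budget_ms(60), 1))       # 16.7 ms per frame at 60 fps
print(frames_generated(180, 24))           # 4320 frames in an assumed 3-minute session
print(pixels_per_second(1280, 720, 24))    # 22118400 pixels per second at assumed 1280x720
```

So at 24 frames per second the model has roughly 42 milliseconds to read your input, consult its history, and paint the next frame, and going to 60 would cut that budget to under 17.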

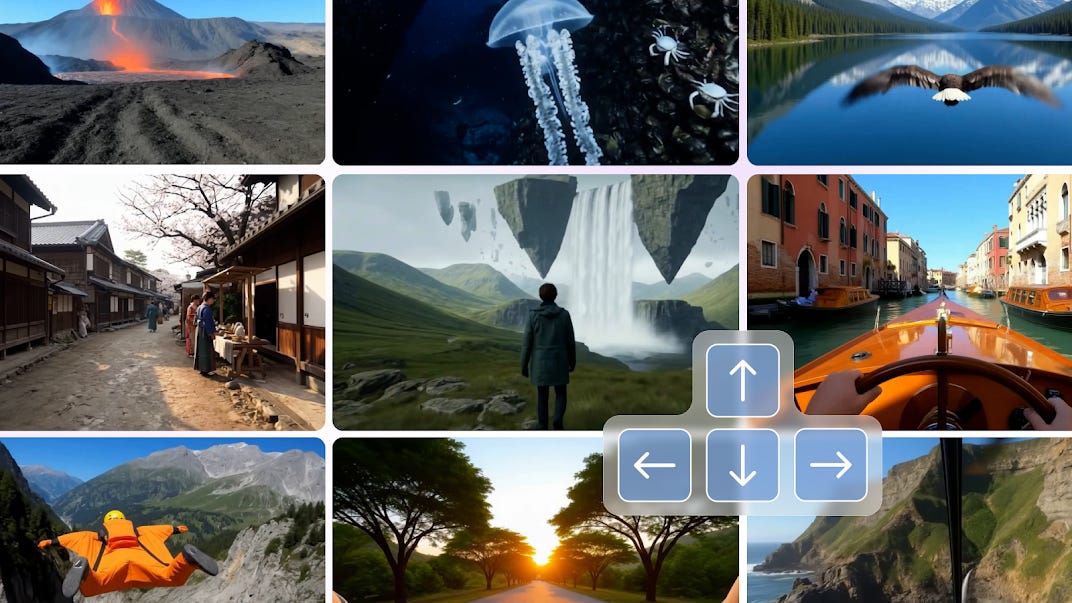

Eight impressive examples of Genie 3’s capabilities

Ok, enough words; this is a world-modeling AI, so let's see a few visual examples, along with the reasons why I found them impressive.

(Don’t get too excited just yet: Genie 3 is still a research preview, with no production pipeline in sight. Only a handful of appointed insiders have access, and it may be a while before we can try it at home, though Hassabis is already hinting at possible release ideas.)