The Controversial SB-1047 AI Bill Has Silicon Valley on High Alert

Experts are divided over whether it's a necessary action or the worst mistake for AI

I started this week’s top picks by deep-diving into the debate on the SB-1047 AI bill for California. When I looked up I was at 1500 words. This was too important to cut into a tiny segment of a longer review so today is a Weekly Top Picks of just one pick. I want to do the full review anyway so I may publish it tomorrow with the other entries.

The SB-1047 bill—which is set to become law on September 30th if everything goes according to plan and Governor Gavin Newsom signs it—could decide the future of Silicon Valley. Some say it’s for the better. Others say it’s the worst mistake California could make on AI in the two years since OpenAI released ChatGPT.

Democratic Sen. Scott Wiener introduced the bill in February 2024 to regulate the frontier AI space in California, taking inspiration from Joe Biden’s Executive Order on Artificial Intelligence. Wiener describes the bill as “legislation to ensure the safe development of large-scale artificial intelligence systems by establishing clear, predictable, common-sense safety standards for developers of the largest and most powerful AI systems.”

I’ve read it (the latest version after amendments). It isn’t hard even for someone like me who knows little about the law in general and much less about regulations specific to technology in California. I urge you to go read it even if you’re not a law expert or an AI expert. This bill, if successful, will turn into a precedent for other regulations inside and outside the US. Many of you are Californians (actually a striking 25%!) but even those of you who aren’t will get a lot of value from taking a peek.

Anyway, here’s a bite-sized summary of the details I consider most salient as the bill currently stands (if I got something wrong, let me know):

Developers (devs, understood as people training AI models affected by the bill) have to do extensive internal safety tests (including “unsafe post-training modifications”) for models they train or fine-tune that are covered by the bill, which before 2027 includes:

Models that cost above $100M to train.

Models that require more than 10^26 FLOP of compute to train.

Models that cost above $10M or above 3x10^25 FLOP to fine-tune as long as the pre-trained model was already a covered model.

Derivatives of these models (e.g. copies and improved models).
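To make the coverage rules above concrete, here is a minimal Python sketch that encodes them as I summarized them. It is not the statutory text: the `Model` record, its field names, and the `is_derivative` flag are hypothetical, and treating either threshold (cost or compute) as sufficient on its own is an assumption taken from my summary. The bill's actual wording may combine the conditions differently.

```python
from dataclasses import dataclass
from typing import Optional

# Thresholds as summarized in this article (pre-2027), not quoted from the bill.
TRAIN_COST_USD = 100_000_000
TRAIN_FLOP = 1e26
FINETUNE_COST_USD = 10_000_000
FINETUNE_FLOP = 3e25


@dataclass
class Model:
    """Hypothetical record; the field names are illustrative only."""
    train_cost_usd: float = 0.0
    train_flop: float = 0.0
    finetune_cost_usd: float = 0.0
    finetune_flop: float = 0.0
    base: Optional["Model"] = None  # model this one was fine-tuned or derived from
    is_derivative: bool = False     # copy or improved version of `base`


def is_covered(m: Model) -> bool:
    """Return True if the model is 'covered' under the summary above."""
    # Assumption: exceeding either training threshold is enough.
    if m.train_cost_usd > TRAIN_COST_USD or m.train_flop > TRAIN_FLOP:
        return True
    # The remaining rules only apply when the base model is itself covered.
    if m.base is not None and is_covered(m.base):
        if m.is_derivative:
            return True
        return (m.finetune_cost_usd > FINETUNE_COST_USD
                or m.finetune_flop > FINETUNE_FLOP)
    return False


frontier = Model(train_cost_usd=150e6, train_flop=2e26)
small = Model(train_cost_usd=5e6, train_flop=1e24)
big_finetune = Model(finetune_cost_usd=20e6, base=frontier)
small_finetune = Model(finetune_cost_usd=1e6, finetune_flop=1e23, base=frontier)
copy_of_frontier = Model(base=frontier, is_derivative=True)
finetune_of_small = Model(finetune_cost_usd=20e6, base=small)
```

In this simplified reading, an expensive fine-tune of a small model stays outside the bill, while a copy of a frontier model is covered no matter how little it cost to make.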

Developers have to create and implement a full-on safety and security protocol (as a detailed operation manual for other devs and users):

It’s focused on “taking reasonable care” that nothing goes wrong.

Devs have to conduct annual reviews accounting for changes.

From 2026 onwards, they have to retain third-party auditors.

They have to submit annual statements of compliance.

Developers have to implement a kill switch for the full shutdown of covered models (taking into account the repercussions of a shutdown, and excluding derivatives that are not in the developer’s possession).

The attorney general will have the power to take legal action against non-compliant developers, including those who fail to report incidents on time or in the required form.

Additional protections for whistleblowers (much-needed lately).

Silicon Valley, the birthplace of AI and generative AI, will undergo a profound transformation if the bill passes. The rapid progress and bold entrepreneurial spirit of these last two years will become a memory. Some industry players will consider relocating, but Wiener made sure this wouldn’t be a good choice: the bill affects companies headquartered in California but also any company that does business there.

There’s a silver lining, though. The threshold numbers that define whether an AI model is “covered” by the bill are quite high. $100M and 10^26 FLOP are figures beyond the ambition of most players, especially open-source indie developers and small labs. That’s why some people say the law would neither harm startups nor innovation—it’d only affect the biggest, wealthiest companies, like Google and Meta.

However, GPT-4, now two years old, already cost more than $100M to train, and 10^26 FLOP may not stay that expensive if we consider the rate of progress. In light of this, some prominent voices in the industry and academia say the bill will indeed stifle innovation.
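A quick back-of-the-envelope sketch shows why a fixed compute threshold gets cheaper to reach over time. The function below assumes the price per FLOP halves at a steady rate. The two-year halving time and the $100M starting cost are purely hypothetical inputs for illustration, not measured figures.

```python
def projected_cost(cost_today_usd: float, years: float, halving_years: float) -> float:
    """Cost of the same amount of compute after `years`,
    assuming the price per FLOP halves every `halving_years`."""
    return cost_today_usd * 0.5 ** (years / halving_years)


# Hypothetical inputs: a run that costs $100M today, price halving every 2 years.
for years in (0, 2, 4, 6):
    print(years, projected_cost(100e6, years, halving_years=2.0))
```

Under those assumed numbers, a training run that costs $100M today would cost $25M four years from now, which is the worry behind the claim that the thresholds will catch far more players than they do today.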

Among those who oppose the bill, we have the leading labs: Google, Meta, and OpenAI. Anthropic initially opposed it as well, to the surprise of many given its history of supporting safety-focused AI regulation, but after sending a letter with amendments to Wiener, the startup decided that the updated bill’s “benefits likely outweigh its costs.”

The big labs have the most explicit financial stake in the bill. They’re the absolute winners of the AI race and the ones with the highest odds of surviving any potential incoming bubble-bursting event. Any law limiting the rate of progress at the frontier is bad business for them. They have supported—even urged—national regulation on AI but suddenly a state bill is worse than nothing? It’s weird how their positioning seems to vindicate those who called them out on their kick-the-ladder tactics.

Did they want regulation, or did they want to stifle innovation so that no competitor could threaten them? Did they just want to hype up the technology they were selling by making press headlines every day? Did they genuinely call for federal lawmakers to act, or was it merely a virtue-signaling PR move they knew would fizzle out? Hard to say. But it’s easy for me to dismiss their arguments; they have a lot to gain from roaming freely in an unaccountable space.

Bloomberg shared OpenAI’s response to Wiener, which focuses on the idea that any high-stakes AI regulation should be done at the federal level, not as a “patchwork of state laws”:

A federally-driven set of AI policies, rather than a patchwork of state laws, will foster innovation and position the U.S. to lead the development of global standards. As a result, we join other AI labs, developers, experts and members of California’s Congressional delegation in respectfully opposing SB 1047 and welcome the opportunity to outline some of our key concerns.[1]

Wiener responded: Congress won’t do anything. It’s fine to prefer federal law but if the alternative is nothing, then what? He concluded his response to the industry’s opposition to the bill with this sentence: “SB 1047 is a highly reasonable bill that asks large AI labs to do what they’ve already committed to doing, namely, test their large models for catastrophic safety risk.”

Your move, big labs.

But it’s not just industry.

My approach to the industry’s claims is to hear them and then ignore them until someone else without covert interests expresses the same concerns in good faith.[2] Then I’ll consider it worth listening to what they say.

That’s exactly what happened.

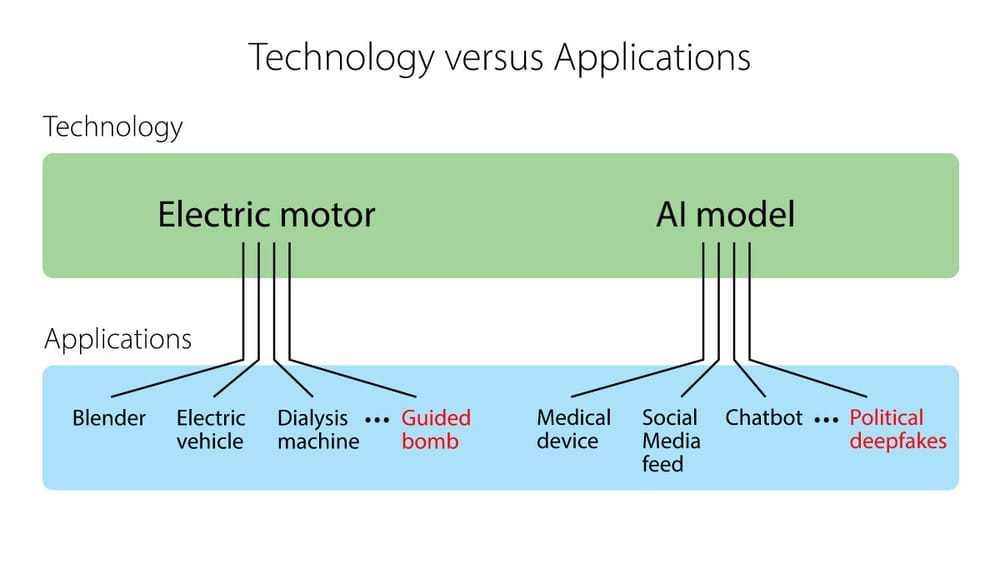

Individual voices mostly affiliated with academia (even Wiener’s party colleagues) oppose the bill. They think it’s “well-intended but ill-informed.” The reason? AI is a dual-use technology. It can be used for good and evil—like every other invention.

The main argument against this kind of sweeping regulation is that neither AI nor any other technology should be regulated so strongly at the technical level. Instead, governments should regulate how technology is applied. Developers should be free from the burden of downstream misuse by ill-intended actors and from the additional drudgery of monitoring and extensive reporting.

Here’s Fei-Fei Li, Stanford professor and creator of ImageNet:

If passed into law, SB-1047 will harm our budding AI ecosystem, especially the parts of it that are already at a disadvantage to today’s tech giants: the public sector, academia, and “little tech.” SB-1047 will unnecessarily penalize developers, stifle our open-source community, and hamstring academic AI research, all while failing to address the very real issues it was authored to solve.

Here’s Andrew Ng, Stanford professor and cofounder of Google Brain:

There are many things wrong with this bill, but I’d like to focus here on just one: It defines an unreasonable “hazardous capability” designation that may make builders of large AI models liable if someone uses their models to do something that exceeds the bill’s definition of harm (such as causing $500 million in damage). That is practically impossible for any AI builder to ensure.

Yann LeCun, Jeremy Howard, and many others have made similar remarks.

“But, what about tort law?” says Ketan Ramakrishnan:

. . . there are reasonable debates to be had about when the manufacturer/distributor of a dangerous instrument is acting unreasonably and thus is, or ought to be, liable for negligently enabling its misuse. But “only the users are liable” isn’t the law, and shouldn’t be.

“Only the users of AI are liable” never made sense to me either.

If you develop a technology (that you know can be harmful) without taking any care to make it safe and someone misuses it, it’s unreasonable to expect the user to be the only liable party. Frontier AI models should come with a manual, and now the companies building them will be forced to write one in the form of a safety and security protocol. SB-1047 may stifle innovation by adding annoying work no one wants to do, but perhaps it was never a good thing to push innovation at any cost.

I think there’s some middle ground that’s reasonable for big labs, small labs, private companies, open source, and academia. It should be neither zero regulation nor “you will bear the entire burden of the thing you gave birth to.” Whether this bill hits the nail on the head is a matter for legal scholars and impartial AI experts to evaluate. I'll defer to their judgment on these considerations.

Among the academics who support the bill, we find Geoffrey Hinton and Yoshua Bengio who, together with Yann LeCun, are the “godfathers of AI,” some of the biggest names in AI today. They both have expressed concern about the future of the technology they helped invent and have explicitly favored regulation.

Here’s Geoffrey Hinton:

SB 1047 takes a very sensible approach to balance [the incredible promise and the risks of AI]. I am still passionate about the potential for AI to save lives through improvements in science and medicine, but it’s critical that we have legislation with real teeth to address the risks. California is a natural place for that to start, as it is the place this technology has taken off.

Here’s Yoshua Bengio:

We cannot let corporations grade their own homework and simply put out nice-sounding assurances. We don’t accept this in other technologies such as pharmaceuticals, aerospace, and food safety. Why should AI be treated differently? It is important to go from voluntary to legal commitments to level the competitive playing field among companies. I expect this bill to bolster public confidence in AI development at a time when many are questioning whether companies are acting responsibly.

My conclusion without a conclusion is that these debates are intricate and often resist clear-cut stances. Yet they’re crucial for an industry that’s largely unregulated and poised to profoundly shape our world. Ultimately, I think two questions underlie the entire conversation. I’d say people’s stances on SB-1047 (and future laws) align quite well with how they answer these two questions:

Should the state have a say in how technological innovation happens?

Should we act more with an innovation-first or a safety-first mindset?

[1] My personal view on OpenAI (and Sam Altman specifically) is that they want to become part of a national government-controlled project to transform AI into a geopolitical advantage, just like the Manhattan Project against the Nazis and then the Soviets. Accordingly, Altman’s views on AI and the bill live in between the commercially focused approach of Big Tech and the opinion that an AI superintelligence may kill us all if we build it unintentionally, which is closer to Anthropic’s position.

[2] The debate around the neutrality of technology always finds analogies that both sides use to defend their position: a knife can be used both to cut and share bread or to kill someone, but it’s hard to imagine a positive use for a Kalashnikov. What is AI more similar to?
