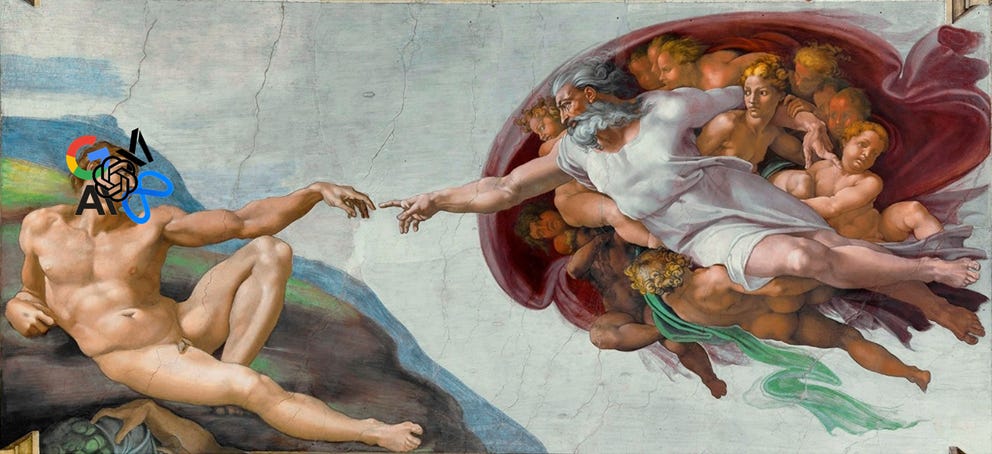

AI Companies Have Lost the Mandate of Heaven

I have bad news for the evangelists

Three stories. Three grim stories.

Everyone has reported on the first. Few have reported on the second. No one has reported on the third.

How they end will mark the AI industry for years.

I. The “scaling laws are done” story

The press has talked non-stop about the scaling laws plateauing. The Information, Reuters, Bloomberg, Axios, and Insider have all covered it (my article “GPTs Are Maxed Out,” surprisingly, predates most of the commentary).

Here’s the gist of it: for a while, the AI industry believed that more data and computing power would yield better AI without the need for algorithmic improvements or human ingenuity. This premise goes by different names: the “Bitter Lesson,” the “Scaling Hypothesis,” or simply the “bigger is better” mantra. The scaling laws have quietly dictated the progress of deep learning, large language models, and the GPT boom of the last decade. When the universe smiles at you, you smile back and don’t ask questions.
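To make the “bigger is better” premise concrete, here is a minimal Python sketch of a scaling law. It assumes the power-law form and fitted constants published in DeepMind’s 2022 Chinchilla paper (Hoffmann et al.), L(N, D) = E + A/N^α + B/D^β, where N is the parameter count and D the number of training tokens, plus that paper’s rough rule of thumb of about 20 tokens per parameter. The helper names are my own, and the numbers are illustrative, not any lab’s internal curves. What they show is that returns shrink even when the law holds: loss keeps falling as models grow, but each tenfold jump buys less than the one before.

```python
# Chinchilla-style scaling law (Hoffmann et al., 2022, fitted constants).
# Illustrative only: the helper names below are hypothetical, not from any library.
E, A, B = 1.69, 406.4, 410.7   # irreducible loss and fitted coefficients
ALPHA, BETA = 0.34, 0.28       # exponents for parameters (N) and tokens (D)


def predicted_loss(n_params: float, n_tokens: float) -> float:
    """Predicted pre-training loss for a model with n_params trained on n_tokens."""
    return E + A / n_params**ALPHA + B / n_tokens**BETA


def loss_curve(scales):
    """Loss at each model size, assuming ~20 training tokens per parameter."""
    return [predicted_loss(n, 20 * n) for n in scales]


if __name__ == "__main__":
    scales = [1e9, 1e10, 1e11, 1e12]   # 1B to 1T parameters
    losses = loss_curve(scales)
    for n, loss in zip(scales, losses):
        print(f"{n:.0e} params -> predicted loss {loss:.3f}")
    # The improvement bought by each 10x jump shrinks as loss approaches E.
    gains = [a - b for a, b in zip(losses, losses[1:])]
    print("gain per 10x:", [round(g, 3) for g in gains])
```

The irreducible term E is the floor no amount of scale removes; the other two terms are what bigger models and more data chip away at, ever more slowly.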

Now, however, it seems the gods are frowning down upon the AI labs. The leading labs have reportedly seen underwhelming performance from their next-gen models, with a gloomy conclusion: scaling isn’t enough.

Ilya Sutskever, one of the field’s leading authorities, confirmed as much to Reuters. The press eagerly amplified his statement and reduced it to a simplified narrative, one that skeptics, critics, and detractors were quick to embrace while ignoring any nuance. Predictably, the empirical observation about scaling morphed into a meme: “Scaling doesn’t work, so AI doesn’t work, so AI companies are doomed, so we were right all along!”

Wrong! What Sutskever actually said, which Reuters reported correctly but people chose to ignore, is that “Scaling the right thing matters more now than ever.” What isn’t enough is scaling the pre-training phase alone. This caveat is key to understanding what failed and what didn’t, what the AI companies will do now, and what we can expect from their new strategy.

Scaling is far from over; only this particular way of scaling this specific thing is falling short of expectations.

So the labs go looking for the next thing.

Is it bad news that the results from the pre-training scaling approach are plateauing? Well, yes. No doubt. But let’s not exaggerate to score a few “I told you so” points. As I wrote recently, extreme anti-hype is as bad as extreme hype.

II. The “de-scaling tricks are done” story

Scaling AI models up isn’t the only trick up the experts’ sleeves. Some prefer to scale big models down, making them more affordable while keeping performance intact. The GPU-poors don’t have $100 million to spare for a GPT-4-size monster, so they’ve invented tricks to compete with the AI aristocracy. One such technique is quantization.

Quantization is, in a way, the opposite of the “bigger is better” mindset. You could say quantized models swear by “smaller isn’t worse.” Here’s how tech journalist Kyle Wiggers describes quantization in an accessible, “explain like I’m five” manner:

In the context of AI, quantization refers to lowering the number of bits — the smallest units a computer can process — needed to represent information. Consider this analogy: When someone asks the time, you’d probably say “noon” — not “oh twelve hundred, one second, and four milliseconds.” That’s quantizing; both answers are correct, but one is slightly more precise. How much precision you actually need depends on the context.

So labs train large models and then quantize them down to make them cheaper to serve. The answers differ in precision but not in correctness. At least, that’s what they thought. New research suggests that extreme quantization, which could save GPU-poors a lot of money they don’t have, hurts accuracy as a by-product of making AI models too imprecise. (Even moderate quantization hurts performance on models trained on ever more data.)
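Here is a minimal sketch of what post-training quantization does to a model’s numbers. It assumes the simplest textbook scheme, symmetric uniform integer quantization with a single scale per tensor, and uses random Gaussian values as stand-in “weights.” Real deployments use finer-grained scales and other number formats, and the function names here are illustrative. Dropping from 8 to 4 to 2 bits makes the round-trip error grow sharply, a toy version of the imprecision described above.

```python
import random


def quantize(weights, bits):
    """Symmetric uniform quantization: map floats to integers in [-qmax, qmax]."""
    qmax = 2 ** (bits - 1) - 1                      # e.g. 127 for 8 bits, 7 for 4 bits
    scale = max(abs(w) for w in weights) / qmax     # one scale for the whole tensor
    return [round(w / scale) for w in weights], scale


def dequantize(q, scale):
    """Map the stored integers back to approximate float values."""
    return [v * scale for v in q]


def mean_abs_error(weights, bits):
    """Average error after a quantize -> dequantize round trip."""
    q, scale = quantize(weights, bits)
    restored = dequantize(q, scale)
    return sum(abs(w - r) for w, r in zip(weights, restored)) / len(weights)


if __name__ == "__main__":
    rng = random.Random(0)
    # Stand-in "trained" weights: small values centered on zero (an assumption).
    weights = [rng.gauss(0.0, 0.02) for _ in range(10_000)]
    for bits in (16, 8, 4, 2):
        print(f"{bits:>2}-bit: mean abs error {mean_abs_error(weights, bits):.6f}")
```

At 2 bits, this scheme leaves only three levels (negative, zero, positive), so most weights collapse to zero: the model can only ever say “noon.”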

So scaling up is partially failing, scaling down is partially failing, and combining the two fails too. How can that be? The answer appears to be simple: we’ve found both the ceiling and the floor of the current approach to AI.

I say “appears” because, again, the devil is in the details. Researchers have found the limits of quantization (i.e., simplifying a model after training) but not the limits of low-precision training (i.e., training the model with fewer bits per parameter from the start). To extend Wiggers’ analogy: instead of learning to say “twelve hundred, one second, and four milliseconds” and then rounding it to “noon” to cut costs, the model learns to say “noon” from the start, and nothing more precise than that.

The bad news is that this leaves labs with one option where they used to have several, and it’s better to have a few options than just one. What will happen when they find the floor of low-precision training as well?

REMINDER: The Christmas Special offer—20% off for life—is running from Dec 1st to Jan 1st. Lock in your annual subscription now for just $40/year (or the price of a cup of coffee a month). Starting Jan 1st, The Algorithmic Bridge will move to $10/month or $100/year. If you’ve been thinking about upgrading, now’s the time.