AI Apps Need a Higher Minimum Age

They are not for kids

I didn’t expect to have to write this article. I thought that even if AI hasn’t found its common sense, we humans still had a bit left.

Then I came across this article by the New York Times:

I’ll start with what might seem like an argument against the title I’ve chosen—but only so you can see there’s no contradiction between the opinions I’ve shared before and the one I want to lay out here: I’m a firm believer in the idea that we should trust people to use new technologies as they see fit, especially when we’re talking about double-edged ones, like AI.

But I am not so far out of my mind as to think that my usual stance, which I used yesterday to instill some responsibility in college students, applies to kids under 13.

Society can’t just “trust” that right-out-of-infancy boys and girls will use it well or even that they have a sense of what “using it well” means in the first place. When a child wields a double-edged tool, one of the edges is oversized. You know which one. Older people have mature executive functions and a far more developed relationship with responsibility toward their future selves (that’s all AI is about, by the way).

A kid, even under parental control, restricted access, or stricter safety barriers, is easy prey for companies like Google or OpenAI.

Google argues an “AI for kids” can level the playing field for “vulnerable population,” but that’s just a PR line, and the NYT wastes no time making it clear: “Google and other A.I. chatbot developers are locked in a fierce competition to capture young users.” No fierce competition is ever a race to see which company makes a kids’ product safer or, well, less engaging.

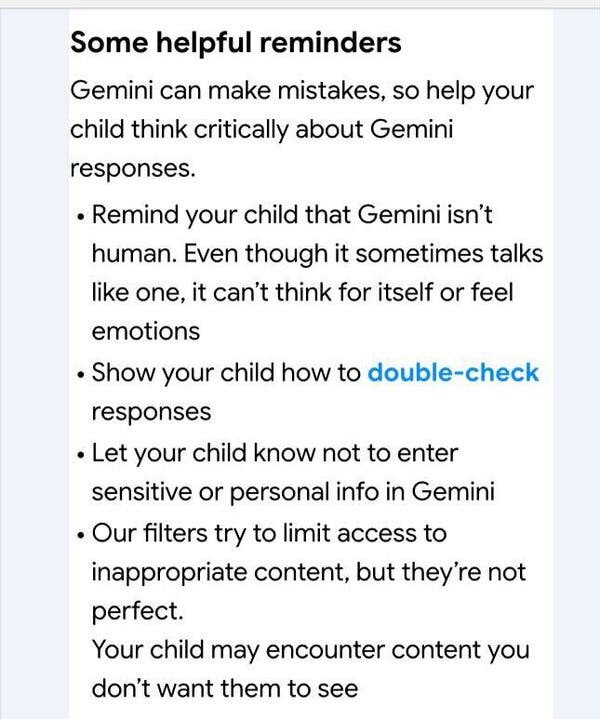

These companies have surely put the standard child-safety guardrails in place (Google says the service complies “with the federal children’s online privacy law”), but in case you’re new to this: no AI company (not Google, not OpenAI, not anyone else) has figured out how to reliably keep users or AI models from slipping past their containment or behavioral measures. AI is not a risk-free toy for kids. Google says “Gemini can make mistakes” and calls it a day.

Isn’t it easier to just not do this?

I’m not saying this is intentionally immoral; it’s just good business. Companies spend billions a year trying to crack open the massive market of kids, who can’t be swayed through traditional marketing tactics because they have no money of their own. But it is morally wrong. Our stance toward the most vulnerable shouldn’t hinge on whether AI companies close a good deal.

If companies won’t do what’s right (even if that means a couple of billion less this quarter), then it’s up to institutions and governments, acting on behalf of the entire population, to decide whether morality becomes law. No business, no matter how profitable, should stand above the well-being of our children. No self-respecting libertarian should go so far as to defend these kinds of practices. Sometimes, it turns out, having centralized power with the ability to create and enforce policy is a good thing. This applies to social media, smartphones, amusement parks, driving, and drinking, and it should also apply to AI apps.

I don’t think I’m proposing anything crazy. An age limit on ChatGPT makes sense once you accept that any specific cutoff is necessarily arbitrary, since nothing magically changes the day we turn 18 or 21 (not to mention that people mature at different rates). What matters is that the artificial line we draw through regulation reflects a real distinction between the people on either side of it, even if that line isn’t as perfect as we’d like.

There are many reasons why kids under 13 shouldn’t have access to ChatGPT or whatever else. One of them is that AI models are, quite appropriately, black mirrors.

“Mirrors” because their main personality trait is agreeableness (as revealed by that wild Rolling Stone piece, or by the recent ChatGPT update that was widely rejected for its excessive sycophancy and that OpenAI had to roll back), and “black” (as in “black boxes”) because no one knows for sure what they can or can’t do, which leads us to overlook dangerous abilities that are just a good prompt away.

Kids need someone who pushes back against their still-forming ideas more than someone (and they might not be able to tell there’s no one behind the screen) who validates whatever thoughts they happen to have. Maybe it’s not entirely fair to blame Character.AI for the suicide of 14-year-old Sewell Setzer III, which made headlines last October, but the company can certainly be faulted for commercializing at all a product incapable of handling that kind of conversation (note the moral weight there) while specifically targeting kids with engagement metrics.

When OpenAI’s o3 can pinpoint your house from a single image of your neighborhood, your child is in danger of being located by whoever is on the other side of the chatbot (ChatGPT might be safe; the copycats circulating in app stores, not so much). Visual doxxing is not a common capability yet, but it will be. It’s always hard to know what information a kid might reveal online, but we’re talking about pulling an address out of a picture. Not even adults are prepared to guard against that.

In case you’re willing to side with Google on the grounds that parental controls are enough, let me gently say that you don’t spend enough time online. Digital-native kids are good at bypassing things, much better than their parents. If a kid wants to access something, chances are high they’ll find a way. Friends exist. Reddit exists. ChatGPT exists (or have we forgotten how easy it is to get it to jailbreak itself?).

And notice: to illustrate the kind of dangers I’m talking about, I’ve purposefully picked recent news stories. It’s happening today, as I write these lines.

AI apps need a higher minimum age requirement. Of course, kids can also bypass age limits. The limit is not aimed at them but at companies like Google: it would stop them from whitewashing their attempts to capture a profitable, untapped market with lines like “parents are in control” or “special guardrails are in place,” and force them to simply not do it. Humans have certainly lost their common sense if we can’t agree that not everything should be turned into a market.

Let’s not repeat the mistakes we made. Look where they got us.

Let’s not forget Meta’s recent screw-up in the kids+AI arena:

https://www.wsj.com/tech/ai/meta-ai-chatbots-sex-a25311bf?st=5N9kEa&reflink=article_copyURL_share

What safeguards does OpenAI have against this in ChatGPT, and how are they tested? What stops a kid from engaging in explicit conversations with these apps?

And just… why? Why TF are we doing this?

Thanks for this post, Alberto, and I appreciate the visceral reaction; too many people are already numb. We've seen what social media (and specifically, the drive to maximize engagement) has done to the younger generation. We're about to turbocharge that with AI. Age limits aren't a panacea, but they're a necessary starting point. Kudos to Australia for being the first to pass a minimum-age law for social media (the age is 16; not high enough, but it's a start). Unfettered access to AI models at 12, powered by companies focused on out-competing everyone else so they can be the first $10T company...yikes! Sure, AI has benefits, but in its current form the potential for harm to kids, especially because it's uncontrollable and unpredictable, is not worth the risk. I don't want the government controlling my life. But if the incentives aren't aligned with our interests (as individuals and as a society), then we need a few guardrails unless and until we can rein in the misaligned incentives.