Weekly Top Picks #114: Anthropic's Moment

Anthropic's exponential $$$ / Anthropic v. Pentagon / AI fatigue / Gemini 3 Deep Think & Seedance / AI safety is not enough / Slop cannons / Claude's soul

Hey there, I’m Alberto! 👋 Each week, I publish long-form AI analysis covering culture, philosophy, and business for The Algorithmic Bridge. Paid subscribers also get Monday news commentary and Friday how-to guides. I publish occasional extra articles.

I’m making three small changes to the Weekly Top Picks column (I’ll be iterating as I see fit and according to your input):

I’ve renamed “Money & Power” as “Money & Business” to avoid ambiguity

I’ve removed the sections of “best essay” and “best note” until I decide whether I can do a bit more with those than just sharing them

I’ve switched the “Rules & Regulations” section for “Philosophy” (at the end). There are plenty of people covering the legal and regulatory aspects of AI with way better preparation than I. Besides, it’s terribly boring. Philosophy is more speculative and future-focused, which I like

THE WEEK IN AI AT A GLANCE

Money & Business: Anthropic raised $30 billion; Dario Amodei tells Dwarkesh he’s 90% on AGI within a decade but can’t buy the compute to match.

Geopolitics: The Pentagon may cut ties with Anthropic over two red lines: no mass surveillance and no autonomous weapons.

Work & Workers: An HBR study and a developer’s personal account arrive at the same conclusion: “AI tools don’t reduce work, they intensify it.”

Products & Capabilities: Gemini 3 Deep Think tops ARC-AGI-2; Chollet, the benchmark’s creator and longtime LLM “skeptic,” says “AGI ~2030.”

Trust & Safety: There’s a gap between what the field of AI safety measures and what’s already happening unsupervised on the open internet.

Culture & Society: Slop cannons and turbo brains: the case for getting good at what you want AI to help you with.

Philosophy: The WSJ profiled the philosopher shaping Claude’s soul; the questions her work raises matter for the entire world.

THE WEEK IN THE ALGORITHMIC BRIDGE

(PAID) Weekly Top Picks #113: As bad as the Industrial Revolution / Nature: AGI is here / Moltbook was fake (of course) / Don’t obsess over what AI can or can’t do: find your authentic self

(PAID) Why Smart People Can’t Agree on Whether AI Is a Revolution or a Toy: The gap between enthusiasts and skeptics isn't about intelligence but about living different lives. You don’t argue someone out of their own experience.

MONEY & BUSINESS

The exponential is real, at least in the spreadsheet

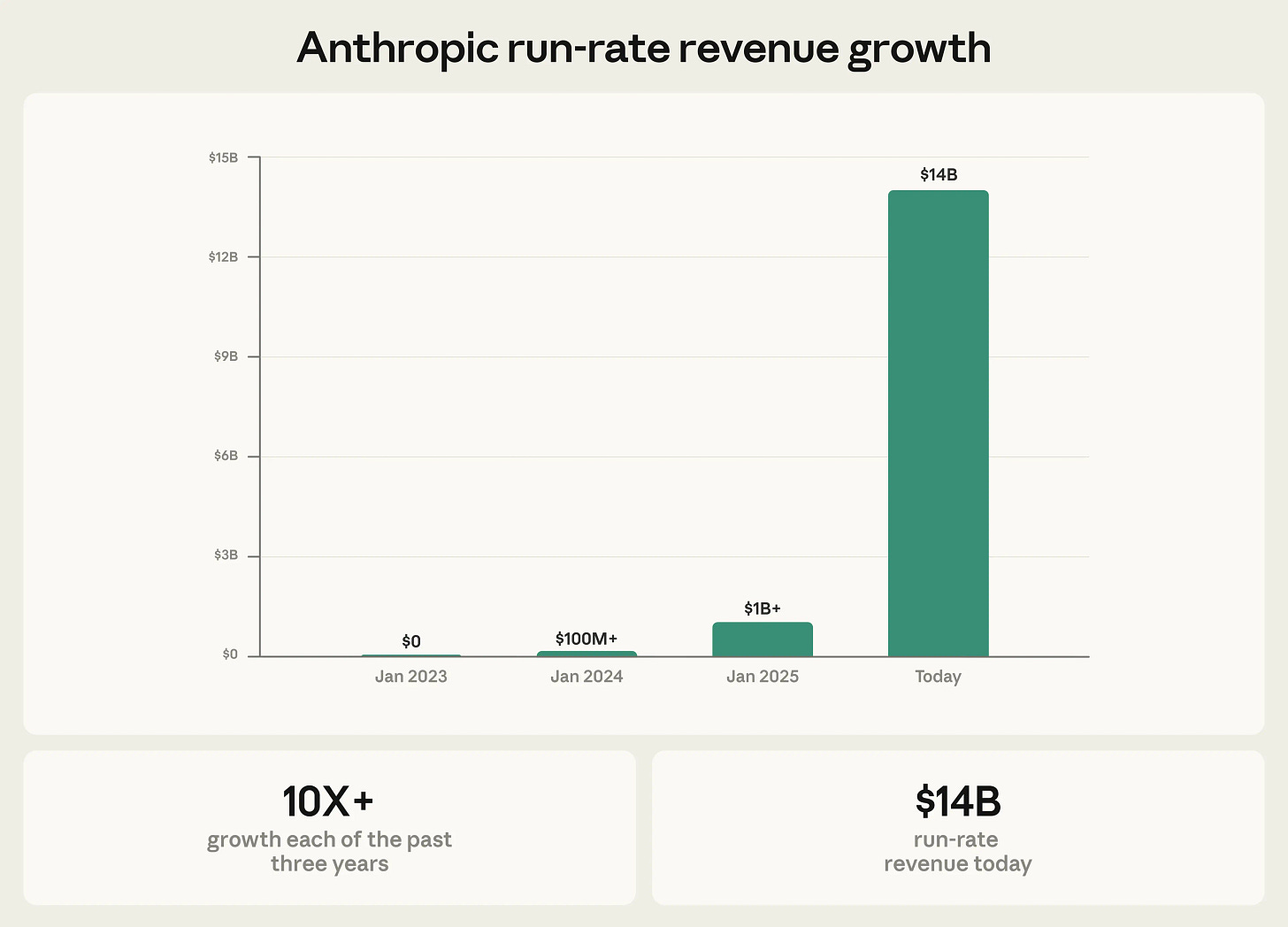

Anthropic raised $30 billion in Series G funding this week at a $380 billion post-money valuation, with run-rate revenue at $14 billion, having grown 10x annually for three consecutive years. Claude Code alone has crossed $2.5 billion in run-rate revenue, more than doubling since January 1.

Semianalysis estimated that 4% of all public GitHub commits worldwide are now authored by Claude Code, double the share from one month prior:

4% of GitHub public commits are being authored by Claude Code right now. At the current trajectory, we believe that Claude Code will be 20%+ of all daily commits by the end of 2026. While you blinked, AI consumed all of software development.

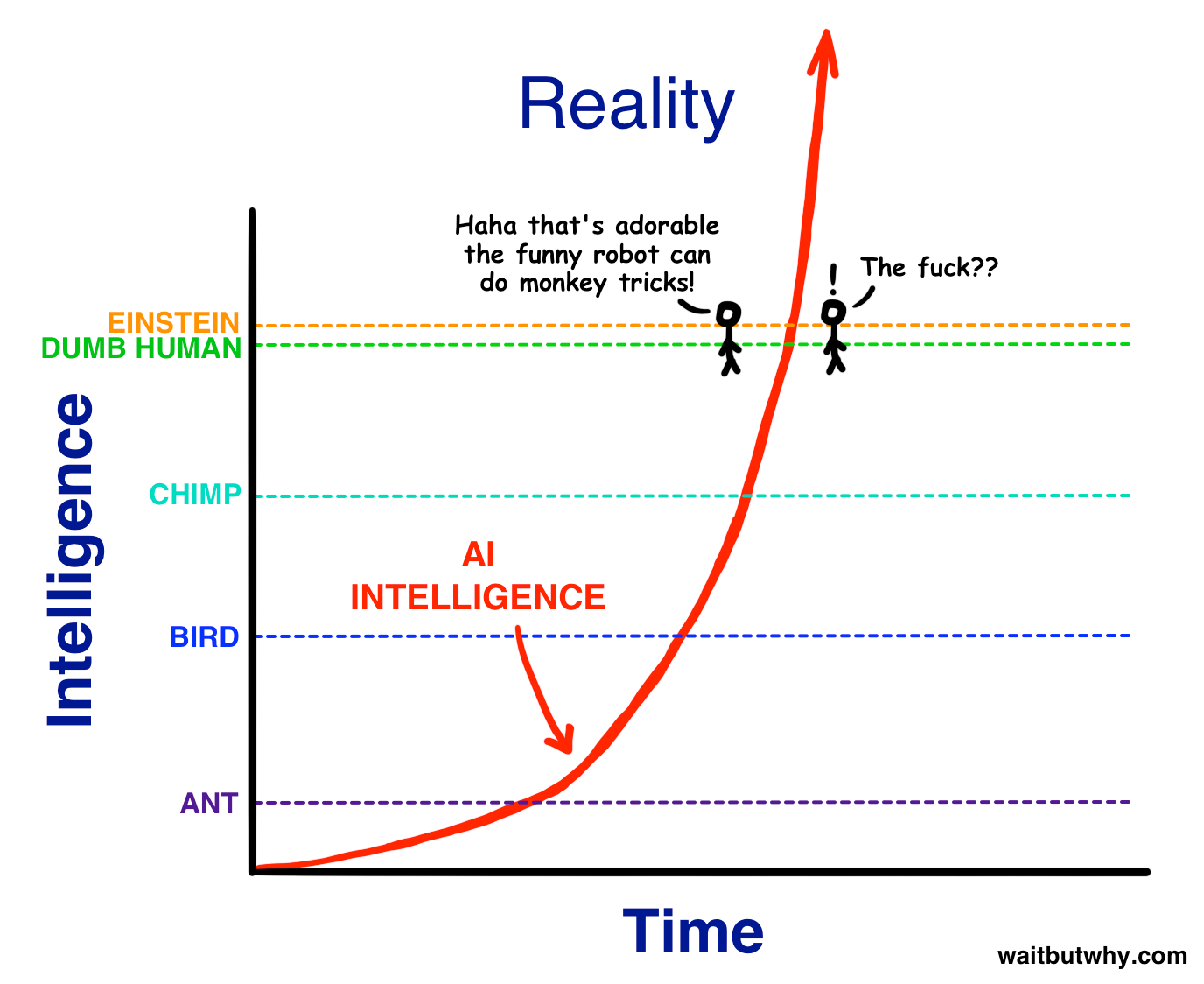
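For a rough sense of what that projection implies, here is a back-of-the-envelope sketch in Python. It takes the two figures from the quote (4% now, 20% by the end of 2026) and assumes smooth compound growth. The roughly ten months remaining in 2026 and the function names are my own assumptions for illustration, not anything Semianalysis published.

```python
import math


def months_to_reach(start_share: float, target_share: float, monthly_factor: float) -> float:
    """Months needed to grow from start_share to target_share
    at a constant monthly multiplier (e.g. 2.0 = doubling each month)."""
    return math.log(target_share / start_share) / math.log(monthly_factor)


def implied_monthly_factor(start_share: float, target_share: float, months: float) -> float:
    """Constant monthly multiplier that takes start_share to target_share in `months`."""
    return (target_share / start_share) ** (1.0 / months)


current_share = 0.04   # from the quote: 4% of public GitHub commits
target_share = 0.20    # from the quote: 20%+ by the end of 2026
months_left = 10       # ASSUMPTION: roughly mid-February to end of December 2026

# Scenario 1: the share keeps doubling every month, as it did last month.
doubling_months = months_to_reach(current_share, target_share, 2.0)

# Scenario 2: the growth rate needed to land exactly on 20% at year end.
needed_factor = implied_monthly_factor(current_share, target_share, months_left)

print(f"Months to 20% if the share keeps doubling monthly: {doubling_months:.1f}")
print(f"Monthly growth needed to hit 20% in {months_left} months: {(needed_factor - 1) * 100:.1f}%")
```

Under those assumptions, monthly doubling would reach 20% in a bit over two months, while the year-end projection only needs growth of roughly 17% per month. In other words, the 20% figure already bakes in a sharp slowdown from last month's pace.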

In a conversation with Dwarkesh Patel, Dario Amodei said this: “What has been the most surprising thing is the lack of public recognition of how close we are to the end of the exponential.” He puts 90% confidence on reaching a “country of geniuses in a data center” within ten years, and roughly 50/50 on one to three years. I’d say the reason people are not “paying attention” is simple, actually: most people are witnessing the exponential unfold slightly to the left, which makes it feel like we’ve just hit the “adorable funny robot doing monkey tricks” stage.

The timeline prediction isn’t news—AI CEOs predict exponentials like one predicts cold in winter—but the tension with how Anthropic actually behaves makes it interesting: if you believe AGI is one to three years out, why not spend every dollar on compute? That was Dwarkesh’s question. Amodei’s answer: The company can’t commit to $1 trillion in 2027 compute because if the timeline slips by even a year, “there’s no hedge on earth that could stop me from going bankrupt.”

The gap between what the CEO believes about the technology and what the CFO can responsibly spend tells you something about where they actually are. And about how unstable and vulnerable to forecasting mistakes the industry as a whole is (that’s why the bubble talk has gotten so much visibility).

Amodei also sketched the competitive endgame: three or four players, high barriers to entry, and differentiated products. That is cloud economics, not commoditization. “Everyone knows Claude is good at different things than GPT is good at, than Gemini is good at.” Whether that differentiation survives is another question. He acknowledged Anthropic is “not perfectly good at preventing some of these other companies from using our models internally.” It’s not for lack of trying, though.

Anthropic is, in other words, a company that believes it is building God and yet is worried about the electricity bill. That’s prudence and cognitive dissonance at the same time, which is something we might see more of as things get weirder and we get closer to the end of the exponential in our fragile flesh-and-blood suits.

Sources: Anthropic, Semianalysis, Dwarkesh Podcast, Wired

GEOPOLITICS

The Pentagon doesn’t like Anthropic’s red lines

Axios reported this week that the Pentagon may end its partnership with Anthropic. The military wants AI labs to make their models available for “all lawful purposes,” and Anthropic won’t agree. The company maintains two hard limits: no mass surveillance of Americans and no fully autonomous weaponry. The other three labs working with the Pentagon (OpenAI, Google, xAI) have been more accommodating; one has reportedly already accepted the military’s terms.

The relationship deteriorated further over the operation to capture Venezuela’s Nicolás Maduro, conducted through Anthropic’s partnership with Palantir. According to Axios, a senior administration official claimed an Anthropic executive contacted Palantir to ask whether Claude had been used in the raid, implying disapproval because the operation involved lethal force. Anthropic denied this, saying its conversations with the Pentagon have focused on policy questions around its two hard limits and do not relate to specific operations.

The story has the texture of a pressure campaign: anonymous official, specific framing of Anthropic as the difficult partner, emphasis on competitors’ flexibility. Is the industry ganging up on the safety-focused, AI-rights-matter weirdos at Anthropic? I don’t know. But whether it works will say something about how durable safety commitments are when the customer is the Department of War.

Sources: Axios

WORK & WORKERS

Using AI at work saves you time, so now you have time to do more work

A study published in Harvard Business Review this week followed 200 employees at a tech company for eight months and found that AI tools didn’t reduce work as would be expected but instead intensified it. Workers moved faster, took on a broader scope, and bled work into lunch breaks and evenings, all without being asked. As one engineer put it: “You had thought that maybe, oh, because you could be more productive with AI, then you save some time, you can work less. But then really, you don’t work less. You just work the same amount or even more.”

I find this to be true in my own life. Being self-employed is always like this, so I don’t really feel such a stark difference, but I realize that I personally see AI, especially the latest tools, as an enabler for everything I didn’t have time to do before. So now the spare time is used up by doing those things. I think a better framing might be something I recently shared with my readers:

What AI actually does—when it works—is give you time back. So the question is: What would you do with four extra hours per day? And then find the way to use AI to allow you to do just that. If you don’t, you risk only adding more work on top: an ultra-productive compressed version of the same day. Instead, make your days equally productive + unique otherwise.

Researchers identified three patterns: “task expansion” (people absorbing work that used to belong to others because AI made it feel accessible), “blurred boundaries” (prompting AI during lunch or before leaving the desk “so the AI could work while they stepped away”), and “compulsive multitasking” dressed up as momentum.

The result was a self-reinforcing cycle: “AI accelerated certain tasks, which raised expectations for speed; higher speed made workers more reliant on AI. Increased reliance widened the scope of what workers attempted, and a wider scope further expanded the quantity and density of work.” TechCrunch’s Connie Loizos framed the finding like this: “The industry bet that helping people do more would be the answer to everything, but it may turn out to be the beginning of a different problem entirely.”

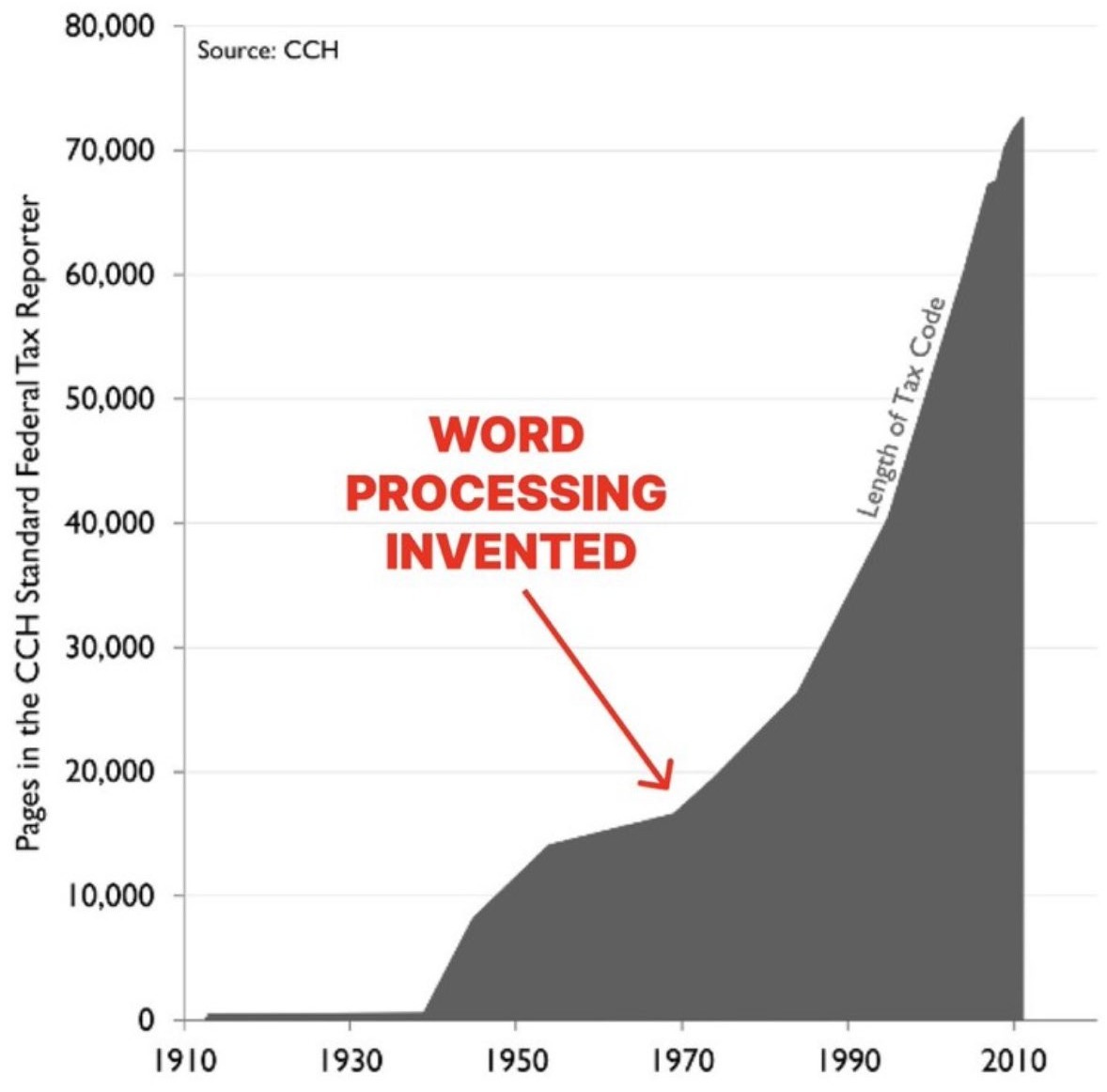

You might recall the finding that word processors didn’t make writing tax code more efficient but instead increased the length of the documents. This is the exact same thing, a corollary of Parkinson’s law: work expands to fill the time available, and if you manage to make yourself more efficient, you will find something else to do. The questions left to be answered are: 1) Does total productivity increase? 2) Is advanced technology at odds with constraint-enabled creativity?

Separately, engineer Siddhant Khare published a personal account of AI fatigue that reads like the individual-level version of the same phenomenon. Thankfully, he provides actionable solutions.

Khare, who builds AI agent infrastructure professionally, describes “hitting a wall” (lmao, for those of you who get the reference) despite—or because of—shipping more code than ever. He says that “AI reduces the cost of production but increases the cost of coordination, review, and decision-making. And those costs fall entirely on the human.” When you stop doing the “how” tasks, you realize that the “what” tasks are not easy. He went from being a creator to being “a quality inspector on an assembly line that never stops.”

His fix is boundaries: time-boxed sessions (he doesn’t use AI forever but sets a timer and then writes it himself), a three-prompt rule (if AI doesn’t get to 70% usable in three attempts, he writes it himself), and deliberate morning hours without AI to keep his own reasoning sharp. Perhaps a bit constraining, but definitely a good starting point! His approach to this is personal, but the underlying principle is universal: