Weekly Top Picks #112: Besides Moltbook

Can AI be profitable? / China is ahead is behind / Zero privacy / A new era of AI research / Anthropic's conundrum / The useful power of power users

👋 Hey there, I’m Alberto!

Each week, I publish long-form AI analysis covering culture, philosophy, and business for The Algorithmic Bridge.

Free essays weekly. Paid subscribers get Monday news commentary and Friday how-to guides. You can support my work by sharing and subscribing.

THE WEEK IN AI AT A GLANCE

Money & Power: Frontier models are “rapidly depreciating infrastructure,” and OpenAI is retiring them faster than it can recoup costs.

Geopolitics: One year after DeepSeek’s “Sputnik moment,” who’s winning between the US and China depends on which gap you measure.

Rules & Regulations: When you talk to ChatGPT, someone is always watching.

Work & Workers: Not much happened this week.

Products & Capabilities: The last word hasn’t been said in AI yet: we are in a “new era of research.”

Trust & Safety: “Anthropic is at war with itself.” What do you do when you want the AI race to stop, and that pushes you to run faster?

Culture & Society: The Bernoulli distribution of AI belief: This will be the real cultural story of AI in 2026.

THE WEEK IN THE ALGORITHMIC BRIDGE

(PAID) Weekly Top Picks #111: Davos Edition: All things Davos.

(FREE) Not With a Bang But a Wanker: History rhymes because the constant is not the technology but us. This essay is about Grok’s “put a bikini on her” drama.

(PAID) How to Humanize Your AI Writing in 10 Steps: A guide to humanize AI writing. Each step includes a name for the mistake, an explanation, a prompt to fix it (when applicable), and an example scene with a changelog (plus a PDF!)

(FREE) LEAKED: The Truth Behind Moltbook, Revealed: This one is a leaked document from the m/humanwatching submolt that reveals the truth about Moltbook, the AI agent social network. Authorship unknown. Discretion advised.

MONEY & POWER

The business of intelligence is not (yet) the business of profit

Are AI companies profitable? The usual answer is a resounding “no.” But it actually depends on what you mean by “profitable.” Dario Amodei and Sam Altman—there might be some conflict of interest here, but hear me out—say that each model generates enough revenue to “cover its own R&D costs.” However, that surplus gets swallowed by the cost of building the next model. That’s why they look unprofitable.

AI research firm Epoch AI dug into it. Their case study: the “GPT-5 bundle” (everything OpenAI ran from August to December 2025). Gross margins on running the models over that period were ~48%, lower than typical software (60-80%), but not that bad. Then you add other costs (staff, marketing, etc.) and the margin drops to -11%. If this is representative, it means that running AI models is only a mildly loss-making business rather than the disastrous enterprise it’s often portrayed to be.

However, this is tricky to measure because the cost of making the model (R&D) belongs to the previous period, i.e., the costs of one model are imposed on the revenue of the previous one! (That’s why Altman and Amodei are so calm about this.) This is good news as long as new models (that make more money) keep coming and so does the investment, at least until net profitability is reached.

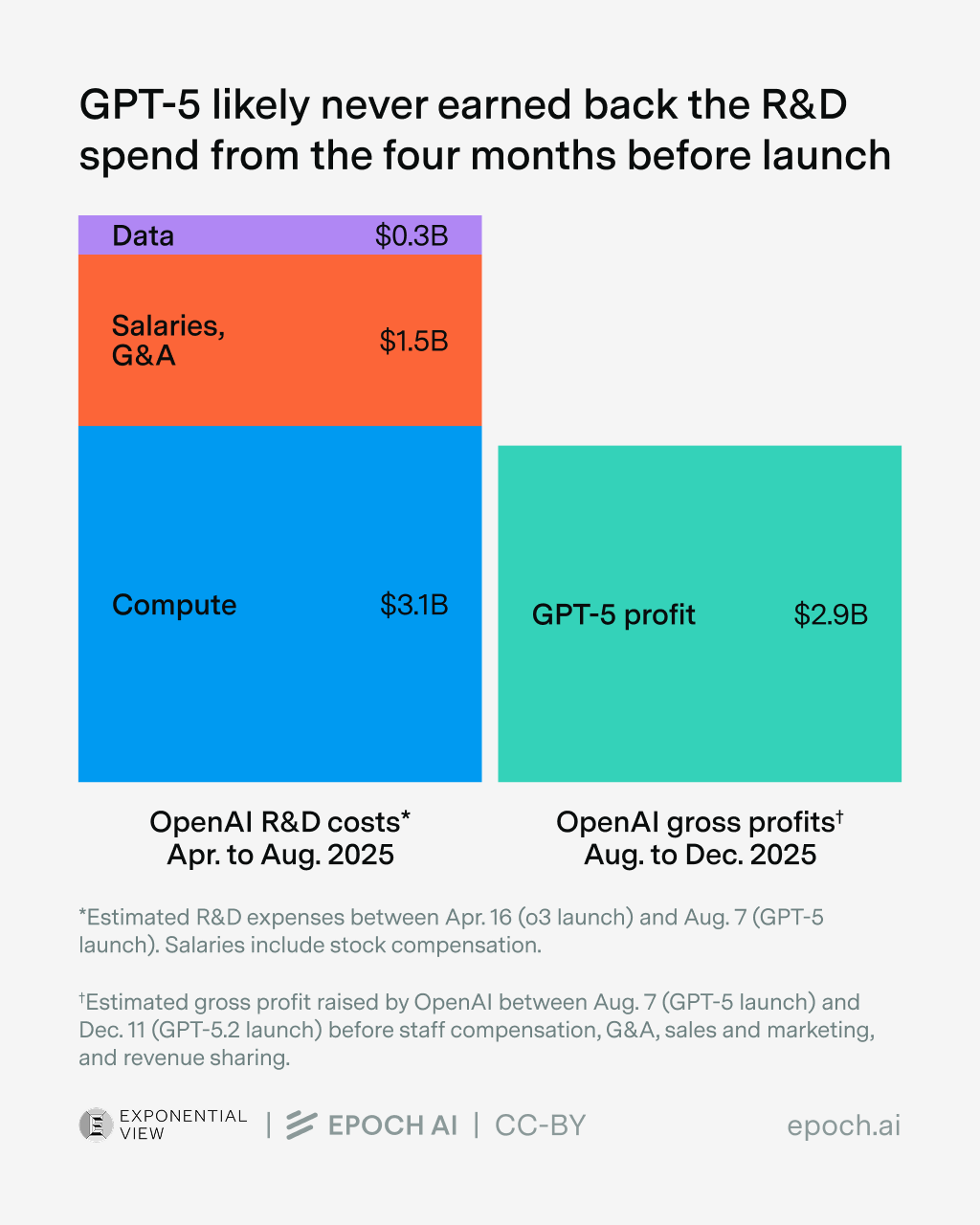

But there’s something else. OpenAI spent roughly $5 billion on R&D in the four months before GPT-5 launched. The model made about $3 billion in gross profit during its four-month run. Then Gemini 3 Pro and Claude 4.5 surpassed it, GPT-5.1/5.2 replaced it, and the cycle restarted. So, at least for GPT-5, R&D costs > gross profit.
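
For the spreadsheet-inclined, here is that comparison as a quick back-of-the-envelope sketch. It uses only the two rounded figures quoted above; the variable names are mine, and this is not Epoch AI's methodology, just the subtraction spelled out.

```python
# Back-of-the-envelope check using the rounded figures quoted above.
# Variable names are illustrative; this is not Epoch AI's methodology.
rd_cost_bn = 5.0       # approx. R&D spend in the four months before launch ($B)
gross_profit_bn = 3.0  # approx. gross profit over the four-month run ($B)

shortfall_bn = rd_cost_bn - gross_profit_bn   # R&D not covered by gross profit
recoup_ratio = gross_profit_bn / rd_cost_bn   # share of R&D earned back

print(f"Shortfall: ${shortfall_bn:.1f}B")
print(f"Share of R&D recouped: {recoup_ratio:.0%}")
```

On these numbers, the model earned back a bit more than half of what it cost to develop before it was replaced.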

The value of an AI model must be extracted before competitors, or new versions, render it obsolete. Basically, OpenAI is retiring models faster than it can recoup costs. This is Epoch’s main finding: frontier models are “rapidly-depreciating infrastructure.” (It’s not just the chips, but also the models.)

One last thing before moving on to the next section: What's going on with the Nvidia-OpenAI deal?

In September, Nvidia and OpenAI announced a strategic partnership: up to $100 billion, 10 gigawatts of infrastructure, and a signed letter of intent. But on Friday, the Wall Street Journal reported it had “stalled.”

The Journal reports that Huang has been privately emphasizing that the agreement was non-binding, criticizing OpenAI’s business discipline (ads!), and expressing concern about Anthropic and Google. The companies are now discussing an equity investment of “tens of billions” instead.

Later, Huang called the report “nonsense,” insisting Nvidia would “definitely participate” in OpenAI’s funding round, potentially its largest investment ever.

But, crucially, he didn’t deny the deal had changed shape. The truth probably lies between what the WSJ reported and what Huang denied; both things can be true: the infrastructure megadeal appears dead, even though a large equity bet is still on.

And then…

… Reuters reports that “OpenAI is unsatisfied with some of Nvidia's latest artificial intelligence chips, and it has sought alternatives since last year, eight sources familiar with the matter said, potentially complicating the relationship between the two highest-profile players in the AI boom.”

To which Altman responded: “We love working with NVIDIA and they make the best AI chips in the world. We hope to be a gigantic customer for a very long time. I don't get where all this insanity is coming from.”

I really don't know what's going on!

Sources: Epoch AI, WSJ, Reuters, Bloomberg

GEOPOLITICS

One year after DeepSeek’s “Sputnik moment”

It’s been one year since DeepSeek dropped R1 and sent Nvidia’s stock into freefall (those who deemed this unreasonable by virtue of the Jevons paradox have been vindicated). The consensus at the time: China was closer than anyone thought (correct, because at the time, people didn’t think it was close at all).

The consensus now is harder to pin down, however, because the evidence points in multiple directions at once.

On the one hand, China is winning where it matters for the long game. Microsoft research shows DeepSeek has captured 18% market share in Ethiopia, 17% in Zimbabwe, 56% in Belarus, and 49% in Cuba. Brad Smith, Microsoft’s president, warned the FT that US AI groups are “being outpaced by Chinese rivals” outside the West, thanks to open-source models and state subsidies that let them undercut on price (where’s Meta when we need them!). “If American tech companies or western governments were to close their eyes to the future in Africa,” Smith said, “they would be closing their eyes to the future of the world. . . . that would be a grave mistake.”

DeepSeek itself is still publishing (you don’t hear from them because the moment of over-hype passed, but, believe it or not, they are still at work). A January paper introduced a new training architecture, “Manifold-Constrained Hyper-Connections,” that analysts called a “striking breakthrough” for scaling models efficiently. The company can “once again, bypass compute bottlenecks and unlock leaps in intelligence,” one researcher told Business Insider. A new flagship model, DeepSeek-V4, is expected within weeks.

On the other hand, elite Chinese researchers are quietly pessimistic.