The Trillion-Dollar AI Bet

There are bubbles of excess and bubbles of pure betting; some bubbles are both

LONDON, England—The year is 1845, and coal smoke drifts through the narrow streets, clinging to wool coats and horsehair. It blackens the windows of busy dockyards and distilleries, and settles on the newly laid railway tracks. The OpenRailway Company has just signed a contract with Parliament worth £4 million. Pioneer of a new type of track that requires half the number of human workers per mile—a rate that falls further the longer the line—the OpenRailway Company promises speed, efficiency, and lower costs. There’s only one caveat, which they call “stochasticity,” a harmless irregularity that may result in modest diversions, gentle delays, or the occasional creative reinterpretation of a passenger’s intended destination. Surely a minor inconvenience to pay in exchange for a bright future!

That, of course, didn’t happen. But picturing a stochastic railway company is a good way to reframe what I can only describe as the biggest news this year coming from the AI industry: OpenAI has struck a five-year $300 billion cloud computing agreement with Oracle (starting 2027) and sealed a partnership under which Nvidia will invest up to $100 billion in its AI infrastructure. The whole tech sector is betting on the economic value of AI using datacenter build-up as a proxy. Fair; sensible, even (the markets loved it!). But the markets can be wrong, especially ahead of tipping-point events: those numbers suggest that the AI frenzy has reached its peak in 2025, just like the railroad mania did in 1845.

However, comparing them directly is rather unfair, for there’s a subtle difference between the two. The railroad mania made intuitive sense because railroads are a technology whose contribution to society is clear (as it was in 1845): you go faster, you arrive sooner. The mania wasn’t so much a bet as it was an excess; companies like the Great Western Railway, the South Eastern Railway, and even the Leeds and Thirsk (which bored a three-mile tunnel through solid rock to reach a modest market town) built railroads from everywhere and to nowhere, yet no one doubted, not even at the time, that trains would get you to your destination. If trains had inadvertently changed direction or reinvented the route on the fly, then “Sorry, I misunderstood your request, let’s try again” would not have cut it, and we wouldn’t have an interconnected world today.

It’s not hard to understand excess bubbles like the railway mania in the mid-1800s, or the canal mania in the late 1700s, or the tulip mania in the 1630s (the speculation is about scale: “We may not need as much of X, but someone will get richer”). It is harder to understand betting bubbles (the speculation is about viability: “X may or may not work, so let’s gamble”). AI is both.

The excess part is pretty obvious when you look at those figures: $300 billion and $100 billion. (Let’s ignore for now that OpenAI doesn’t have the money to pay for Oracle’s 4.5 gigawatts of datacenter capacity, which is, in itself, another bet: on users paying for ChatGPT for a few more years; or perhaps a heads-up about a future ad-based business model.) I’ve written at length about the excesses of the AI industry: “. . . Big Tech keeps erecting these barely manned giant buildings, which inflate total GDP numbers while concealing the lack of AI-related productivity gains that should be reflected in the GDP of the entire rest of the US economy.” Indeed, only a few companies are growing in the US (the S&P 500 conceals the absence of growth outside of tech unless you look at the equal-weighted version), and all of them are piling onto the same bet: that AI will pay out.

I wrote that a month ago, when the entire tech sector’s $100 billion spend on datacenters in the span of three months was considered an exorbitant sum. Today, OpenAI is signing deals worth half a trillion dollars like it’s nothing.

Let’s run some numbers. The Information reported on August 1st that OpenAI hit $12.7 billion in annualized recurring revenue (ARR). Nick Turley, OpenAI VP, reported on August 4th that ChatGPT reached 700 million weekly active users. If we assume the ratio of paid to free users remains constant, then even if the entire human population (8.2 billion people) used ChatGPT every week, OpenAI would be making “only” about $149 billion in ARR, which, given the increased costs of serving so many people, is still insufficient to pay Oracle. Needless to say, it’s rather unlikely that every single living human will be using ChatGPT anytime soon. So OpenAI has four options besides passive growth:

1. Increase the paid-to-free ratio by further hyping AI or, alternatively, by making the technology better (e.g., more reliable and less hallucinatory).

2. Raise the price of existing products so that the same number of paid subs provides more revenue (assuming they won’t stop paying altogether).

3. Expand the offering with exclusive products for the high-paying tiers (this is happening soon, per CEO Sam Altman), or add new tiers for those products.

4. Change the business model (e.g., adding ads to ChatGPT messages, just like that Black Mirror episode) to complement the subscription revenue.
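
For anyone who wants to check the back-of-the-envelope estimate that precedes these options, here is a minimal sketch in Python. It uses only the three figures quoted above; the function and variable names are mine, and the linear scaling is exactly the simplifying assumption stated in the text (constant paid-to-free ratio, constant prices), not a forecast.

```python
# Figures quoted in the text above.
ARR_USD = 12.7e9           # annualized recurring revenue (The Information, Aug 1)
WEEKLY_USERS = 700e6       # weekly active users (Nick Turley, Aug 4)
WORLD_POPULATION = 8.2e9   # population figure used in the text


def revenue_per_weekly_user(arr_usd: float, users: float) -> float:
    """Average yearly revenue per weekly active user, paid and free combined."""
    return arr_usd / users


def extrapolated_arr(arr_usd: float, users: float, target_users: float) -> float:
    """Scale ARR linearly with users.

    Assumption: the paid-to-free ratio and the prices stay constant.
    """
    return revenue_per_weekly_user(arr_usd, users) * target_users


per_user = revenue_per_weekly_user(ARR_USD, WEEKLY_USERS)
ceiling = extrapolated_arr(ARR_USD, WEEKLY_USERS, WORLD_POPULATION)

print(f"Revenue per weekly user: ${per_user:.2f} per year")
print(f"ARR if everyone used ChatGPT weekly: ${ceiling / 1e9:.1f} billion")
```

Run as written, this prints about $18.14 per weekly user per year and a ceiling of roughly $148.8 billion. Each of the four options is a way of pushing that per-user figure up rather than waiting for the user count to do the work.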

And that’s just OpenAI. Bloomberg reported yesterday that consulting firm Bain & Co. estimates that, by 2030, the AI industry will need $2 trillion in revenue to “fund the computing power needed to meet projected demand,” and that it will fall short by $800 billion, as “efforts to monetize services like ChatGPT trail the spending requirements for data centers and related infrastructure.” Beating this prediction will be a huge challenge for OpenAI and the industry at large, but not an impossible one.
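
To put the Bain figures in proportion, here is a small sketch of what the gap implies. The only inputs are the two numbers quoted above; the "implied revenue" is my own subtraction, on the assumption that the shortfall is measured against the $2 trillion requirement, and is not a figure attributed to Bain.

```python
# Figures from the Bain & Co. estimate quoted above, in billions of USD.
REQUIRED_REVENUE_2030 = 2_000
PROJECTED_SHORTFALL = 800

# Assumption: "fall short by $800 billion" means projected revenue equals the
# required figure minus the shortfall. This derived number is not Bain's own.
implied_revenue = REQUIRED_REVENUE_2030 - PROJECTED_SHORTFALL
gap_share = PROJECTED_SHORTFALL / REQUIRED_REVENUE_2030
uplift_needed = REQUIRED_REVENUE_2030 / implied_revenue - 1

print(f"Implied 2030 revenue: ${implied_revenue:,} billion")
print(f"Share of the requirement left unfunded: {gap_share:.0%}")
print(f"Extra revenue needed over the implied figure: {uplift_needed:.0%}")
```

Under that reading, the industry would be on track for about $1.2 trillion, leaving 40% of the requirement unfunded, and would need roughly two-thirds more revenue than projected to close the gap.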

There’s an argument against the excesses of the AI industry doing the rounds on social media: these deals are little more than circular financing, i.e., Nvidia invests in OpenAI so that OpenAI can buy more Nvidia chips. But this argument is, at best, a funny meme to mock them. Nvidia investing $100 billion in OpenAI in exchange for equity makes sense for both; part of that money will go back to Nvidia, but part of it won’t; the value OpenAI adds in the form of datacenter infrastructure is not negligible. Calling them out on the grounds of circular financing would be like calling out a construction company that accepts an investment from IKEA because it’s going to build houses that will host IKEA furniture. Yes, IKEA is benefiting twice (equity and sales), but furniture without a house to host it is basically useless; a GPU rack without a modern datacenter is, at best, for video games and, at worst, for mining crypto.

The “bet side” of the AI bubble is without precedent and, in my opinion, more interesting than the “excess side.” Let’s see what that’s about and why I think it will define how history books write about this period, in contrast to past manias.

AI is often described as a ‘general-purpose technology,’ meaning a kind of meta-technology; the kind that creates new technologies or improves existing ones. It’s up to the user to figure out how it works and what it’s for. (We’ve come to think of the chatbot interface and the smartphone form-factor as the only possibilities, but AI could be built into almost any design or device.) AI labs market this so-called “generality” as a virtue—as unbounded scope and infinite potential—but they fail to notice that humans hate the uncertainty that comes with the infinite. It’s great, yes, but also intangible, abstract, nebulous. I imagine people prefer a train that’s clearly a train rather than an all-in-one vehicle that’s a plane, a train, and a boat, and sometimes all of those at once.

But what we’d absolutely loathe is a train that sometimes—unprompted, without knowing a priori when or how—fails to be a train. AI is the ultimate multi-tool and an unreliable one at that, so its success is, in every sense, a bet. A bet for the consumers using it, for the AI companies building it, for the businesses buying it, and a bet for the entire economy.

The Financial Times published a report yesterday analyzing “hundreds of corporate filings and executive transcripts at S&P 500 companies last year.” They put it starkly in an article titled “America’s top companies keep talking about AI—but can’t explain the upsides.” (The FT has a “strategic partnership” with OpenAI, so it’s not incentivized to criticize the industry as much as it is to defend the bet as potentially positive.) It’s not all bad; the authors found novel uses and differentiating value for several industries (e.g., customer service, military suppliers, and manufacturers), but also that risks—cybersecurity, deployment failure, and unreliability—are much clearer than benefits, and that, for companies outside Big Tech, SEC filings rarely reflect the optimism apparent in earnings calls. They are hedging their bet and grappling with how to translate AI’s uncertainty into productivity or revenue gains.

The happiest companies? Those who stand to benefit directly from the datacenter frenzy. Remember: the OpenRailway Company may build lines that stochastically send unwitting passengers to a distant terminus on the opposite coast of Britain, but insofar as they keep buying steel for the rails, coal for the engines, timber for the sleepers, and gravel for the ballast, the enterprise rolls on, and suppliers will remain enthusiastic about the promise of a new empire.

The bet is not just of a technical nature, though (“Does AI work?”), but multifaceted. I find it useful to frame the question of whether AI will pay out in terms of the frictions it encounters as investment grows and adoption surges. I explored this topic in “Even God Can’t Skip the Bureaucrats.” I argued that “there is unassailable friction between superintelligence and revolution. When you solve intelligence, the bottleneck for effective change doesn’t disappear; it moves somewhere else, and frictions that were less important before become significant.” It was a critique of the AI 2027 essay co-authored by former OpenAI researcher Daniel Kokotajlo and blogger Scott Alexander, among others.

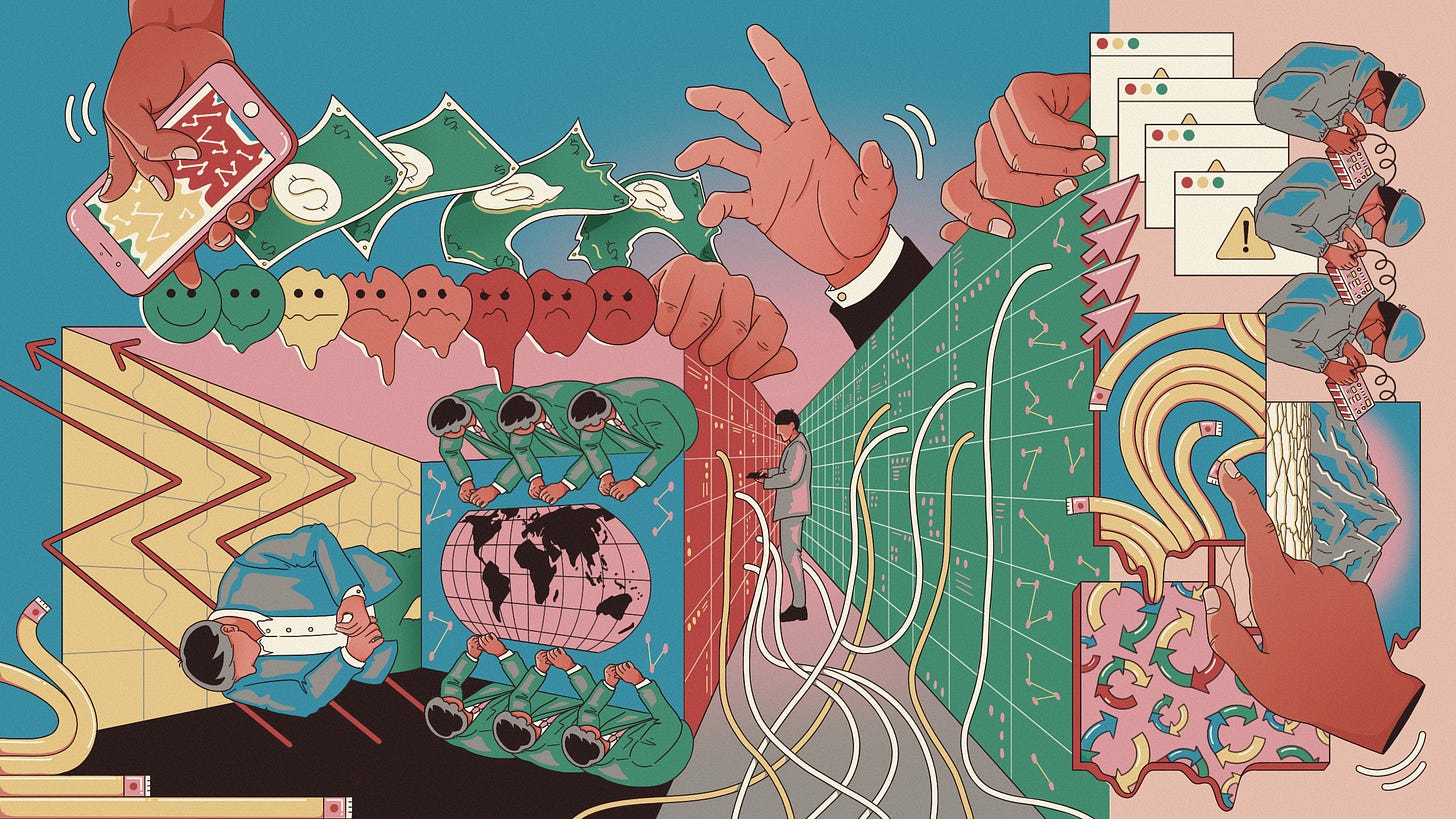

I collected a non-exhaustive list of frictions, which I’ll reframe as the faces of the bet, for they all stem from the fact that it’s not clear how this technology will contribute to society. With that in mind, let’s see how AI is a political, sociocultural, geopolitical, and economic bet. No wonder everyone sees something different when they look at it; no wonder everyone is, by all accounts, uneasy about the imminent future.