Grok 3: Another Win For The Bitter Lesson

Congratulations to the xAI team—and the advocates of the scaling laws

I. The scaling laws govern AI progress

For once, it seems Elon Musk wasn’t exaggerating when he called Grok 3 the “smartest AI on Earth.” Grok 3 is a massive leap forward compared to Grok 2. (You can watch the full presentation here.)

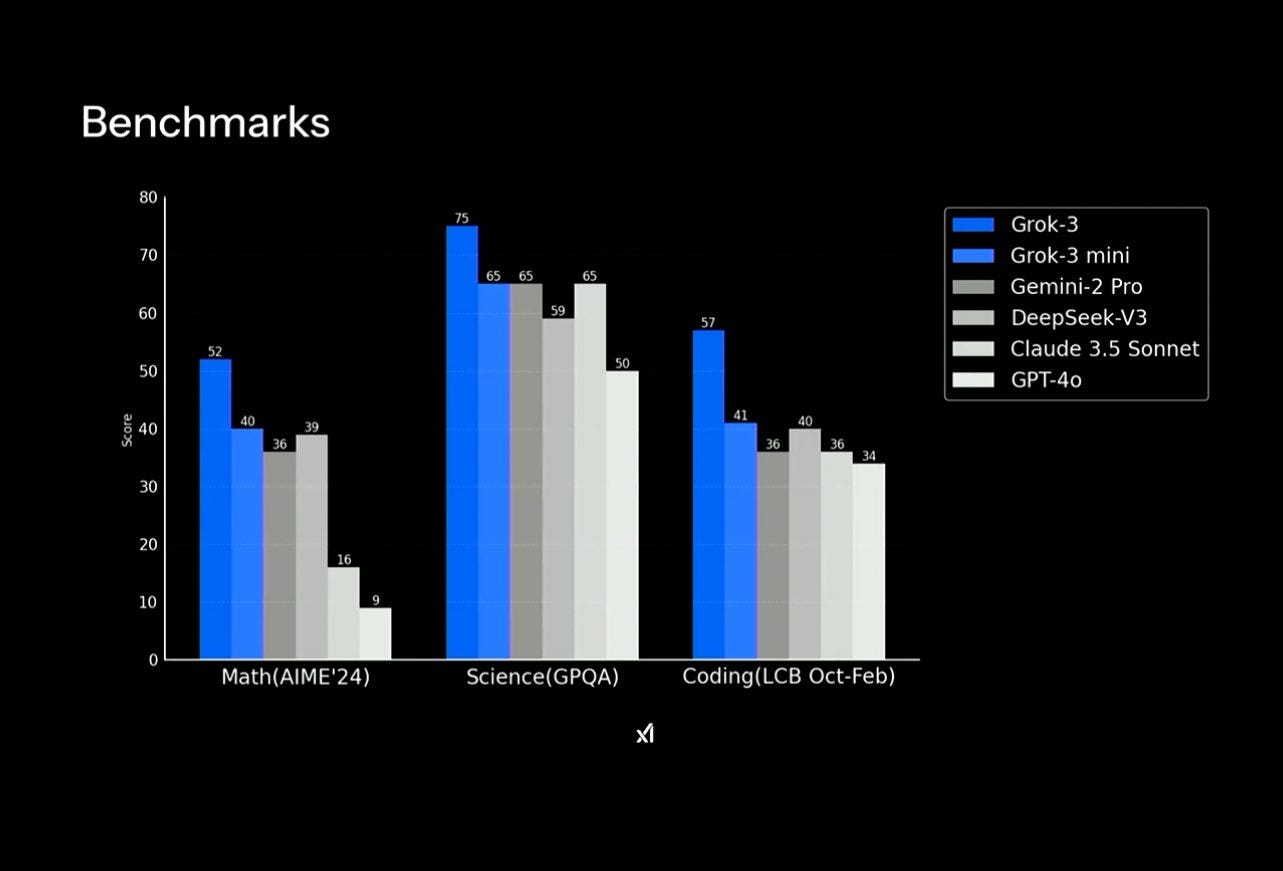

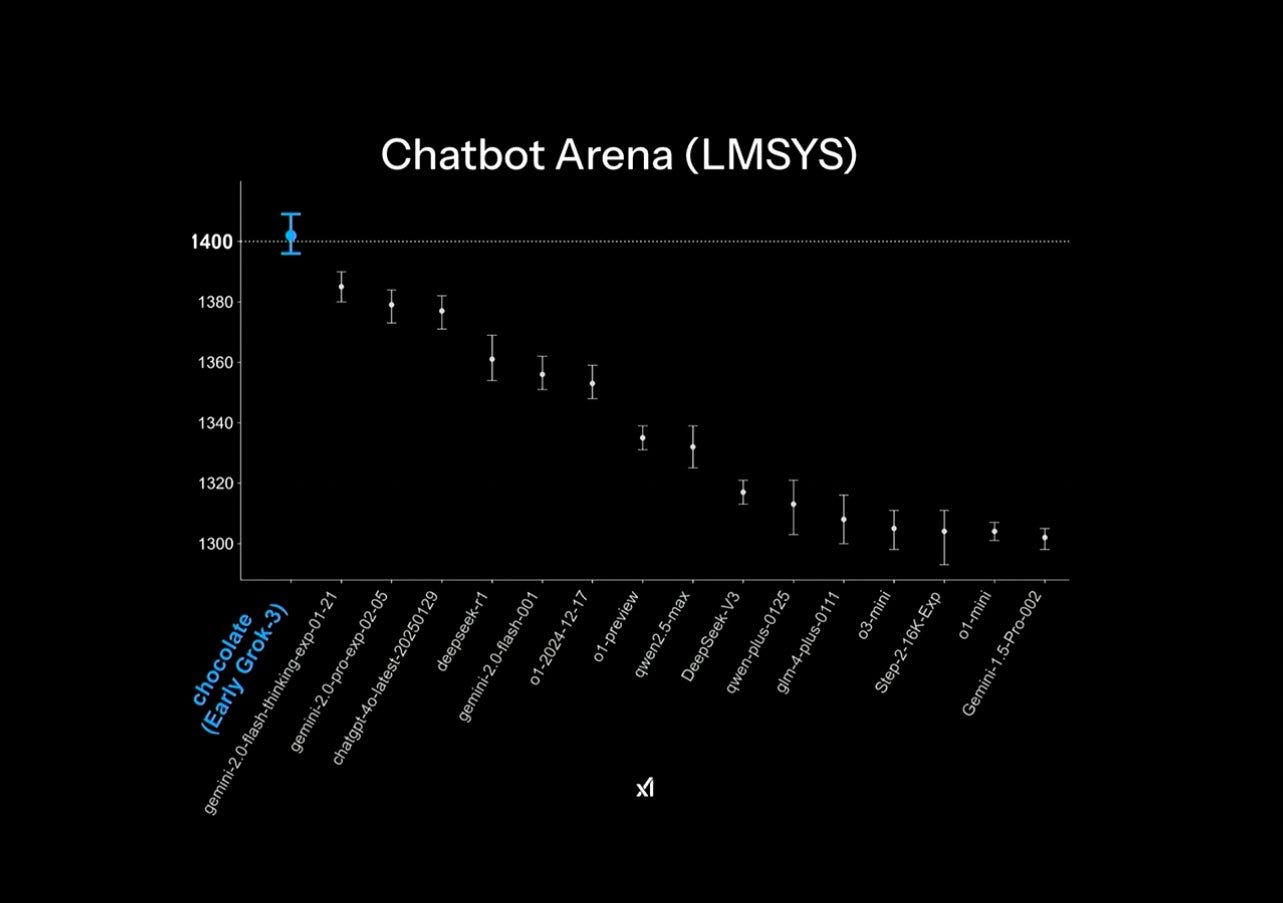

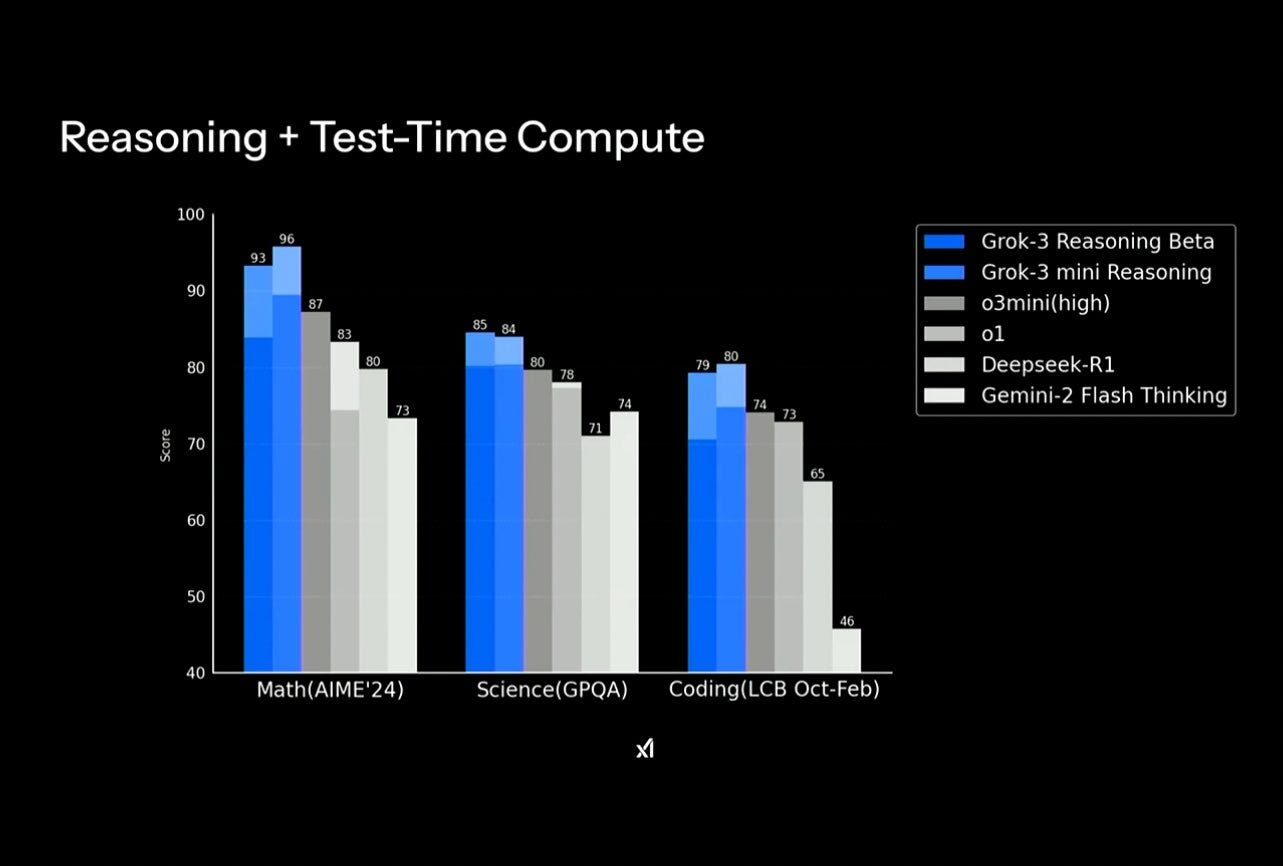

Grok 3 performs at a level comparable to, and in some cases even exceeding, models from more mature labs like OpenAI, Google DeepMind, and Anthropic. It tops all categories in the LMSys arena and the reasoning version shows strong results—o3-level—in math, coding, and science questions (according to benchmarks). By most measures, it is, at the very least, co-state-of-the-art (not at every task, though).

In one word, Grok 3 is great.

But more than just a win for xAI, Grok 3 represents yet another victory for the Bitter Lesson. Perhaps the clearest one so far. Contrary to what the press and critics keep saying, the scaling laws still govern AI progress—more than ever before.

II. DeepSeek: The exception that proves the rule

This article is not about DeepSeek, but I want to revisit a valuable insight to support my argument.

DeepSeek managed to compete with top players despite having a moderate compute disadvantage (50K Nvidia Hoppers vs 100K+ Nvidia H100s for US labs). To compensate, they had to optimize across the entire stack, showing impressive engineering prowess. They succeeded, turning the startup into an exception that seemed to prove the community’s trust in both the Bitter Lesson and the scaling paradigm was misplaced.

That is, at least, what skeptics argued: DeepSeek, with its “tiny” GPU cluster and cheap training runs, built a model at the level of OpenAI o1. To them, this was strong evidence that hand-crafted features, human ingenuity, and algorithmic improvements were more important than using giant clusters to train AI models. It even meant that you should sell all your Nvidia stock because GPUs were not that important.

However, this conclusion emerges from a mistaken understanding of the Bitter Lesson (which isn’t a law of nature but an empirical observation): It doesn’t say that algorithmic improvements don’t work. It very much praises them. What it says is that, if you can choose, scaling learning and search algorithms with more computing power is the better choice over applying heuristic solutions, however clever.

DeepSeek achieved good results doing the latter because they had no option. Had they trained on a 100K GPU cluster like xAI did for Grok 3, they would have obtained much better results. DeepSeek proved that further optimization is possible, not that scaling is useless. Those two claims are independent of each other.

Indeed, DeepSeek’s CEO, Liang Wenfeng, acknowledged that US export controls are their main bottleneck to making better models. The fact that he said this despite having access to ~50K Hoppers reveals the opposite of “GPUs don’t matter.” While DeepSeek relied on clever optimizations, they still needed scale. DeepSeek’s own CEO said it, so why should anyone outside the company think they know better?

DeepSeek’s unprecedented success supports the Bitter Lesson and the scaling paradigm, even if to some degree as an exception to the rule.

III. xAI is proof that scaling > optimization

Back to Grok 3.

I wonder if xAI’s achievement will make skeptics rethink their criticisms of scale. We don’t know whether they changed the architecture or how much they optimized the infrastructure to make Grok 3, that’s true. What we do know is they trained the model on the 100K H100 Colossus supercomputer that xAI built in Memphis, Tennessee. That’s a lot of GPUs. That’s many more GPUs than DeepSeek has.

(Probably both used just a fraction of the total compute available, but it’s a reasonable assumption that having 2x more GPUs allows you to train your models with ~2x more GPUs, whether you’re using all of them or not.)

Unlike DeepSeek, they didn’t need to optimize the infrastructure beyond reasonable standards (so, not touching the CUDA kernels or applying unproven algorithmic tricks). They surely did some optimization, but I highly doubt they went nearly that far. The Bitter Lesson says that if you have the compute it’s better to use it before you fiddle like a scrappy GPU-poor; scaling a bit further eventually brings more benefits than doing some hand-crafting (this cryptic tweet by Musk can mean many things). And remember, xAI is scale-pilled.

So that’s probably what they did: They threw more compute at Grok 3 than even OpenAI could, and the result is a state-of-the-art model.

I want to make a quick digression here because I’m tired of referencing the Bitter Lesson as if it were an AI-exclusive insight. No, it is a fundamental truth. If you have more of the primary resource, you don’t waste time squeezing another drop out of a secondary resource; you fill a whole glass from the waterfall. Would you rather be like Dune’s Fremen, recycling sweat and wringing moisture from the dead with clever but desperate devices, or live on a planet where it rains?

Improving algorithms and increasing the compute you run them on are both valuable approaches, but if the marginal gains you get suddenly shrink relative to the effort you’re putting in, you’re better off changing your variable of focus rather than being stubborn. And because computing power is mostly available as long as you have money (for now), whereas valid algorithmic tricks require rare Eureka moments that may work today but not tomorrow (and perhaps never scale), you’re almost always better off focusing on growing your compute.

If you “find a wall,” then you just change the thing you scale, but you don’t. stop. scaling.

Constraints do fuel innovation—and struggle builds character; I’m sure DeepSeek’s team is absolutely cracked—but in the end, having more beats doing the same with less. This is an unfair world and I’m sorry.

I wonder if DeepSeek likes being in the position they are in or if they would gladly trade places with xAI or OpenAI. Or, conversely, do you think OpenAI and xAI would ditch all their GPUs so they can innovate through their constraints like DeepSeek did?

In a way, both being latecomers, xAI and DeepSeek represent opposite approaches to the same challenge: brute-force scale vs squeezing the most out of limited resources. Both did great with what they had. But, undoubtedly, xAI is better off and will be in the coming months (as long as DeepSeek remains compute-bottlenecked). That’s thanks to their adherence to a law that, albeit empirical and heavily contested by academia, has proven valuable in the arena for more than a decade.

IV. The shift that helped xAI *and* DeepSeek

For a while, a late start seemed like an insurmountable burden in the AI race. When I first assessed their chances, I wasn’t sure xAI could catch up to OpenAI and Anthropic (I said as much in the very last sentence of this article).

But between Grok 2 (Aug. 2024) and Grok 3 (Feb. 2025), something—besides the Colossus GPU cluster—helped xAI’s chances: the dominant scaling paradigm shifted.

The pre-training era (2019-2024): Initially, scaling meant building ever-larger models trained on massive datasets and massive computers: GPT-2 (Feb. 2019) had 1.5 billion parameters whereas GPT-4 (Mar. 2023) is estimated at 1.76 trillion. That’s three orders of magnitude larger. This approach naturally favored early movers like OpenAI, who had a multi-year head start in collecting training data, growing models, and buying GPUs. Even without that advantage, if each new model took ~half a year to train—their size slowed down iteration speed between generations—OpenAI would always be at least that far ahead of xAI.
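The "three orders of magnitude" figure can be checked with quick arithmetic. The sketch below uses only the two parameter counts quoted above; the GPT-4 number is an unconfirmed estimate, so the result is approximate at best.

```python
import math

# Parameter counts as quoted in the paragraph above.
gpt2_params = 1.5e9     # GPT-2 (Feb. 2019)
gpt4_params = 1.76e12   # GPT-4 (Mar. 2023), an estimate, not an official figure

ratio = gpt4_params / gpt2_params
orders_of_magnitude = math.log10(ratio)

print(f"GPT-4 / GPT-2 parameter ratio: ~{ratio:,.0f}x")
print(f"Orders of magnitude: ~{orders_of_magnitude:.2f}")
```

The ratio comes out a little above 1,000x, which is where the "three orders of magnitude" comes from.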

The post-training era (2024-???): The game changed when companies realized that simply making models larger was yielding diminishing returns (the press was quick to misreport this as “scale is over” so I urge you to watch this talk by Ilya Sutskever at NeurIPS 2024 in December). Instead, the focus became scaling test-time compute (i.e., allowing models to use compute to think answers through), spearheaded by OpenAI with o1-preview. Reinforcement learning combined with supervised fine-tuning proved to be highly effective—especially in structured domains like math and coding, where well-defined, verifiable reward functions exist.
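To make "verifiable reward function" concrete, here is a minimal sketch of what one could look like for math questions. It is an illustration only, not how any lab actually implements it: the function names and the `Answer:` output convention are hypothetical assumptions.

```python
import re
from fractions import Fraction
from typing import Optional


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the text after the last 'Answer:' marker (hypothetical format)."""
    matches = re.findall(r"Answer:\s*(.+)", completion)
    return matches[-1].strip() if matches else None


def verifiable_reward(completion: str, reference: str) -> float:
    """Return 1.0 if the final answer matches the reference, else 0.0."""
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0  # no final answer given, so no reward
    try:
        # Compare numerically so that "1/2" and "0.5" count as the same answer.
        return 1.0 if Fraction(answer) == Fraction(reference) else 0.0
    except (ValueError, ZeroDivisionError):
        # Non-numeric answers fall back to an exact string comparison.
        return 1.0 if answer == reference.strip() else 0.0


print(verifiable_reward("3/6 simplifies.\nAnswer: 1/2", "0.5"))
print(verifiable_reward("Answer: 7", "8"))
```

The point is that the reward depends only on whether the final answer checks out, not on a human judging the reasoning. That is what makes math and coding convenient domains for reinforcement learning.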

This paradigm shift meant that scaling post-training became as important as, if not more important than, scaling pre-training. AI companies “stopped” making larger models and began to make them better thinkers. This happened last year. Right when DeepSeek and xAI were building their brand-new models. A happy coincidence.

Importantly, because post-training is still in its early days, rapid improvements can be achieved cheaply compared to pre-training. That’s how OpenAI jumped from o1 to o3 in just three months. It’s how DeepSeek caught up with R1 despite owning fewer and worse GPUs. It’s how Grok has reached a top-tier level in just two years.

OpenAI still has a moderate advantage, but it is no longer the kind of lead that made catching up impossible. And while Sam Altman has to balance cutting-edge research with the demands of running ChatGPT—a product used by 300 million people weekly—xAI and DeepSeek have more flexibility to focus on breakthroughs (DeepSeek’s app spiked in popularity but went back down because the company doesn’t have enough compute to serve inference to so many users).

A new paradigm; a new rivalry.

V. Putting xAI and DeepSeek wins into context

Acknowledging the Bitter Lesson and this scaling paradigm shift takes nothing away from either company’s achievements. They had it easier but they had to do it anyway. Others tried and failed (e.g. Mistral, Character, Inflection). As I said, Grok 3 is primarily a win for the Bitter Lesson and DeepSeek is primarily an exception that proves the rule, but let’s not reduce them to just that.

Compute alone, or the lack thereof, isn’t everything. Just like the Bitter Lesson doesn’t deny the value of improving algorithms and infrastructure, we shouldn’t ignore the fact that xAI has an outstanding team, now around 1,000 employees, on par with OpenAI (~2,000) and Anthropic (~700). There’s also Elon Musk’s deep connections in tech and finance, which give xAI a massive fundraising advantage. The same goes for DeepSeek, which deserves all the praise it got for the way it navigated its constraints and emerged victorious in a local ecosystem lacking ambition, experienced talent, and government support (that might change soon).

But recognizing the victory is just as important as putting it into context.

OpenAI, Google DeepMind, and Anthropic built their models when scaling was harder, slower, and more expensive (pre-training era). No one knew whether something like ChatGPT would work as well as it did (OpenAI almost didn’t launch it and when it did, it was touted as a “low-key research preview”). These startups acted as bold trailblazers moved by an unwavering conviction. Their role, although now slightly overshadowed by the opposition in press headlines, will be recorded in history books.

DeepSeek and xAI, in contrast, stood on the shoulders of those giants, leveraging the lessons painstakingly learned from their early efforts, and benefiting from the sheer luck of building their models at a moment when a paradigm shift made faster, more cost-effective progress possible (post-training era). They didn’t have to navigate as many missteps or endure a massive upfront investment with uncertain returns.

So let’s not downplay their wins but let’s also not forget how we got here.

VI. Post-training is cheap but will be expensive

There’s a final key takeaway from Grok 3 and xAI.

Once companies figure out how to scale post-training to the same investment levels as pre-training—it will happen, they’re stockpiling hundreds of thousands of GPUs and building giant clusters, to the bewildered lamentation of the “GPUs don’t matter” crowd—only those with the money and compute to keep up will remain competitive. (That’s why Dario Amodei and others have written extensively on the value of export controls.)

This is where xAI has positioned itself remarkably well. Better than DeepSeek and, arguably, even better than OpenAI and Anthropic. (Having Elon Musk as CEO helps.) With a 100K H100 cluster (soon expanding to 200K), xAI has secured a major advantage in the next phase of AI development. Meta is following the same playbook, preparing to launch Llama 4 in the coming months, having trained it on a 100K+ H100 cluster.

For DeepSeek, engineering ingenuity alone won’t be enough this time, no matter how good they are at tinkering across the full stack (perhaps Huawei can help them). There comes a point where no amount of optimization can make up for a 150K-GPU gap. Don’t get me wrong: DeepSeek would have done the same as xAI did (they’re scale-pilled as well), but export restrictions are truly limiting their ability to grow.
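The 150K figure follows from the cluster sizes quoted in this article, as the short sketch below shows. It assumes, as a simplification, that every GPU counts the same; DeepSeek's Hoppers are described above as "fewer and worse," so a raw count likely understates the real difference.

```python
# GPU counts as quoted in the article (approximate, rounded figures).
xai_gpus_now = 100_000      # Colossus today
xai_gpus_planned = 200_000  # Colossus after the announced expansion
deepseek_gpus = 50_000      # ~50K Hoppers

gap_now = xai_gpus_now - deepseek_gpus
gap_planned = xai_gpus_planned - deepseek_gpus
ratio_planned = xai_gpus_planned / deepseek_gpus

print(f"Gap today: {gap_now:,} GPUs")
print(f"Gap after expansion: {gap_planned:,} GPUs ({ratio_planned:.0f}x DeepSeek)")
```

So the gap goes from a 2x disadvantage today to a 4x one once the expansion lands, assuming DeepSeek's cluster stays where it is.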

Not even OpenAI and Anthropic have their clusters as locked in as xAI—Nvidia’s favoritism means Musk’s company is getting priority access to next-gen hardware.

VII. So, who will be ahead a year from now?

Despite all of this, OpenAI, Google DeepMind, and Anthropic still have a small head start. OpenAI will release GPT-4.5/GPT-5, then o4, and Anthropic is about to launch Claude 4. Google DeepMind keeps improving the Thinking-model versions of Gemini 2.0 and working hard to reduce costs and augment the context window.

My prediction was that Google would be ahead by year’s end but I’m not sure anymore. The landscape has never been more competitive, and the AGI race has no clear winner. The new paradigm favors late entrants while demanding agility—a skill I’m not sure Google has mastered yet. It’s also possible they’re simply terrible at marketing their advances, making their impending successes feel far less tangible than those of their competitors.

My conclusion for this post, however, is not going to be about the AI race. It’s about a lesson that keeps resurfacing, unsettling those who want to believe that human ingenuity will always triumph over simply letting go. I hate to break it to you, dear friends, but some things are simply beyond us.

Grok 3 is impressive. But more than anything, it’s yet another reminder that when it comes to building intelligence, scaling wins over raw cleverness every time.
