AI Video Platforms Will Make TikTok Look Tame

On the addictive nature of high-quality AI videos

If you think teens spending 2–3 hours a day on TikTok is insane, you haven’t considered this possibility: Short of skipping sleep, young people with no professional duties have up to 12 free hours to melt their attention spans in the scroll mills. But worry not, AI video platforms will make sure you don’t have to imagine what that looks like.

I’ve written about AI video a few times, from praising the work it must have taken Google engineers to get from the infamous Will Smith spaghetti clip to what their models produce today in two years, to warning about the societal risks, among them the liar's dividend (passing off the real as fake) and the trust flip (you can only trust what you already trust). But I have seldom mentioned how the existence of in-demand, high-quality, customizable AI videos will affect young teenagers already rotting their brains on the shores (or should I say, in the sewers) of TikTok and similar places.

They are already dispossessed of any means to resist the wave of AI slop, or worse, of any willingness to do so. As the primary audience of a product that serves no purpose other than perpetual entertainment, these kids ought to haunt the edges of my every vision of the future. They’ve been forsaken, yet they’re our only hope—for one day, they’ll bear the burden of carrying civilization’s fire forward.

So, I ask you and myself: What are we doing to these poor sons and daughters of the 21st century, born inside a darkness that was chosen for them by capitalistic forces, short-sighted greedheads, and unwitting parents?

Since I can't do much else, I will dedicate some words of warning to this wretched demographic, despite knowing that whoever you are, dear reader, you probably already know what I'm about to say. I hope that, if you're in contact with one such creature (I, thankfully, am not), the brain rot has not yet fully taken hold, and you can pass my message along.

REMINDER: The current Three-Year Birthday Offer—20% off forever—runs from May 30th to July 1st. Lock in your annual subscription now for $80/year.

Starting July 1st, The Algorithmic Bridge will move to $120/year. Existing paid subs, including those of you who redeem this offer, will retain their rates indefinitely.

If you’ve been thinking about upgrading, now is the time.

I contend—and have for a long time—that the most dangerous weapon humanity invented during the 20th century was applied psychology.

Once we unveiled the secrets of the brain (sometimes to enhance it, oftentimes to manipulate it), we wasted no time turning it into a market. The smell of fresh cologne, the colorful toys in shop windows, the soft touch of expensive clothes, the sight of a tasty hamburger: all of that is manufactured bliss. Our senses, our perception, our attention: all have been morphed into currency. The slot-machine-ification of TikTok and the subsequent TikTok-ification of all other social media platforms (Reels on Instagram and Shorts on YouTube) are clear testaments to how far companies will go to make money at the expense of our lives.

If they don't make their products more addictive, it’s because they don't know how.

Until now.

AI offers them a clean opportunity—“clean” because the space remains recklessly unregulated, not because their goals are any less putrid—to push watch times from 3 hours a day to 12, draining and hollowing our minds with such quiet violence that neither George Orwell nor Aldous Huxley could have predicted it.

(Please, for the love of everything that hasn't yet been turned into a business, don't confuse AI/tech companies with moral entities: however evil you think doing what I suggest would be, they'd happily go further; they'd ruthlessly crush your existence for one more digit in their market valuation. Evil is not a thing for capitalists of this kind; their hand is invisible and their eyes are dead.)

To explain how this bleak prospect has gone from being the wickedest grifter’s wet dream to a tangible reality in less than a decade, I will refer to ex-OpenAI researcher Andrej Karpathy’s explanation:

Until now, video has been all about indexing, ranking and serving a finite set of candidates that are (expensively) created by humans. If you are TikTok and you want to keep the attention of a person, the name of the game is to get creators to make videos, and then figure out which video to serve to which person. Collectively, the system of "human creators learning what people like and then ranking algorithms learning how to best show a video to a person" is a very, very poor optimizer. Ok, people are already addicted to TikTok so clearly it's pretty decent, but it's imo nowhere near what is possible in principle.

The videos coming from Veo 3 and friends are the output of a neural network. This is a differentiable process. So you can now take arbitrary objectives, and crush them with gradient descent. I expect that this optimizer will turn out to be significantly, significantly more powerful than what we've seen so far. Even just the iterative, discrete process of optimizing prompts alone via both humans or AIs (and leaving parameters unchanged) may be a strong enough optimizer. So now we can take e.g. engagement (or pupil dilations or etc.) and optimize generated videos directly against that. Or we take ad click conversion and directly optimize against that.

Why index a finite set of videos when you can generate them infinitely and optimize them directly.

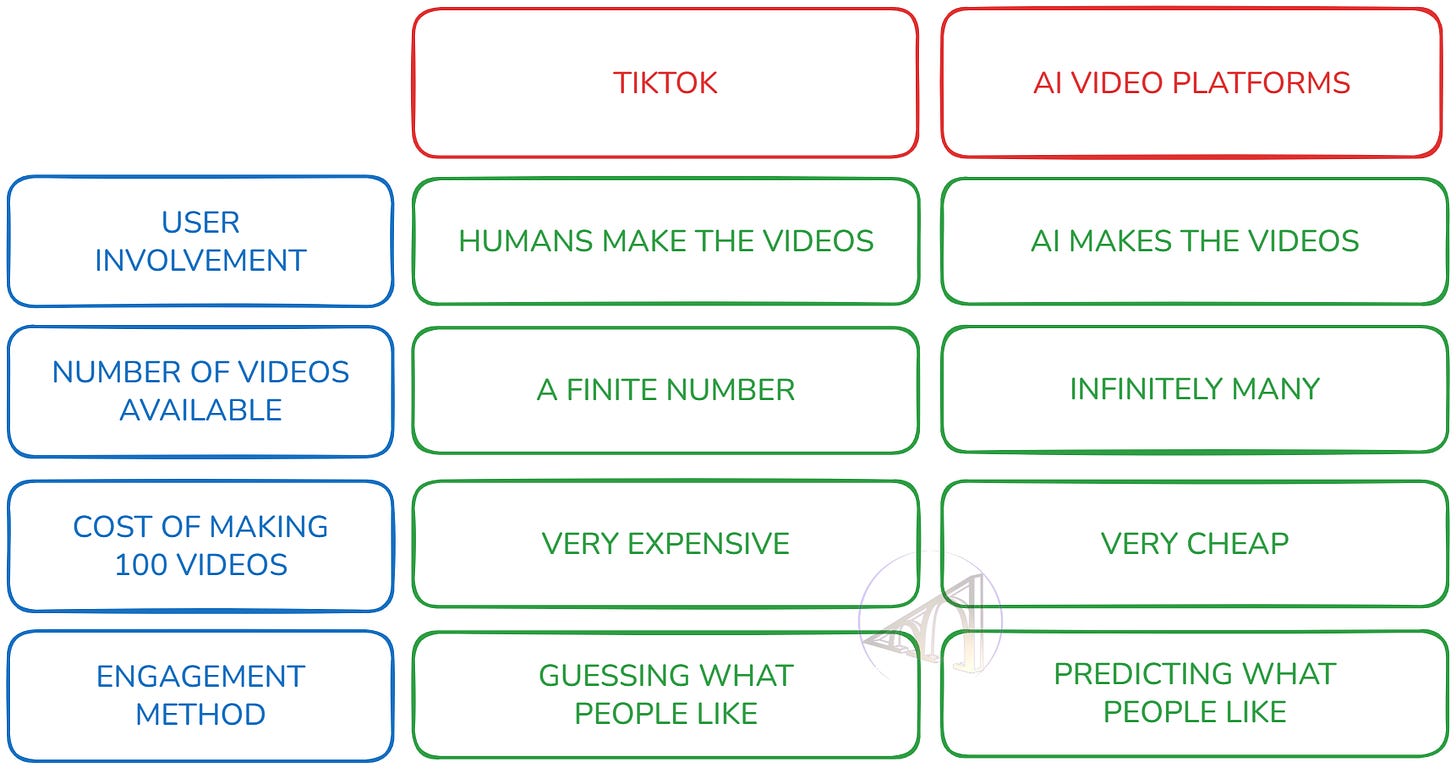

Let me describe what Karpathy sees in precise parallels:

TikTok needs to lure users (not optimal)

to create a finite number of videos (not optimal)

expensively (not optimal)

by guessing what consumers like (not optimal)

and then rank them with a recommender algorithm that has to learn who wants to watch what from behavioral cues like watch times (not optimal)

In contrast, a hypothetical AI video platform (which I assume will be one of the existing video platforms like TikTok, YouTube, or Instagram rather than a new one; Google is the next Google)

doesn't need people (optimal)

to create infinitely many videos (optimal)

cheaply (optimal)

and can ensure people like them (optimal)

by doing gradient descent or prompt engineering directly against people's preferences (optimal).
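To make the contrast concrete, here is a toy sketch in Python. Everything in it is a hypothetical stand-in, not how TikTok, Google, or Karpathy actually build anything: a "video" is just a vector of eight style knobs, the viewer has a hidden preference vector, and "engagement" is a made-up score that rises as the video gets closer to those preferences. The ranking approach can only pick the best of a fixed catalog of 1,000 pre-made videos; the generative approach adjusts the video itself by gradient ascent on the score.

```python
import random

# HYPOTHETICAL toy model: a "video" is a vector of style knobs, and a viewer
# has a hidden preference vector. This engagement score is invented for
# illustration; it peaks at 0 when the video matches the preferences exactly.
def engagement(video, prefs):
    return -sum((v - p) ** 2 for v, p in zip(video, prefs))

def engagement_grad(video, prefs):
    # Analytic gradient of the toy engagement score with respect to each knob.
    return [-2 * (v - p) for v, p in zip(video, prefs)]

def rank_finite_catalog(catalog, prefs):
    # The "index and rank" approach: choose the best of a fixed set of
    # human-made videos; nothing outside the catalog can ever be shown.
    return max(catalog, key=lambda video: engagement(video, prefs))

def optimize_generated(prefs, dim, steps=200, lr=0.05):
    # The "differentiable generator" approach: start from a blank video and
    # repeatedly nudge every knob in the direction that raises engagement.
    video = [0.0] * dim
    for _ in range(steps):
        grad = engagement_grad(video, prefs)
        video = [v + lr * g for v, g in zip(video, grad)]
    return video

if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the toy run is repeatable
    dim = 8
    prefs = [rng.uniform(-1, 1) for _ in range(dim)]
    catalog = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(1000)]
    best_ranked = rank_finite_catalog(catalog, prefs)
    generated = optimize_generated(prefs, dim)
    print(f"best of catalog: {engagement(best_ranked, prefs):.4f}")
    print(f"generated:       {engagement(generated, prefs):.4f}")
```

In this toy setup, gradient ascent drives the score to essentially its maximum of zero, while the best catalog video falls short, because the catalog is finite and was never made for this particular viewer. Real engagement is neither this simple nor directly differentiable, so treat this as an illustration of the shape of Karpathy's argument, not as evidence for it.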

Here’s a table:

What Karpathy says, in simple terms, is that an AI optimizing videos directly can get people watching for 12 hours a day (instead of the 3 hours kids currently spend on TikTok) far more easily than humans can by inferring what we like from views and comments.

He’s unfortunately right. As he puts it, TikTok is “nowhere near what is possible in principle.” Whatever you can come up with right now that you think is highly addictive, a neural network dedicated to that could create something 10x or 100x more addictive.

TikTok is humanity’s best attempt (or worst, depending on how you look at it) at turning audiovisual media into a drug. But humanity's best attempt may look like child’s play compared to what an AI optimized for the task could do.

I wrote about this in August 2022, well before this technology was ready (in article #15 of this newsletter; we’re now at #475):

. . . companies like Amazon, Google, or Meta could easily develop algorithms 100X more powerful than TikTok’s. Programs unimaginably better at keeping us engaged—or for whatever other training objective they decide to implement.

I wasn’t thinking about AI videos back then but about super-powerful recommender algorithms. Reality has turned out to be worse than I imagined.

Karpathy ends with: “And I'm not so sure that we will like what ‘optimal’ looks like.” For once, I am more sure than he is: One part of us—the one that loves sugary foods and cigarettes—will love it; all the other parts will curse the existence of this technology forever.
