7 Implications of DeepSeek’s Victory Over American AI Companies

The Chinese Whale has caused a tsunami in the US

DeepSeek, the hottest AI startup right now, has reached the number one spot on the App Store, surpassing even ChatGPT. I guess you guys are asking me to write another article on the Chinese whale.

For last week’s deep dive, I examined DeepSeek’s first AI reasoning model, R1, and its interesting sibling, R1-Zero. It makes for a rather technical read—I wouldn’t have imagined that the name DeepSeek itself would become so extremely viral in a matter of days. A genuine “ChatGPT moment” for the Chinese AI industry. Yet, the tsunami was felt especially in the West. There’s a lot at stake here: DeepSeek challenges assumptions about who leads AI innovation. It jeopardizes billion-dollar investments and threatens to tear the bottom out of very deep pockets. Not everyone has taken it well.

People—my only shareholders—are eager to understand what’s happening so I will provide. There’s too much noise and not that many people have been following DeepSeek closely enough to know what’s going on and put it in perspective. How did a Chinese startup suddenly rise to the top? Wasn’t the US supposed to be months ahead? What happens next? Will the AI bubble pop? Will the markets crash? Has America lost? Social media is filled with speculation, but few know who DeepSeek’s team is, how they work, or what sets them apart. DeepSeek, its people, and its AI models are as unknown as they’re unique, which demands a thorough analysis.

In this post, I will enumerate the implications of DeepSeek’s victory over US AI labs (geopolitical, business, cultural, etc.) so we can make sense of it all. I will add my reading at the end of each section so you can separate facts from my opinion. (I might write another post where I debunk some of the nonsense claims circulating on social media about DeepSeek—way too many—but it depends on the reception to this one.)

Making sense of a story during an information deluge—without prior context—is like assembling a puzzle blindfolded. Surprise games can be pleasant but not when answers matter. So let’s walk together through this unexpected chain of events, clarifying what’s happening now and what might follow. To do this, I’ve broken this analysis into seven sections:

The greatest geopolitical conflict of our era

DeepSeek models rival the best in the West

Blowing it open through radical transparency

How do you outplay a culture you don’t get?

Export controls didn’t stop DeepSeek’s growth

Lower costs to succeed in the US market

DeepSeek is simply an exceptional AI lab

I. The greatest geopolitical conflict of our era

DeepSeek is Chinese. I hand-waved this salient fact in my other article with some questions as “homework for the reader” but it’s undoubtedly the most relevant.

The first point I will make is also the least controversial: there’s an ongoing cold war between the US and China. It’s been going on for a while. The balance is unstable (e.g. Taiwan) but remains at this precarious equilibrium for one simple reason: Historically, China has been derivative as opposed to innovative in pure science and technology. What this implies for a superpower in the military sphere, we all know.

On the other hand, no serious analyst denies that China has been ahead of the US on a handful of critical dimensions for a while: manufacturing, industrialization, infrastructure, and even applied science and technology. But it was never a powerhouse in bold zero-to-one innovation. Not many Eureka moments. No epiphanic discoveries. Just a meticulously exemplary, country-shaped refinery.

DeepSeek is the first glimpse of China’s paradigm shift toward innovation in AI. The young startup (born in 2023!) is stereotypically closer to OpenAI or DeepMind than its national peers (even tech giants like Alibaba, Tencent, and Baidu). That makes DeepSeek a threat. Not because they will dethrone US AI labs but because it could spark a revolution among the Chinese: It is possible.

In a rare interview for AnYong Waves, a Chinese media outlet, DeepSeek CEO Liang Wenfeng emphasized innovation as the cornerstone of his ambitious vision:

. . . we believe the most important thing now is to participate in the global innovation wave. For many years, Chinese companies are used to others doing technological innovation, while we focused on application monetization—but this isn’t inevitable. In this wave, our starting point is not to take advantage of the opportunity to make a quick profit, but rather to reach the technical frontier and drive the development of the entire ecosystem.

So the answer to “What did DeepSeek do?” is “they innovated.” Well, not everyone agrees.

Some believe DeepSeek stole OpenAI’s IP or copied US labs’ approach or whatever. Others are calling them out on their cope. Incentives are vested and mixed so it’s hard to tell who considers this a genuine possibility (not that industrial espionage is new) and who is quietly shilling for their brother’s venture capital firm that’s about to lose a couple of billion. I won’t enter into those meaningless quarrels. Instead, I’ll share the evidence we have that the opposite is actually true: It’s the US companies who will now scramble to derive value from DeepSeek’s innovations.

First, DeepSeek’s talent is fully local. No employee has studied or worked overseas. (This is also a testament to China’s prowess; startups and universities can train top AI models and world-class human talent respectively.) Second, DeepSeek—contrary to Google, OpenAI, and Anthropic—publishes a lot of papers on frontier research, architectures, training regimes, technical decisions, innovative approaches to AI, and even things that didn’t work (not much on data, though).

You can check this yourself. I just googled “deepseek papers arxiv” and got eight detailed technical reports on the first page. Tell me how many you get from OpenAI. Now go read them and be honest about whether you think they show even a fraction of the depth and breadth. If we know so much about how top reasoning AI models work, it’s not thanks to OpenAI or Google. They’re as secretive as you can get. DeepSeek, however, is shouting the sauce from the rooftops.

We know the trajectory they’ve been following because it’s public. Of course, a lot of what they do requires or is based on work by US companies (e.g. Transformer, PyTorch, GPUs, CommonCrawl). DeepSeek didn’t invent the AI field. But they’ve already added their tiny contribution.

Let me ask you this: What do you think will happen to America’s hegemonic power if China, already ahead in relevant areas like industrialization and manufacturing and strategic sectors like aerospace, robotics, and energy, consistently produces AI R&D at the level of Google or OpenAI, and its university graduates enter the field with the ambition, knowledge, and passion to compete at the highest level? I don’t need to say the word “military” again, do I? Look at this cute dog instead.

My take: The US—the West, but let’s be honest, Europe is irrelevant right now—should embrace a collaborative attitude toward China because we’re not winning.

II. DeepSeek models rival the best in the West

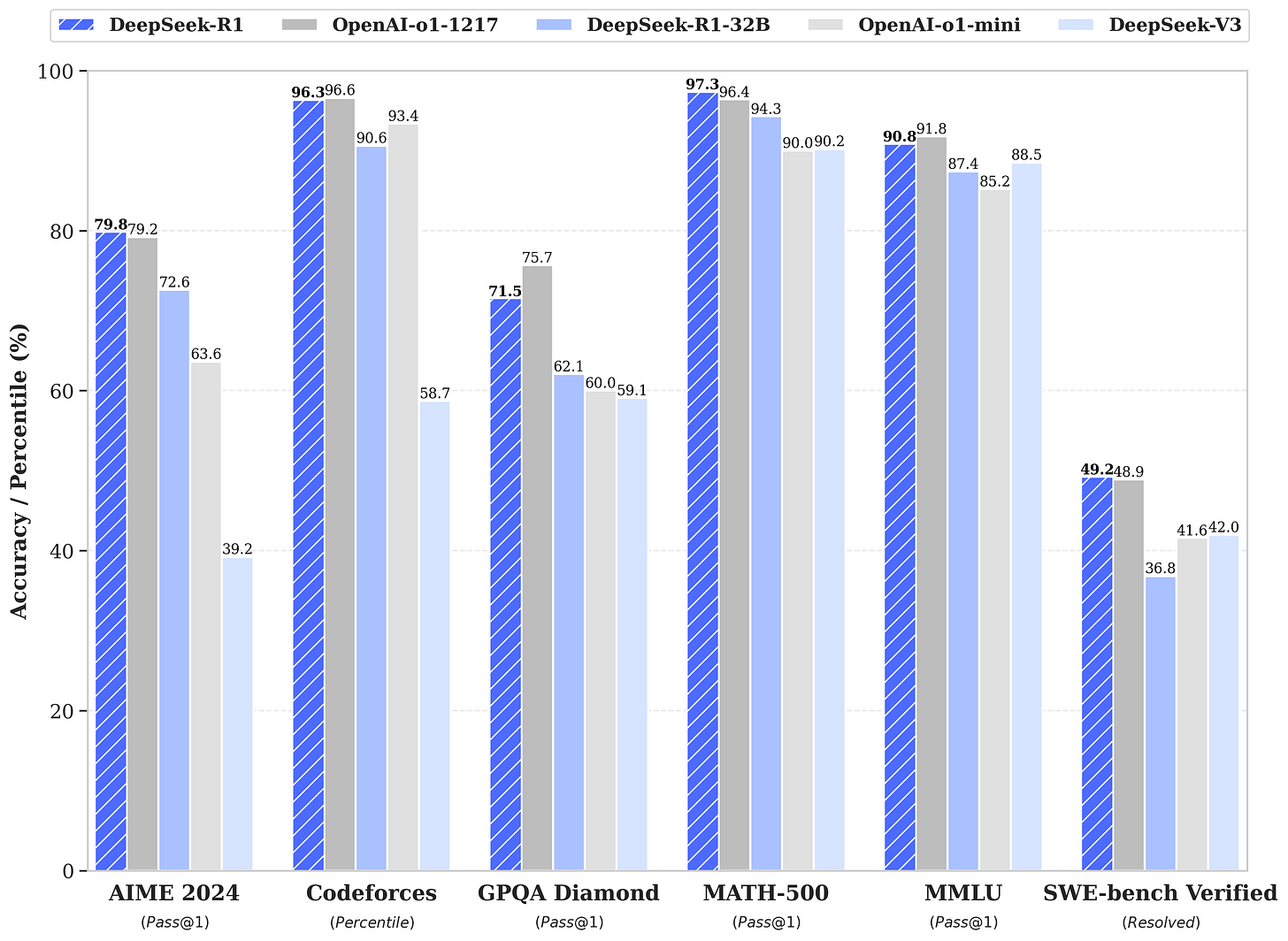

Look at these two charts. V3 (base model) and R1 (reasoning model) in the blue bars, compared to the best US competition (GPT-4o, Sonnet 3.5, Llama-405B, and o1):

Let me repeat it: DeepSeek is 1.5 years old.

Admittedly, it’s hard to know what top US labs have already trained but chosen to keep under wraps. OpenAI o3 was announced in December and o3-mini is being rolled out already (OpenAI is training o4 whereas DeepSeek-R1 was compared to o1). Google’s Gemini 2.0 Flash Thinking is also quite good and super cheap (cheaper than DeepSeek actually). Anthropic reportedly has a better model than o3 internally (although they’ve shown no reasoning model yet).

DeepSeek isn’t the best AI company in the world. Or the company that builds the best models. That wouldn’t be a faithful read of the state of affairs but instead a stretch out of either anti-Big Tech (they’re wasting billions, yay!), anti-US sentiment (China’s winning, yay!), or anti-AI excitement (the bubble’s popped, yay!). Or all three. (They’re trendy takes in certain circles, and honestly, I can’t even tell which one carries more weight anymore.)

DeepSeek’s achievements are surprising and valuable nonetheless. Despite facing significant challenges as a foreign player—export controls, a less innovative ecosystem, reliance on Western advances, and the inherent disadvantages of being a new entrant—those blue bars are real. DeepSeek has systematically hit key milestones, one after another, until catching up to 10-year-old OpenAI and 15-year-old DeepMind, handsomely backed by Microsoft and Google respectively. That’s the surprising part.

The valuable part is not that V3 and R1 rank well on benchmarks. That’s ok but boring. It is that DeepSeek didn’t stick to doing things the way US labs do them. They couldn’t. They say constraints drive innovation. DeepSeek stands as proof of that principle not only in theory but in action. With limited resources, inferior hardware, and less time, they outperformed—delivering similar results at a lower cost. If you could convert these variables into a single currency, DeepSeek would easily be at 10x the level of US labs.

Specifically, circumstances pushed researchers to tinker at the architectural and algorithmic levels, something Google and OpenAI rarely, if ever, do. Just to give you two examples: they alleviated memory bottlenecks in the transformer architecture (they use Multi-head Latent Attention, MLA, introduced for DeepSeek-V2) and simplified the reinforcement learning algorithm (they use Group Relative Policy Optimization, GRPO, introduced for DeepSeekMath, and ditched MCTS and PRM), among other things. On top of that, they leverage every tool in the GPU-poor’s toolkit: quantization (8-bit precision), sparsity, Mixture of Experts (not all parameters are active during inference), and multi-token prediction (DeepSeek reports this nearly doubles decoding speed). (If you didn’t understand this paragraph, believe me: it doesn’t matter.)
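For the curious, here is a minimal sketch of the core idea behind GRPO: sample several answers to the same prompt, score them, and use each answer’s reward relative to its own group as the training signal, rather than training a separate value model. The function name, the made-up rewards, and the use of the population standard deviation are assumptions for illustration. This is not DeepSeek’s actual code, and it leaves out the policy update itself.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each sampled answer's reward against its own group.

    Hypothetical helper: the group mean stands in as the baseline,
    so no learned value model is needed.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population std over the group
    # eps avoids division by zero when every answer gets the same reward
    return [(r - mu) / (sigma + eps) for r in rewards]


# Made-up rewards for four answers sampled for the same prompt
# (1.0 = correct, 0.0 = wrong).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)
```

With these toy rewards, the two correct answers get an advantage of about +1 and the two wrong ones about -1. If every answer in a group scores the same, all advantages are zero and the group contributes no learning signal.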

My take: By working within their constraints, DeepSeek researchers uncovered new approaches no one else dared venture into before (or didn’t want to; more on this later) and achieved state-of-the-art performance.

III. Blowing it open through radical transparency

All of DeepSeek’s models are open-source (specifically open-weights; they don’t share details about the data they were trained on—provenance, quality, manipulation techniques—arguably the most valuable insight they could provide). The CEO has also stated they actively pursue an open-source AGI—meaning they actually intend to benefit everyone, not just say they do.

DeepSeek’s low-cost, open-source approach effectively commoditizes—whether by design or as a side effect—the core products that companies like OpenAI and Anthropic rely on as their primary revenue streams, evoking echoes of the Apple-Microsoft battles of the 1990s. The simplified idea is this: dominate one layer of the product’s vertical—for example, give away the software app but monetize licensing or support—while applying a price-zero strategy elsewhere in the stack to undermine competitors. This forces them to lower prices on their core offerings, driving down the total cost and increasing demand. Over time, you gain monopoly control as your competitors succumb to a slow, inevitable decline.

That’s Meta’s goal in the US AI space: give away the Llama model weights to recoup their investment through AI-driven integrations within their social media platforms, where they already maintain a near-monopoly. However, DeepSeek’s approach differs from Meta’s. They don’t appear to gain monopolistic advantages anywhere in the tech stack by commoditizing their models as a complement to a related revenue source. Instead, the company’s funding (as far as we know) comes from Wenfeng’s successful hedge fund, High-Flyer. It has nothing to do with AI so it’s completely independent from DeepSeek’s business.

Wenfeng just doesn’t seem to care whether or not he makes any money at all by selling access to the API or through the free, unlimited-access app. He acts like an intellectually driven rich guy pursuing an expensive hobby out of sheer curiosity. Very respectable.

Meta’s models not only lag behind those from Google and OpenAI performance-wise; they are also much more expensive to operate than DeepSeek’s offerings (more on that later). So DeepSeek is a top player in AI (out-competing Google and OpenAI with an open-source approach) and the top open-source player (out-competing Meta and reportedly making them freak out).

But let’s be cynical here for a moment. Is this open-source approach a carefully designed tactic by a Chinese company to upend the American AI market? Could be but it’s weird to say that when Meta is doing the same thing (albeit unsuccessfully). Or is it a long-term strategy by the CCP to render the West dependent on Chinese AI innovation, only to cut off access once US companies have been driven out, crushed by insurmountable competition? It’s hard to say. We can make assumptions but that’s all.

My take: A world-class open-weights model is a good thing for everyone, whether Chinese or American in origin, especially individual consumers.

IV. How do you outplay a culture you don’t get?

The thing about China isn’t just that it’s perceived as the West’s geopolitical adversary. It’s that its culture feels fundamentally alien to us. People who’ve lived there can attest to that. Biologically we’re all children of evolution but culturally, we’re children of our country, traditions, religion, history, and idiosyncratic ways of living. China and the US share none of those things.

I haven’t lived in China or worked for a Chinese tech firm, so I’ll defer to two experts who can speak more insightfully about the intersection of Chinese culture and AI. The following brief excerpt focuses more on Huawei than on DeepSeek but works as a first approximation. It's from a conversation on Dwarkesh Patel’s podcast, featuring Dylan Patel (chief analyst at SemiAnalysis) and Asianometry:

China has a culture that’s really big on struggle. They go crazy because they think that in five years, they’re going to fight the United States. Literally, everything they do, every second, their country depends on it.

Imagine living that way.

China can seem more like a cultural monolith compared to the West, with the collective often taking precedence over individuality or self-interest. Harmony, duty, and long-term thinking shape everything from their rapid modernization to a tech industry focused on the bigger picture rather than personal recognition. While individuality and ambition are certainly present, the emphasis on societal well-being remains a defining and distinct feature of Chinese culture.

Talking about DeepSeek specifically, I recognize a few traits you won’t find in its American counterparts: They don’t boast about AGI or hype their products or try to “capture the lightcone of the universe” or weird stuff like that. They want to “unravel the mystery of AGI with curiosity,” nothing else. They also avoid anthropomorphizing AI models and don’t think about them as a new species, sentient beings, or the cocoon of an unborn machine God. They don’t let “straight lines on a graph” decide their fate. And they pay no attention to cult-like groups like Yudkowskyan rationalists so they’re not nearly as preoccupied with AI safety and AI alignment.

DeepSeek’s curiosity-first mentality goes beyond culture: US labs spend a lot of money and time with their alignment-focused research and behavioral guardrails so that their models don't go unhinged, don't get easily hacked, are controlled by their creators, etc. If a foreign lab doesn't care about this stuff and still creates great models, what do you think people will choose? No one, be it Chinese or American, likes to be patronized. DeepSeek-R1 feels better when you talk to it—it writes better—because it isn’t as lobotomized as ChatGPT, Gemini, or Claude (even if it has other kinds of safeguards). And you can see it think out loud (awww). (This makes it much slower but… awww.)

We know what companies do when pushed to choose between principles and money (and arguably the security of their nation), so what comes next? This is the beginning of the end of the AI alignment era and the true beginning of a full-blown capabilities race. It takes a single serious disruptor to topple a decades-old house of cards. DeepSeek is, for better or worse, the first great fork in the road ahead. May the Gods of Silicon be merciful to you, fellow traveler.

Back to culture. DeepSeek isn't obligated to shareholders because they don’t have any. Are they obligated to the CCP? We don’t know. People are claiming that DeepSeek can only have achieved this because they’re of course a technological arm of the government. They might be. I don’t know. But I know it’s not impossible to do what they did (really, read the papers). You just have to understand that they’re good researchers and engineers and their motivations differ from ours.

My take: What DeepSeek lacks in technical experience, computing resources, and presence in the industry, they compensate for with unusual determination and antifragility (and perhaps some extra help).

V. Export controls didn’t stop DeepSeek’s growth

DeepSeek (and by extension High-Flyer) is not as GPU-rich as top American labs. Estimates put them well under the 100,000 H100 GPU mark, which is the size of Elon Musk’s new supercluster (Dylan Patel says they have 50,000 Nvidia Hoppers). Meta, the open-source loser that’s freaking out, will have 1.3 million GPUs by the end of 2025. That’s 26x as many as DeepSeek’s 50,000. For those spending hundreds of billions on infrastructure, DeepSeek being GPU-poor is of little consolation if they still manage to compete toe-to-toe.
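If you want to check the ratio yourself, here is the back-of-the-envelope arithmetic. It takes Meta’s figure as 1.3 million GPUs and uses the estimates quoted above, which are rough, unaudited numbers; the variable names are mine.

```python
# Estimates quoted in the text; none of these are audited figures.
deepseek_gpus = 50_000       # Dylan Patel's estimate of DeepSeek's Hoppers
musk_cluster_gpus = 100_000  # H100s in Elon Musk's supercluster
meta_gpus_2025 = 1_300_000   # Meta's stated target for the end of 2025

ratio_meta = meta_gpus_2025 / deepseek_gpus
ratio_musk = musk_cluster_gpus / deepseek_gpus

print(f"Meta vs DeepSeek: {ratio_meta:.0f}x")
print(f"Musk's cluster vs DeepSeek: {ratio_musk:.0f}x")
```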

Should OpenAI, Google, and Zuck ditch all their compute so they can innovate through their constraints like DeepSeek did? Jokes aside: Should the US remove the export controls given that they’re not stopping China from getting ahead? Also no: Jordan Schneider at ChinaTalk says that this kind of regulation takes more time than we’ve given it to take effect. We should just wait. Besides, DeepSeek’s CEO admitted that their problem was never money but the lack of Nvidia chips. Export controls aren’t perfect (they can be cleverly bypassed) but they’re also not innocuous. They shouldn’t be thought of as a binary choice.

The giga-datacenters US companies are building now and in the coming years (e.g. OpenAI’s Stargate, xAI’s Memphis supercomputer, and Anthropic’s multi-million-chip clusters, not to mention those of cloud hyperscalers like Google, Amazon, and Microsoft) are the kind of thing DeepSeek won’t be able to benefit from. They’re good at making models but have no interest in designing AI hardware as far as I know and, due to the export controls, no chance of getting those quantities. They depend on Nvidia’s sleight of hand or, if things go well, Huawei.

I want to address a misguided take that’s gone viral on social media: DeepSeek’s success in making models more affordable without the massive hardware resources of US companies doesn’t disprove scaling laws (bigger still tends to be better) or eliminate the need for larger supercomputers (whatever the market says). Their achievements highlight the potential of optimization and incremental innovation, not a rejection of scale. The take I’ve read that best captures this compromise between “cheap is possible yet scale works” is Andrej Karpathy’s (as a response to the release of DeepSeek-V3 in December):

Does this mean you don't need large GPU clusters for frontier LLMs? No but you have to ensure that you're not wasteful with what you have, and this looks like a nice demonstration that there's still a lot to get through with both data and algorithms.

Simple as.

DeepSeek’s push to innovate at the algorithmic level is, however, an echo of broader Chinese efforts on the hardware side. They may not be GPU-poor for long. How much time will it take China to have a direct response to the great trinity: Nvidia (GPU designer), TSMC (advanced foundry), and ASML (EUV lithography pioneer)? They’re almost there for TSMC with SMIC (still a multi-year gap). Can they achieve ASML-level EUV lithography in the coming years? Perhaps, but not imminently. And, sorry to say this, but Nvidia’s CUDA moat is arguably the easiest to jump over (Huawei is still trying though).

I’ve heard experts argue that export controls are a short-term fix. In the long run, these restrictions could drive China to develop robust national solutions to address its reliance on key players in the semiconductor supply chain. Let Nvidia sell its advanced chips to China and, without incentive to make their own, they will be increasingly dependent on the American industry. Of course, if it’s Nvidia—the chip seller—who says this, color me skeptical. But force them to survive by cornering them against their own flaws and they will survive through them. Remember, antifragile is their last name.

My take: China can and will eventually solve the semiconductor gap with the West but until that happens, DeepSeek will have to scramble to keep up—you can only do so much optimization. Whether you need a $500 billion project to prove a point is a different matter.

VI. Lower costs to succeed in the US market

Let’s talk money.