19 Anti-Populist Takes on AI

An uncomfortable listicle for the end of the year

What is noteworthy about populists is that they do not champion all of the interests of the people, but instead focus on the specific issues where there is the greatest divergence between common sense and elite opinion, in order to champion the views of the people on these issues. . . . People are not rebelling against economic elites, but rather against cognitive elites.

— Joseph Heath, Populism fast and slow


The “man vs. machine” narrative is simplistic; man and machine are seldom allies from the outset but virtually never enemies (over time, the allyship strengthens). The enemy is, if anything, man: first, when the status quo is disrupted, the sort who builds the machine and imposes it onto us; then, when the transition is over, the sort who refuses to acknowledge its societal benefits.

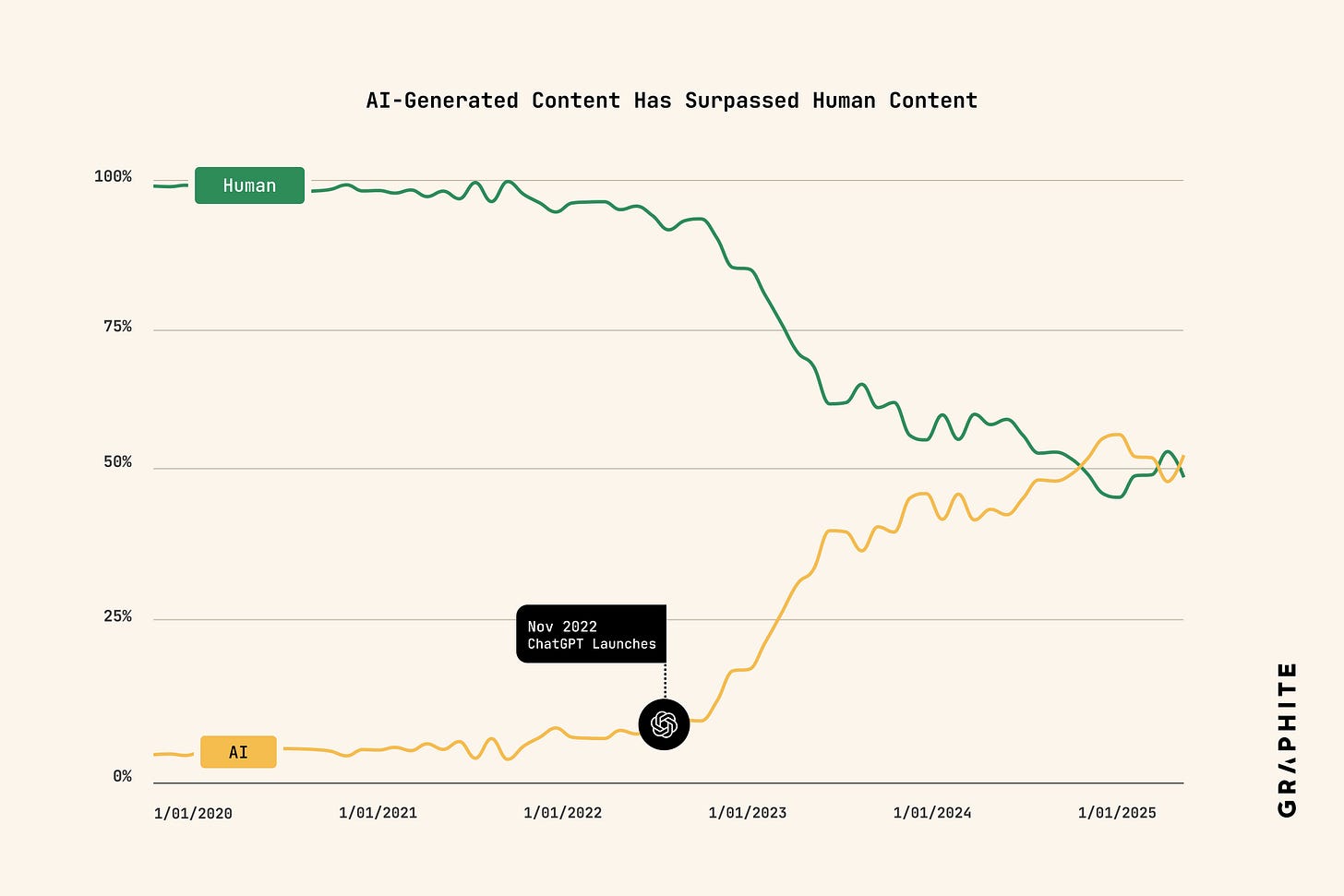

Digital marketing firm Graphite conducted a study concluding that “More articles are now created by AI than humans.” Generative AI destroys the scarcity that gave average professionals a career; this is initially a tragedy for the worker and a miracle for the consumer, but eventually it becomes a miracle solely for those who stand to gain from selling low-quality abundance.

Source: Graphite Culture critics rarely judge derivative art on its condition as derivative but on the means by which that derivation is achieved. Accordingly, the general public tends to resent the derivativeness only when a machine does it. Maybe the true lesson of “AI art,” if we agree such a category exists, is that photography and digital art were never particularly artistic to begin with.

Banning AI in schools (or instructing against its use) is not about protecting standards but rather about preserving an obsolete instruction methodology. The system protects itself, not the student (it will do this with or without AI, but now has a handy scapegoat). AI can be extremely useful, but this, too, requires qualification: people using AI for their benefit are pre-selected, in the sense that AI doesn’t change the disposition of a person but acts instead merely as an enhancer of existing passions. (Again, you are what you are with or without AI.)

Regulation favors incumbents who can afford compliance lawyers; lack of regulation favors scammers who ignore safety. No path favors the “little guy.” The little guy is the consumer, but also the smaller companies that could eventually threaten Big Tech and force them to reduce prices and improve their products. We will miss this temporary stage of fierce competition once AI companies—the ones still alive—put ads and other insidious features into their services.

Existential risk is a valid philosophical concern, but in the mouths of suited executives with incentives to conceal the truth, it sounds like a marketing strategy to build a regulatory moat around their eventual monopoly. Unfortunately, it’s hard to discern which of the two it is in any given case, so it’s safer to assume the marketing strategy, even to the detriment of researchers speaking up in good faith. This is a special case of the maxim: “the general public is never the target of AI hype.”

If you think AI is “woke,” you are just mad that it doesn’t confirm your conservative or libertarian biases; if you think AI is “fascist,” you are just mad that it reflects the inherently unjust reality of a capitalistic society. The machine is a mirror (slightly distorted to the left, just like the internet): if you hate the reflection, you hate the object being reflected, not the mirror itself.

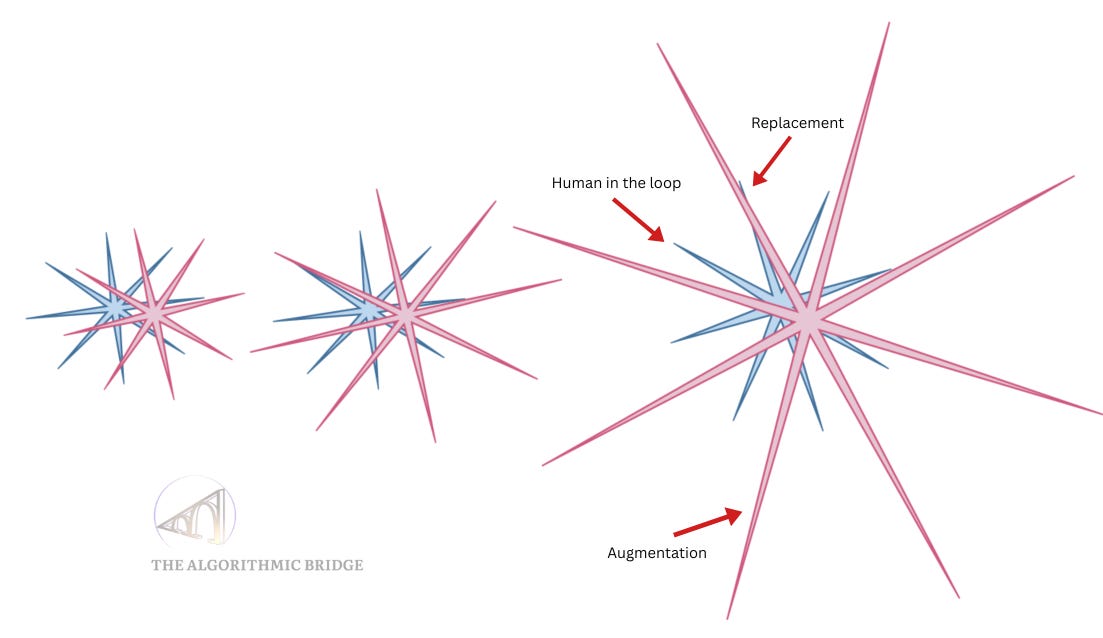

Replacement (AI can do what we do, e.g., boilerplate code), augmentation (AI does what we are bad at, e.g., fast arithmetics), and “human in the loop” (we do what we AI is bad at, e.g., tacit know-how tasks in the real world) scenarios are not mutually exclusive: thanks to the jagged nature of AI, all three will happen simultaneously (I explored this idea in The Shape of Artificial Intelligence). Here’s a symbolic visualization (the blue star covers the territory of human capability, the pink one, the territory of AI’s capability):

The “black box” nature of AI is a technical reality. Even the creators don’t know why it works, only that it works. When it fails, they don’t always know why or how to fix it. However, this can be mistakenly extrapolated into a discharge of accountability: Even if they are not responsible for what AI is, they are 100% responsible for the process by which it becomes what it is. (Social media is the same: the algorithm obeys your revealed preferences—what you like without knowing you like it—but the fact that it’s designed to make it seem that “what you like” is a faithful representation of “what you are” is 100% their fault.)