What You May Have Missed #21

GPT-4 coming this week? / Noam Chomsky: The False Promise of ChatGPT / Midjourney, DALL-E, and Stable Diffusion / Social media + generative AI / Open-source AI / Human brains = ChatGPT? / PaLM-E / Misc

GPT-4 coming this week?

At the AI kickoff event on Thursday, Microsoft Germany CTO Andreas Braun said: “We will introduce GPT-4 next week, there we will have multimodal models that will offer completely different possibilities, for example videos.”

That’s what journalist Silke Hahn reported for Heise in a piece that soon went viral on social media. After doubts arose about the quality of the source and the plausibility of the information, she confirmed that she had reported it faithfully.

If true, it’ll be the most important AI announcement in the short term: first, because it’s GPT-4, which everyone has been anticipating for years, and second, because multimodal large language models (MLLMs) are the future (Microsoft recently released Kosmos-1, another step in this direction).

A multimodal GPT-4, which isn’t implausible, would mark a key milestone for the field (here’s an overview of its potential capabilities as an MLLM, by Dr. Jim Fan).

But is the news true? First, it’s unusual for Microsoft Germany (rather than Microsoft US) to make an announcement of this caliber. Second, it’s even stranger given that GPT-4 is an OpenAI model, not a Microsoft one. And third, this kind of news isn’t normally announced ahead of the official release.

People speculate that Braun might have conflated two different things in his statement: GPT-4 might be coming, but not next week, and OpenAI/Microsoft might be working on multimodality separately from GPT-4.

Another possibility is that the news is true but Braun wasn’t the person designated to announce it. A final, albeit unlikely, explanation is that Silke Hahn misinterpreted Braun’s statement.

Whatever the case, we'll know very, very soon.

Noam Chomsky: The False Promise of ChatGPT

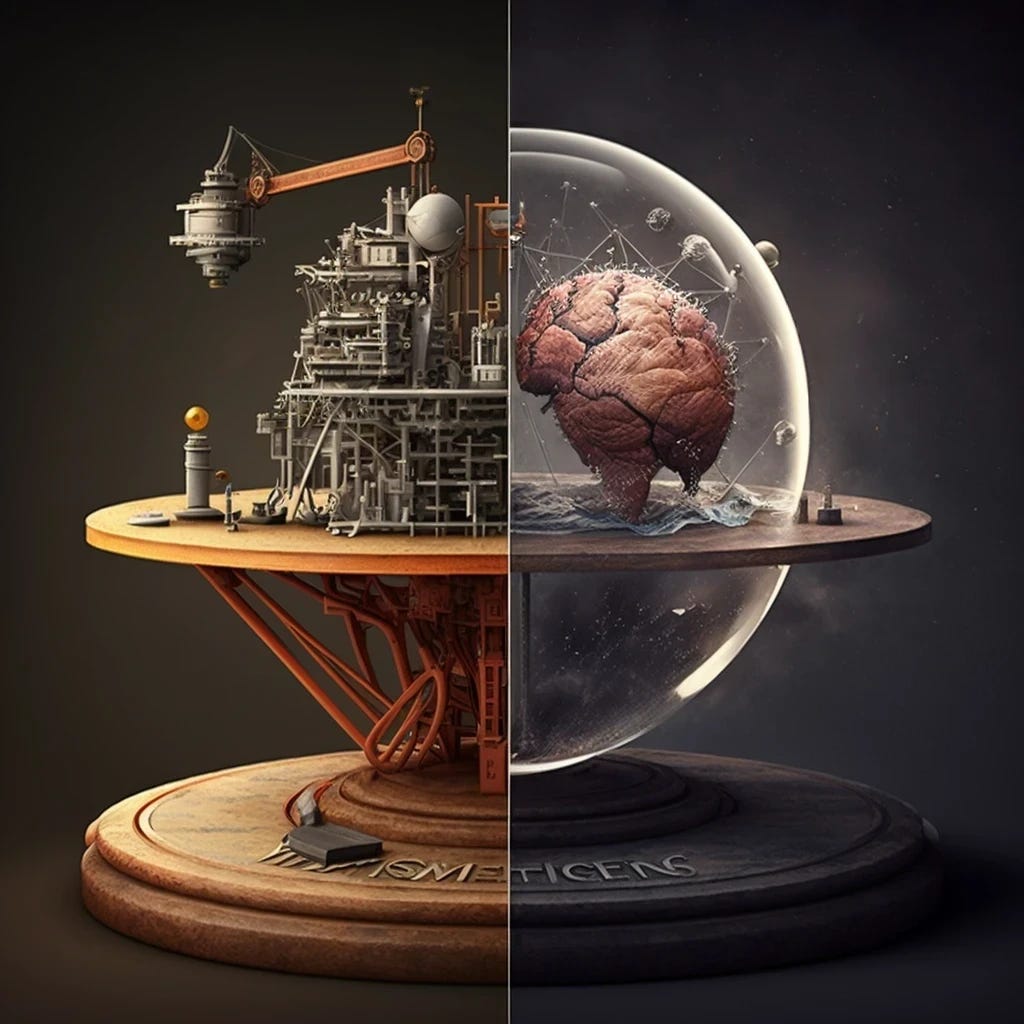

The other AI news that monopolized the conversation last week was Noam Chomsky’s NYT essay (co-written with Ian Roberts and Jeffrey Watumull) on “the false promise of ChatGPT.” Chomsky, often called the father of modern linguistics and a pioneer of cognitive science, has been highly critical of machine learning, and of language models like GPT-3 in particular, for years. Roughly speaking, he argues that although these AI systems may be useful as engineering tools, they don’t advance science or our understanding of language and cognition.

Here’s the paragraph from the essay that, in my opinion, best summarizes Chomsky’s view of language models and modern AI (which, in his view, relies too heavily on statistical models):

"Indeed, such programs are stuck in a prehuman or nonhuman phase of cognitive evolution. Their deepest flaw is the absence of the most critical capacity of any intelligence: to say not only what is the case, what was the case and what will be the case — that’s description and prediction — but also what is not the case and what could and could not be the case. Those are the ingredients of explanation, the mark of true intelligence."

This debate, over whether science should aim for adequate descriptions and predictions of the world or for explanations of it, is old and evergreen. It’s not a problem to be solved but a reflection of the different views scientists hold about what humanity should pursue. If you want to go deeper down the rabbit hole, read the illuminating essay “On Chomsky and the Two Cultures of Statistical Learning” by computer scientist Peter Norvig. He wrote it in response to an earlier take by Chomsky on the same topic at MIT’s Brains, Minds, and Machines symposium (here’s the transcript).

If we take that paragraph as a faithful summary of the article’s main point (which goes beyond ChatGPT), I find the essay valuable. And if we accept the authors’ perspective on ChatGPT’s limitations, it’s a reasonable and insightful critique. However, the examples the authors use to support their arguments are poorly chosen, and the language is “editorialized” to provoke a reaction, so most people don’t share my takeaway. Instead, there’s been a widespread negative reaction to Chomsky’s harsh take on ChatGPT.

The backlash has come from all corners of the ML and cognitive science communities. Gary Marcus wrote an essay highlighting the criticisms of professors Emily M. Bender, Scott Aaronson, Tom Dietterich, Geoffrey Hinton, and others. Marcus, as you may guess, agrees with Chomsky’s main criticism of modern AI. Yet he acknowledges the essay is “overwritten” and “needlessly inflammatory.” I agree, although I don’t see that as a bad thing given the amount of overwritten, hype-y claims in the other direction. And given Chomsky’s stated stance on rhetoric, that “one should not try to persuade,” I suspect the editorial process, and perhaps his co-authors, had a lot to do with this.

I found several other responses. Many are simply jokes, dismissals, or weak counterarguments that make no real attempt to rebut Chomsky’s views (you can read them and decide for yourself). Jokes: Togelius, Bubeck (Bing quotes statements from a different piece, which makes this “dunk” funny), Domingos, and xlr8harder. Dismissals: Goldberg, Manning, and Lampinen. Weak arguments: Li, “It took me 30 seconds to fact-check this claim in the Chomsky op-ed,” Mu, and Qureshi.

The reason for such a widespread reaction, I think (even when there’s no actual engagement with the arguments), is that Chomsky is arguably the most famous and respected linguist, philosopher, and scientist alive, which gives his opinions enormous weight: those who think otherwise understandably feel threatened by them.