Stable Diffusion Is the Most Important AI Art Model Ever

A state-of-the-art AI model available for everyone through a safety-centric open-source license is unheard of.

Earlier this week the company Stability.ai, founded and funded by Emad Mostaque, announced the public release of the AI art model Stable Diffusion. You may think this is just another day in the AI art world, but it’s much more than that, for two reasons.

First, unlike DALL·E 2 and Midjourney, which are comparable in quality, Stable Diffusion is available as open source. This means anyone can take its backbone and build, for free, apps targeted at specific text-to-image creativity tasks.

People are already developing Google Colab notebooks (by Deforum and Pharmapsychotic), a Figma plugin to create designs from prompts, and Lexica.art, a prompt/image/seed search engine. Also, the devs at Midjourney implemented a feature that allowed users to combine it with Stable Diffusion, which led to some amazing results (it’s no longer active, but may return once they figure out how to control potentially harmful generations).

As I’m writing these words, not 72 hours have passed since Stable Diffusion was released. Just imagine what’s coming out in the next weeks/months.

Second, unlike DALL·E mini (Craiyon) and Disco Diffusion, which are comparable in openness, Stable Diffusion can create amazing photorealistic and artistic artworks that rival the output of OpenAI’s or Google’s models. People are even claiming it is the new state-of-the-art among “generative search engines,” as Mostaque likes to call them.

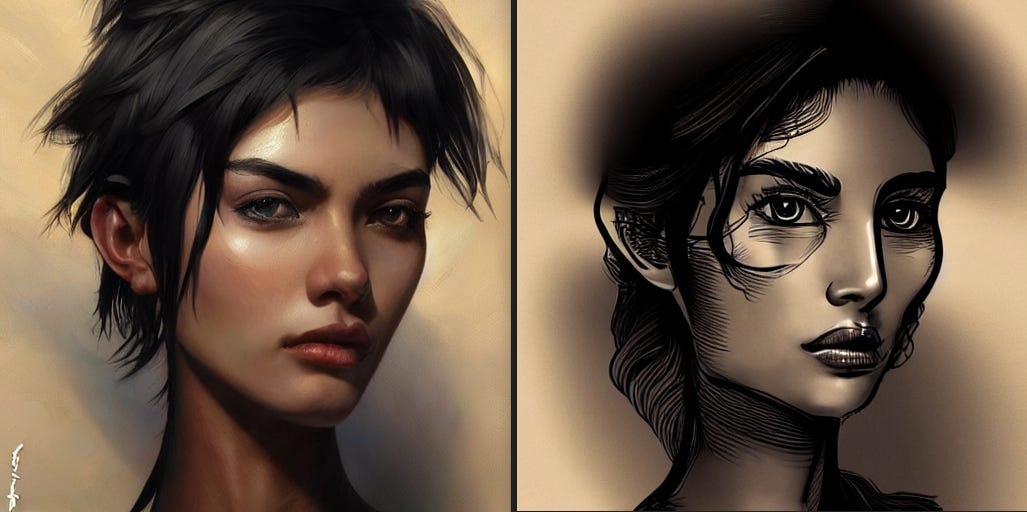

To give you a sense of Stable Diffusion’s artistic mastery, I’ll sprinkle the article with some of my favorite artworks that I’ve found in the communities on the Discord server (unless stated otherwise, all images were created with Stable Diffusion).

Stable Diffusion embodies the best features of the AI art world: it’s arguably the best existing AI art model, and it’s open source. That combination is simply unheard of and will have enormous consequences.

In this newsletter, I often write about AI that’s at the research stage—years away from being embedded into everyday products. Those articles may be interesting but not very useful. Stable Diffusion is an example of an AI model that’s at the very intersection of research and the real world—interesting and useful. Developers are already building apps you will soon use in your work or for fun.

Interestingly, the news about those services may get to you through the most unexpected sources. Your parents, your children, your partner, your friends, or your work colleagues—people who are often outsiders to what’s happening in AI—are about to discover the latest trend in the field. Art may be the way by which AI technology finally knocks on the door of those who are otherwise oblivious to a future that’s falling upon them. Isn’t it poetic?

Stable Diffusion—more than an open-source DALL·E 2

Stability.ai was born to create “open AI tools that will let us reach our potential.” Not just research models that never get into the hands of the majority, but tools with real-world applications open for me and you to use and explore. That’s a shift from other tech companies like OpenAI, which is jealously guarding the secrets of its best systems (GPT-3 and DALL·E 2), or Google, which has never intended to release its own (PaLM, LaMDA, Imagen, or Parti) even as private betas. A few months back I heard rumors that Stability.ai wanted to build an alternative to DALL·E 2, and they ended up doing much more.

Emad Mostaque learned from OpenAI’s mistakes. The viral success of Craiyon, despite its lower quality, exposed DALL·E’s shortcomings as a closed beta. People don’t want to watch others create awesome artwork. They want to do it themselves. Stability.ai has gone even further: this public release isn’t just intended to share the model weights and code, which are key to the healthy progress of science and technology but which most people don’t care about. The company has also provided a no-code, ready-to-use website for those of us who don’t want to code or don’t know how.

That website is DreamStudio Lite. It can be used for free for up to 200 image generations (enough to get a sense of what Stable Diffusion can do). Like DALL·E 2, it uses a paid model, which gets you 1K images for £10 (OpenAI refills 15 credits each month, but to get more you have to buy packages of 115 credits for $15). Compared per image, DALL·E costs about $0.03 whereas Stable Diffusion costs £0.01 (note that the two prices are in different currencies).

You can also use Stable Diffusion at scale through the API (the cost scales linearly, so you get 100K generations for £1,000). Beyond image generation, Stability.ai will soon announce DreamStudio Pro (audio/video) and Enterprise (studios).
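The per-image prices quoted above can be checked with a few lines of arithmetic. This is only a rough sketch: the `Plan` class and its `cost_per_image` method are hypothetical names made up for illustration, and the DALL·E figure assumes that one credit yields four images, which this article does not state. The two services are priced in different currencies, so the numbers are not directly comparable without an exchange rate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    """A paid plan: a price in some currency for a number of images."""
    name: str
    price: float
    currency: str
    images: int

    def cost_per_image(self) -> float:
        # Linear pricing: total price divided by images included.
        return self.price / self.images


# Assumption (not stated in the article): one DALL·E credit = 4 images.
IMAGES_PER_DALLE_CREDIT = 4

dalle = Plan("DALL·E 2 credit pack", 15.0, "USD", 115 * IMAGES_PER_DALLE_CREDIT)
dreamstudio = Plan("DreamStudio", 10.0, "GBP", 1_000)
api = Plan("Stable Diffusion API", 1_000.0, "GBP", 100_000)

for plan in (dalle, dreamstudio, api):
    print(f"{plan.name}: {plan.cost_per_image():.4f} {plan.currency} per image")
```

Under that assumption the DALL·E pack works out to roughly three cents per image, and both Stable Diffusion options come to one penny per image, which is what "scales linearly" implies.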

Another feature that DreamStudio will probably implement soon is the ability to generate images from other images (plus a prompt), instead of the usual text-to-image setting.

On the website, there’s also a resource on prompt engineering that you may need if you’re new to this (it isn’t trivial to communicate well with these models). Also, unlike with DALL·E 2 (or even Craiyon), you can control parameters to influence the results and retain more agency over them.

Stability.ai has done everything it can to ease people’s access to the model. OpenAI was first and had to go more slowly to assess the potential risks and biases inherent to the model, but it didn’t need to keep the model in closed beta for so long or establish such a creativity-limiting subscription business model. Midjourney and Stable Diffusion have both proven this.

Safety + openness > privacy and control

But open-source technology has its own limitations. As I explained in my article on GPT-4chan ‘the Worst AI Ever,’ openness should go before privacy and tight control, but it shouldn’t go before safety.

Stability.ai has taken this very seriously by collaborating with Hugging Face’s ethics and legal teams to release the model under the CreativeML Open RAIL-M license (similar to the license of BigScience’s BLOOM model). As the company explains in the announcement, it’s “a permissive license that allows for commercial and non-commercial usage” and focuses on the open and responsible downstream use of the model. It also requires derivative works to be subject, at minimum, to the same use-based restrictions.

Opening the model is a great step by itself, but establishing reasonable guardrails is just as important if we don’t want this technology to eventually harm people or add more noise to the internet in the form of misinformation. Even with the license, that may still happen. Emad Mostaque refers to this explicitly in the blog post: “As these models were trained on image-text pairs from a broad internet scrape, the model may reproduce some societal biases and produce unsafe content, so open mitigation strategies as well as an open discussion about those biases can bring everyone to this conversation.” In any case, openness + safety > privacy and control.

The power of open-source to change the world

With a robust foundation of ethical values and openness, Stable Diffusion promises to go beyond its competitors in terms of real-world impact. If you want to download it and run it on your own computer, you should know that it takes 6.9 GB of VRAM, which fits in a high-end consumer GPU and makes it lighter than, say, DALL·E 2, but can still be out of reach for most users. The rest, like me, can start right away with DreamStudio.

Generally regarded as the best generative AI art model out there, Stable Diffusion will become the basis for innumerable apps, websites, and services that will redefine how we create and interact with art. Until now, if you wanted decent results you had to use DALL·E 2 or Midjourney, both of which are limited in that they’re completely opaque (Craiyon is good for memes, but it’s nowhere near what most professionals need quality-wise).

But now, apps designed specifically for different use cases will be built from the ground up, for everyone to use. People are enhancing kids’ drawings, making collages with outpainting + inpainting, designing magazine covers, drawing cartoons, creating transformation and animation videos, generating images from images, and much more.

Some of those applications were already possible with DALL·E and Midjourney, but Stable Diffusion can drive the current creative revolution to the next stage. Andrej Karpathy agrees.

Stable Diffusion will force a much-needed conversation

But global paradigm shifts aren’t pleasant for everyone. As I explained in my latest article on AI art, “How Today's AI Art Debate Will Shape the Creative Landscape of the 21st Century,” we’re getting into an extremely complex situation, now accelerated by the open-source nature of the model. Artists and other creative professionals are raising concerns, and not without reason. Many will lose their jobs, unable to compete with the new apps. Companies like OpenAI, Midjourney, and Stability.ai, although superpowered by the work of many creative workers, haven’t compensated them in any way. And AI users are standing on their shoulders, but without asking for permission first.

As I argued there, AI art models like Stable Diffusion belong to a new category of tools and should be understood with new frameworks of thought adapted to the new realities we’re living in. We can’t simply draw analogies or parallels with other epochs and expect to explain or predict accurately what’s going to happen. Some things will be similar and others won’t. We have to treat this impending future as uncharted territory.

Final thoughts

The public release of Stable Diffusion is, without a doubt, the most significant and impactful event to ever happen in the field of AI art models, and this is just the beginning. Emad Mostaque said on Twitter that “as we release faster and better and specific models expect the quality to continue to rise across the board. Not just in image, audio next month, then we move on to 3D, video. Language, code, and more training right now.”

We’re on the verge of a several-year revolution in the way we interact with, relate to, and understand art in particular and creativity in general. And not just in the philosophical, intellectual domain, but as something now shared and experienced by everyone. The creative world is going to change forever, and we have to have open and respectful conversations to create a better future for all. Only open-source technology used responsibly can create the change we want to see.
