OpenAI Is in Trouble (For Real This Time)

Facing Meta and Microsoft at once is not the best idea

Hello friends—it’s Alberto.

Today’s post is about OpenAI’s talent war with Meta and its growing tensions with Microsoft, and whether it will emerge stronger from these conflicts—or fractured.

I’ve realized a bit too late (three years in!) that I never properly introduce myself, and a friend at Substack told me that I should. I’m a writer who once tried to be an engineer. I care about AI and technology, but also about culture, philosophy, and the complicated business of being human. This newsletter is where I bring those worlds together. If I had to say what I’m best at, I’d say: stubborn common sense. Come in!

GENTLE REMINDER: LAST DAY FOR 20% DISCOUNT OFFER BEFORE PRICE RISE!!

The current Three-Year Birthday Offer gets you a yearly subscription at 20% off forever, and runs from May 30th to July 1st. Lock in your annual subscription now for $80/year. Starting July 1st, The Algorithmic Bridge will move to $120/year. If you’ve been thinking about upgrading, now is the time.

Every year for the past six years, OpenAI has been in trouble. Every year, it has emerged stronger.

OpenAI ran into trouble in 2019, when it alienated supporters by refusing to open-source GPT-2 and by partnering with Microsoft to become a capped-profit startup. It ran into trouble again in 2020, when the academic success of GPT-3 drew the attention of AI ethicists, who exposed the representational harms and lack of transparency baked into the model’s outputs and training process. In 2021, the trouble came from inside, when the Amodei siblings, along with several other key employees, left to found the rival company Anthropic. Trouble resurfaced at the end of 2022, when ChatGPT’s popular success pushed the company to prioritize products over research. OpenAI found itself in trouble again in late 2023, when the boardroom coup took place and Sam Altman was fired for not being “consistently candid.” Then, in 2024, serious internal trouble returned when heavyweights Ilya Sutskever, Mira Murati, Alec Radford, and John Schulman, along with several other key employees, left to join or found competitors. (So many people have left OpenAI over the years, more than 60, that they’re known as the OpenAI Mafia.)

Now, in 2025, OpenAI is in trouble again on two fronts. First, it has antagonized Microsoft, the one industry incumbent that sponsors its expensive ambitions, and tensions between the two are growing. Second, the other incumbents, which were never especially friendly, have become more aggressive: Meta has recently been poaching key talent, Google DeepMind has woken up and now leads in several areas, and Apple, once a potential key ally, likely feels betrayed now that Altman is working closely with iPhone designer Jony Ive to take on the entire iSuite.

Will OpenAI, the poster child for corporate controversy in the AI industry, survive these new threats? Let’s see what is going on.

It all started two weeks ago, when Altman, always inclined to dominate the public discourse and always careful to curate his image, revealed on his brother’s podcast that Meta CEO Mark Zuckerberg was trying to lure OpenAI employees with $100 million bonuses (probably meant to match the value of their unvested stock options, a sum that is peanuts for Meta). But, he was quick to point out, “so far none of our best people have decided to take them up on that.”

As the anonymous X account Signull (which I recommend following) puts it, this is “narrative warfare.” If the nine-figure sum is real, Meta's current AI researchers and engineers will be mad. If it's fake news (as it seemingly is), Meta will be forced to call Altman out (as it did), which lets him frame the conversation. And by labeling those who stay at OpenAI “our best people,” he implies that whoever leaves to work for Zuck is both a sellout and not a key asset. (Whether this is true, or even whether it is the impression Altman's words actually left on the public, is unclear.)

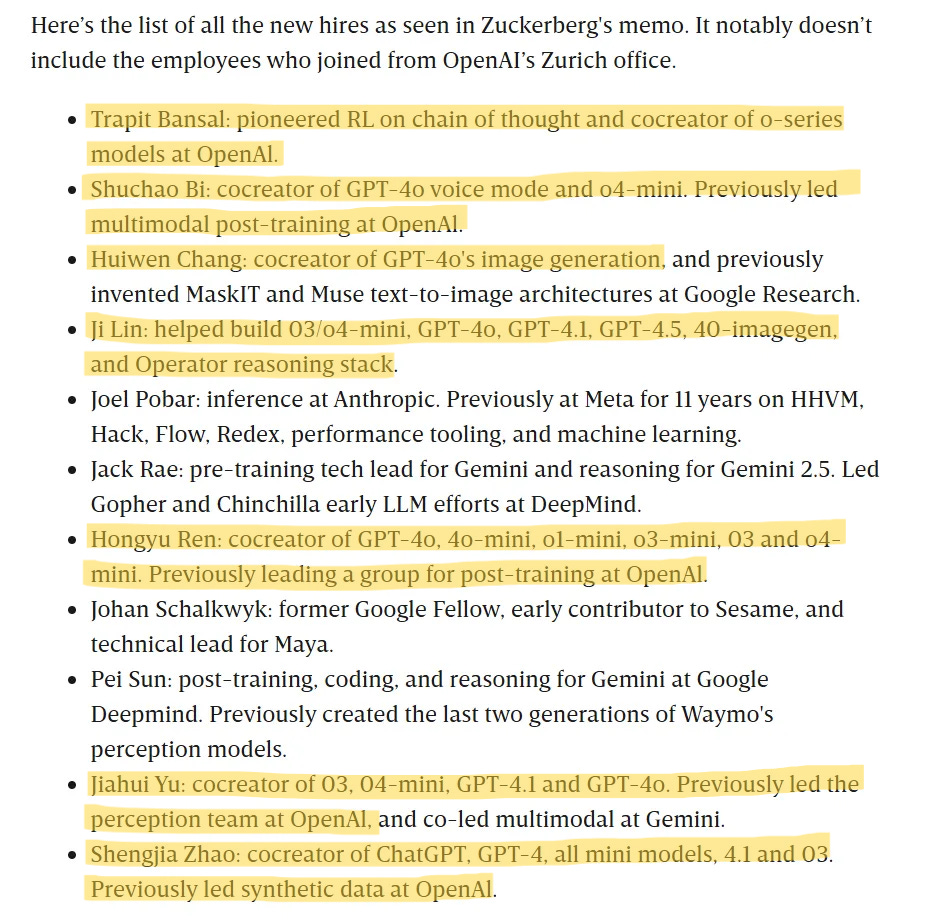

What is certain is that, at the time of writing, Zuck has managed to poach as many as 10 OpenAI employees (as far as I know, two remain unconfirmed). First, the Zurich office leadership trio left (I guess the farther you live from Silicon Valley, the less “AGI-pilled” you are). Then four more people from the Silicon Valley headquarters also took Zuck up on his offer. This is all fine, you may think, because Altman preemptively controlled the story: those who left are not stellar talent, remember? That would have worked, except it's trivial to show that those who left were indeed stellar talent. (The image below, from an internal memo about Meta’s new superintelligence team, was obtained by Wired.)

OpenAI has lost people who worked on or led GPT-4o, the most used AI model in ChatGPT, and other GPT-4.X models, as well as people who worked on or led the o-series, the cornerstone of ChatGPT’s reasoning capabilities (including Trapit Bansal, who co-created it with Ilya Sutskever). Not looking good.

To head off any melodramatic claim that “OpenAI is dead,” I’ll admit that 10 is not a worrisome number: OpenAI has more than 3,000 employees, and if the 2024 brain drain didn’t kill it, this smaller batch of ship-jumpers won’t. However, the employees who remain, who may be only barely comfortable with Altman’s shenanigans even when they work, might be flat-out frustrated that his words on the podcast backfired.

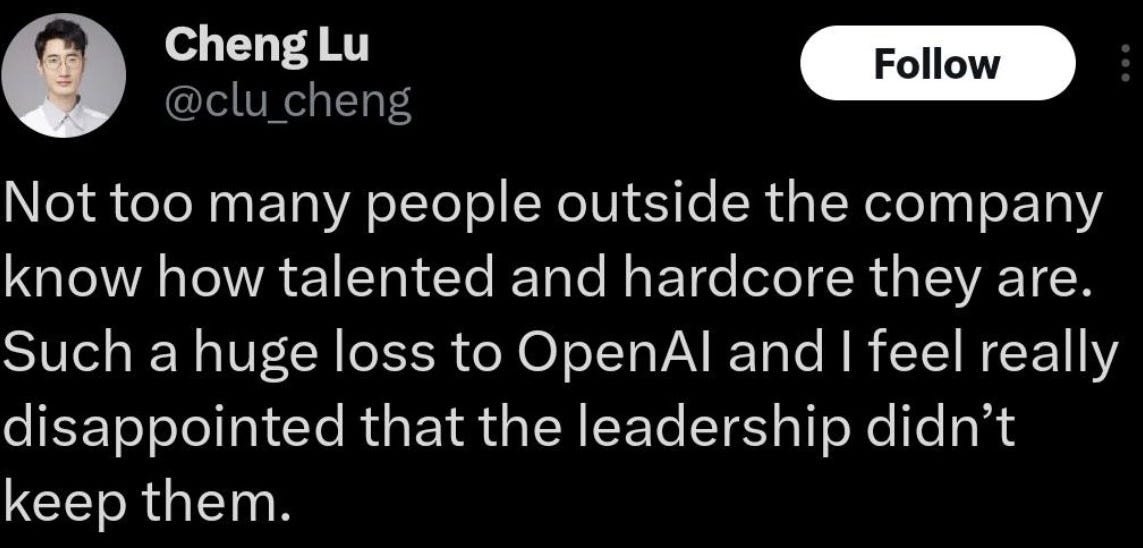

Cheng Lu, a research scientist at OpenAI, published and quickly deleted the tweet below. Now that we know that the hearts OpenAI employees subserviently displayed on social media when Altman was fired in 2023, along with the open letter almost all of them signed demanding that the board reinstate him, were motivated by fear of losing their unvested equity rather than genuine devotion to him, it’s fair to extrapolate Lu’s stance to others inside the company. They just won’t say it out loud because too much money is on the table. (You can also read testimonies from former OpenAI employees in the OpenAI Files or in Empire of AI by journalist Karen Hao.)

If no one dares speak openly about their true feelings toward OpenAI’s leadership, the company risks becoming an army of yes-men, uncritically rubber-stamping Altman’s unilateral decisions. In a way, Altman’s past behavior (the lack of candor and so on) pushed away everyone who was either good enough to start their own thing or disagreed with him strongly enough to give up the cash. Those immune to his manipulative demeanor are no longer at OpenAI. Those who stayed are either naturally aligned with him or psychologically vulnerable to his character, and I’m not sure which is worse.

But Altman, probably delighted that his company and his board are under his absolute control, seems to have forgotten that OpenAI was once a great place to do great work precisely because people could hold conflicting opinions. Open debate about safety and the mission was the norm, and employees could freely raise ideas that weren’t directly in service of flashy announcements and mass-market products. Any good leader listens to those who know more than they do.

If Altman now always gets his way because he has instilled fear in those who would otherwise be willing to contradict him, and those who were unafraid simply left, then what awaits OpenAI is a long, slow descent into irrelevance. Perhaps not financial irrelevance, but innovative irrelevance.