Generative AI Is Not a Magic Wand

It is time to update expectations

I wrote this article on May 26th, 2023, more than a year ago and just half a year after the release of ChatGPT. I chose to repost it today because it has proven quite prescient. At the time, many people called generative AI revolutionary. I warned: “Very few things in history have withstood the test of time to be called ‘revolutionary’. Generative AI doesn’t seem to be one of them.” So far, I haven’t been proven wrong. In any case, it makes no sense to preemptively call an innovation revolutionary before it has proven worthy of the label. It is time to update expectations.

There’s a gap between what we imagined we could do with generative AI and what we can actually do. A divergence between expectations and reality. François Chollet says that people love to imagine what AI can create but “not so much the actual reality of using it.”

This wasn’t at all clear a couple of months ago. Only firsthand experience with state-of-the-art tools, set against our preconceptions, could provide such a powerful insight.

We can now check whether generative AI clears the bar we set.

Generative AI has been portrayed as mystically unsettling, almost like a breed of alien creatures. For months, this depiction fueled great anticipation of what it could do (or, to be precise, what we could do with it).

It’s been half a year since ChatGPT entered public awareness. A year since OpenAI released DALL-E 2. And three years since GPT-3 convinced techies that something big was coming (for the record, in my view GPT-2 → GPT-3 was a more impressive leap than GPT-3 → GPT-4).

Looking back at the most ambitious predictions people defended, we should readjust our views going forward. (I’ll focus on ChatGPT because it’s the flagship tool.)

It was December 1, and suddenly Google and teachers were dead. College essays were dead. ChatGPT would supplant search engines as “a source of consultation” and replace our minds as a source of generation. That was the mainstream take.

Today, six months later, Google is better off than it was before ChatGPT (as are OpenAI and Microsoft). It’s doing well financially, and it’s rapidly integrating this tech into its widely used suite of products. Incumbents’ moat is undeniable. Neither ChatGPT nor Bing killed Google for one simple reason: you can’t rely on a language model-powered chatbot to give you accurate information. If anything could kill Google Search, it’s teens using TikTok and Instagram, and young adults using Reddit, for search. Not generative AI.

Professors are tinkering with ChatGPT and learning to introduce it in their classes (or simply banning it). Humans are flexible. We adapt. Writers and amateurs find ChatGPT mediocre at best (they remain hopeful of future versions, but I have my doubts; averageness is imbued into GPT’s design). ChatGPT didn’t displace teachers because learning and teaching are more than reading words on a screen. It didn’t kill essays because writing is more than typing words on a page. Humans are creative; we’re the source of the creativity AI appears to have.

Soon ChatGPT wasn’t interesting anymore. Generative agents like AutoGPT and BabyAGI were making it obsolete (!). Jarvis was no longer science fiction. “The future’s here,” but where are the millionaire entrepreneurs who delegated their finances to their AI servants? If Jackson’s experiment worked, it wasn’t because of GPT-4 but because of the publicity he got on Twitter. Social media virality, not generative AI, was the reason. Generative agents are a promise, nothing more.

There are other claims that I can’t falsify for now (or ever, in some cases), like the magnitude of generative AI’s threat to the workforce, whether GPT-4 is sparking AGI, whether neural networks are conscious, or, ridiculous I know, whether we should start to think about how to govern superintelligence. You see the trend: each new thing coming out of the generative AI factory is exaggerated, for good and bad, so that it becomes, in the collective imagination, the best or worst thing ever.

I see generative AI differently: as a set of tools that, like any other, has upsides and downsides. It’s just another component (a singular piece, to be sure) in the huge machinery that governs our digital lives.

It needs the web and smartphones for people to access the models. It needs cloud servers to train and run them. It’s a compendium of complex algorithms (some older than me) born of rare human ingenuity. And it’s built on top of vast datasets, which we’ve collectively created. It needs social media to disseminate information, and disinformation. Jobs, or rather tasks, have been ceaselessly automated by technology for ages. Notably, some of its most pressing shortcomings, like confabulation and prompt injection in language models, are pervasive and won’t be solved without a paradigm change.

Generative AI is a cog: a new one in a well-geared, perpetual world that won’t stop moving and won’t break apart because of it. (Humanity already faces bugs in its operating system and cracks in the foundational pillars that sustain our societies, none of which have anything to do with this new wave of technology.)

Generative AI is neither a panacea nor the apocalypse. There has been both good and bad, and there will continue to be, but neither has the good been so great nor the bad so horrible: it’s not turning the world upside down except to the degree we make it look that way. Magic appears when we disconnect our imagination from reality; once we ground it back in the real world, hyperboles deflate. In the end, only the adjacent is possible.

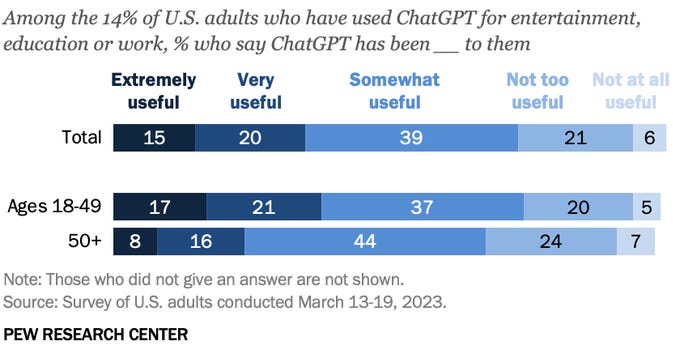

To close, let me give you a recent piece of evidence, published two days ago, that further reinforces my thesis. It was shared by OpenAI co-founder Greg Brockman: “58% of US adults have heard of ChatGPT; 14% have tried it.”

Here’s my response after reading the study; there’s more:

Here’s the chart:

Let me repeat that: 2% of US adults find ChatGPT “extremely useful” for entertainment, work, or education. 1 in 50! If we add the “very useful” group, the total barely reaches 5% (“somewhat useful,” the next point on the scale, is too ambiguous to reveal anything).

ChatGPT, the undisputed superstar among generative AI tools, is working well (which I take as a good proxy for “as useful as expected, or more”) for 1 person in 20.

With these shocking numbers in mind, think about the degree to which generative AI is capturing the conversation everywhere. Think about all the wild predictions and exaggerated portrayals of ChatGPT and its ilk on social media, the news, government comms, industry PR, and academic papers.

While we are, just for this brief instant, out of our AI bubble, let me ask you something I also ask myself: is the attention generative AI receives warranted?

Generative AI is many things—useful, interesting, entertaining, and even problematic—but it’s not a world-shaking revolution. Very few things in history have withstood the test of time to be called “revolutionary.” Generative AI doesn’t seem to be one of them.

Let’s not call a magic wand what’s better described as a wooden stick. And I don’t mean it in a derogatory way.
