AI Influencers From the Post-ChatGPT Era

A new stage in the history of AI marked by the emergence of a new type of hype

An unsurprising side-effect of ChatGPT going viral is that generative AI has become an attractor for people who care about it exclusively as a means to gain money, social media presence, or business opportunities: The new class of AI influencers.

Let me start by making it clear that I don't think there’s anything wrong with using what’s hot to grow a business. As the space of AI influencers grows, they give the field more visibility to people who would’ve never heard of it otherwise (as incredible as it sounds, most people are unaware of how AI is changing the world). That's great—more people knowing about AI means fewer consequences due to ignorance.

But there’s a second-order effect of this phenomenon. AI influencers’ incentives to talk, write, and inform about AI are not always aligned with people’s desire to know what AI is, how it works, or what makes sense to use it for. Often they just want to take advantage of the new trend, which translates into lower-quality knowledge sources.

And, to the frustration of people who try to educate others about AI in a balanced way, they will, with time, become the most common (and most followed) resources on AI. That’s what this post-ChatGPT era inevitably brings.

A new kind of hype that feeds on superficial narratives

A few weeks ago, Google’s François Chollet posted a Twitter thread that nicely captures this feeling:

He's referring more to the general climate of unfounded optimism and excessive hype than to these AI influencers and their practices, but his arguments apply just as well.

As Chollet notes, people (i.e. users and investors) are expecting a lot from AI—the hype bubble is bigger than ever. ChatGPT isn’t just friendly and intuitive (and, for now, completely free), it also is, for most people, the first active, direct contact they’ve ever had with a powerful AI system. For them, it’s truly a revolution.

For me (us) it isn't. I agree with Yann LeCun, Meta's chief AI scientist, in that “revolutionary” may not be the best adjective to define ChatGPT. As he told tech reporter Tiernan Ray in an interview for ZDNet:

“In terms of underlying techniques, ChatGPT is not particularly innovative … It's nothing revolutionary, although that's the way it's perceived in the public … It's just that, you know, it's well put together, it's nicely done.”

Most people don’t see it that way. Nathan Benaich (co-author of the State of AI Report) responded that LeCun has “lost the plot” given that “tons of people find [ChatGPT] actually useful.”

What Benaich is omitting, though, is that people aren’t finding it useful because it’s qualitatively better than previous iterations, like GPT-3—that’s LeCun’s argument—but simply because they’re aware it exists.

Imagine if you came across ChatGPT and the only thing you knew about AI was Siri’s ability to schedule a date in the calendar. That’d be quite the leap, wouldn’t it? You’d perceive ChatGPT's impact as “civilization-altering,” as Chollet remarks. I sure would.

And the hype worsens when people treat the narratives as “self-evident”. Those GPT-4 visual graphs with millions of views don’t provide sources or data to support the claims. They’re purely emotional: “Oh my god, a revolution is coming. Brace yourselves, you’ve seen nothing yet. The Singularity is near…” That's, as Chollet says, the perfect “bait tweet” for marketers.

AI has been hyped since forever, but always because of its potential and, more recently (for the past 10 years or so), because of the unprecedented success of the deep learning (DL) paradigm.

AI being the mainstream topic everyone is talking about gives rise to a different type of hype: a hype that comes from outsiders who neither know nor care about the history of the field, the underlying tech that fuels those fancy models, or the limitations and social repercussions that inevitably come with the development of any powerful new technology.

Instead, these outsiders, the marketers and the AI influencers, go around making baseless claims and predictions that lack credibility. And it doesn’t matter. Credibility, rigor, and evidence are words that pale next to the bright magic of AI (to borrow Arthur C. Clarke’s popular expression).

AI influencers transform and multiply the hype: It’s not about “DL works for X so it’ll work for Y” (which can be a reasonable—and debatable—claim) but about “wow, that GPT-4 circle is sooo big.” Rational arguments no longer work. How do you fight that?

Going back to Chollet’s arguments, it’s important to note that he qualified his comparison between AI and web3 (I agree they’re not the same):

ChatGPT has aggravated—with the help of its large flock of AI influencers—the hype around AI but it’s nowhere near web3 in hollowness, even if only because AI has actual applications that people find useful (although there’s a suspicious overlap between AI and web3 influencers).

If generative AI is tangibly useful, then the hype is less problematic, right? Well, no. Web3 was easily dismissed as scam-ish. AI influencers, in contrast, talk about something real—AI hype seems to be justified. But it isn’t: They add an embellishment layer on top of the truth to beautify and hyperbolize their discourse. Exaggerations that lead to confusion are much more difficult to combat than outright lies that fall under their own weight.

That’s what we face today, both I (and many others) as informants attempting to provide level-headed takes on AI, and you, who expect reliable, high-quality information. We will both have to push away the garbage while navigating a sea of AI now flooded with influencer and marketer bullshit.

AI influencers have conquered social media

Such a rant requires evidence on my part. You have probably seen most of these posts before, but it's worth gathering them here.

There’s a whole spectrum of tonalities between correct and incorrect information. Between true and false knowledge. AI influencers often get dangerously close to the wrong side (although not always).

I’m sharing below the most obvious examples, but there are subtler ones (e.g. investors and marketers talking about AI as if they were already insiders before the DL boom in 2012).

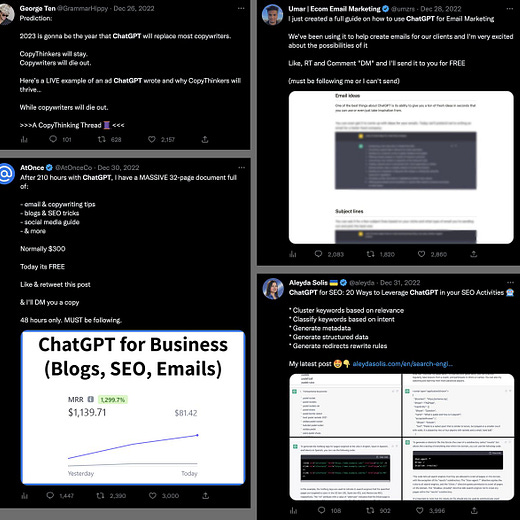

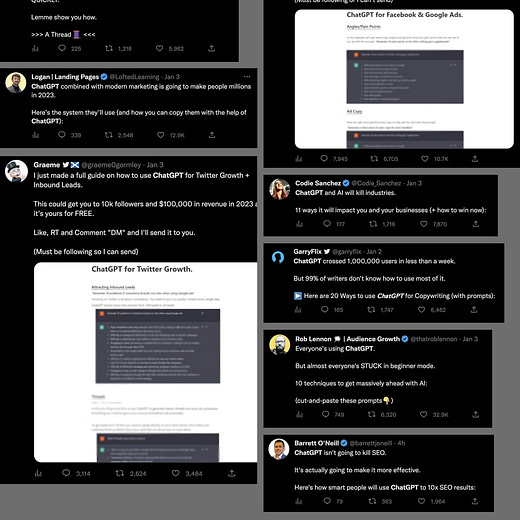

I started to notice the AI influencer vibe about ChatGPT from a Tweet of the form “everyone uses ChatGPT, but…” These kinds of posts try to make you feel FOMO. Even if you know about ChatGPT, there’s room for improvement and, if you don’t keep reading, you’ll fall behind. Maybe you’re “stuck in beginner mode,” you’re “not using it to its full potential,” or you need “some hacks” to use it right.

Another favorite among Tweetstars is the “how to get 10x [fill in the blank with anything] with ChatGPT.” You can’t not use ChatGPT if others may be 10x-ing their jobs. Do you want to get “10x better responses,” 10x easier content, “10x your design,” “10x your programming productivity,” or 10x your sales? You choose; it seems ChatGPT can literally 10x whatever you want. YouTubers also like the 10x hook: for productivity, Excel skills, or the generic (but always welcome) “10x your results.”

Others go for the financial benefit: How to make money with ChatGPT? Do you need “7 ways to make money online”? Maybe “4 GENIUS ways” to do it? You can always go for the more specific “+$850 per day” ChatGPT-based side hustles. AI-savvy Medium writers can also help you make a “shit-ton of money” with ChatGPT “before it’s too late.”

And then there's LinkedIn, probably the most fertile social network for these people. Just as an example, here’s what I get when I search for “ChatGPT” companies:

None of those is official. The first two (100K+ and 50K+ followers) send you to a Facebook group and to a website to create courses, respectively.

As Chollet puts it, rather mildly, the current climate in AI would make anyone “uncomfortable”.

Two caveats. First, not all of those seemingly similar resources are of the same quality. You may find the gold hidden in there. It’s the form, not the substance (although usually, they go hand in hand), that throws me off—and all are quite similar in that regard.

Second, the fact that an AI resource (e.g. a newsletter) was born after ChatGPT doesn’t imply it’s of low quality. Leveraging ChatGPT’s attractiveness to increase traffic isn’t bad; what’s bad is doing it at the cost of the quality of the information.

What I criticize in this piece is that the virality of ChatGPT encourages these people to prioritize talking about AI in any way possible over doing it conscientiously. That’s the recipe for an AI winter: the hype won't pay off.

The bitter realization

I was watching Sam Altman’s interview with Connie Loizos the other day and read a comment that left me thinking: “Sam's interviews getting 1000x less engagement than clickbait ChatGPT videos is quite astounding.”

Even if I understand exactly why it happens, it’ll always amaze me that this observation is 100% correct. As I see it, this was the only path by which AI could go mainstream. There was hype in AI before ChatGPT (there always has been), but what we’re living through now is unheard of. It doesn’t reflect ChatGPT’s value or potential, but merely its attractiveness.

If we wanted AI to reach everyone, I can't help but think this was the way. We love clickbait. We love hype. We love easy content. We love shortcuts. We don't like hard stuff that requires energy, time, and effort. That’s why AI influencers even exist in the first place.

Personally, I feel I have to reject this forbidden fruit daily when deciding what to write about (writing about ChatGPT is crucial; it’s how and why we do it that matters). My work consists of writing good articles as much as it consists of restraining myself from falling into that appealing trap and contaminating my content in exchange for traffic and visibility.

Despite knowing I could take the fast route, I won't become an AI influencer (in the sense I'm using here). I won’t jump on this bandwagon. I hope you don’t either.

Haha, I must admit that I’ve considered hopping on the grift with, like, a one-off paid Zoom workshop for authors on “How to speed up your author workflow using AI.”

For some less tech-savvy authors, I think this might actually be a useful workshop.

"Influencers" exist in any area with money and excitement.

From the low end of "Work at home making $86 an hour" ads to the high end of "Investment bank/med school interview tips". They exist because of informational asymmetry: people selling bad advice that catches eyeballs can survive and thrive just by capturing a small share of paying customers.

There's no way to eliminate them; they can only be suppressed.

They'll be suppressed when AI companies become household names and credible brands in the AI information space shift from "AI expert/researcher" (trivially fakeable credentials) to "Worked at OpenAI/DeepMind/Stability/some not-yet-famous but eventually world-famous AI company". People will then filter out the noise from those without credible brands.

You'll also have real celebrities from AI companies. 10 years ago, Elon Musk was still a nobody to the general public, despite having founded PayPal and being a billionaire. Now he is an automatically credible voice for the space and EV industries, with incredible reach. Sam Altman is still a nobody to the general public, but in 10 years he will be widely known. Then, instead of random hype articles, people will actually flock to Sam Altman on Joe Rogan.

The problem with AI right now is that little real cash is being made. For the media and layman, unless they can see you are a multi-millionaire from AI, they have no reason to listen to you, and they should not be expected to understand and differentiate the technical aspects of AI models. Once real money is made, then they have a signal of who to listen to and trust.