Large language models have a lot of challenges ahead.

They’re costly and polluting. They need huge amounts of data and compute to learn. They tend to engage in stereotypes and biases that could potentially harm already discriminated minorities. The larger they are, the harder it is to make them safe and the people behind them accountable. In some cases, the only reason companies build them is to signal that they’re in the game too. Companies tend to overemphasize merits and dismiss deficiencies. To name just a few.

But there’s one other problem that, although we’re aware of it, can be extremely hard to detect: AI-based misinformation.

Misinformation is a tricky problem in AI, particularly in language models, because it can be difficult to tell the difference between a piece of information that is simply inaccurate and one that has been deliberately created to mislead. This is a problem that has been exacerbated by the rise of deep learning, which has made it easier to generate fake data that is very difficult to distinguish from real data.

Although language models don’t have intent, they can be guided by humans with malicious interests to attain a goal. For instance, political actors can use language models to generate fake news articles or social media posts that spread false information. This type of AI-based misinformation can be extremely difficult to detect and can have a significant impact on society.

Tom Hinsen, a well-known New York Times journalist, recently covered a story in which a group of hackers had used the system GPT-3 to create a series of targeted posts they published on Facebook with the intention to add confusion about the Russia-Ukraine war.

Hinsen explained that the group had fed the system information about the conflict, and then the system had generated posts that appeared to be from real people living in the conflict zone. The posts were designed to create confusion and sow discord.

This is just one example of how AI-based misinformation can be used to manipulate public opinion and create chaos. “They were able to do this because the system is so good at generating realistic-looking text,” said Hinsen. “It’s a scary example of how AI can be used to create fake news.”

Not only in the US

The problem of misinformation is particularly acute in China, where the government has decided to use AI to control the narrative. The Chinese government has been investing heavily in AI, and it is now using that technology to censor the internet and to control the information that its citizens have access to.

The Chinese government has also been using AI to generate fake news articles that paint the government in a positive light and to spread propaganda. This is a serious problem because it means that the Chinese people are not getting accurate information about what is happening in their country.

Wen Qingzhao, a professor at the University of Science & Technology of China and renowned expert on the social and political effects of AI misinformation, says that “China is not the only country where AI is being used to control the narrative. In fact, it is happening all over the world. AI is a new tool that can be used to spread propaganda and disinformation. And we need to be very careful about how we use it.”

She argues that the AI community has to work hand in hand with regulators and policy-makers to find the best approaches to tackle this extremely delicate issue: “We need to make sure that AI is used for good and not for evil, for democracy and not for dictatorship, and for peace and not for war.”

What can we do individually?

AI is only going to be more ubiquitous in the future. Whether we’re aware of its effects or not is irrelevant to the damage AI-based misinformation can do. However, there are some strategies we can adopt to combat the influence of AI on our beliefs and behavior. Google AI ethics researcher Arjun Raghavan explains the two main approaches, step by step, in his latest paper “Best Approaches Against AI-Based Misinformation.”

“First, we have to make sure that the information we consume is coming from a credible source. This can be done by verifying the source of the information and checking for other signs of credibility, such as whether the source has a good reputation, whether the information has been fact-checked by other sources, and whether the source has a history of publishing accurate information,” explains Raghavan.

“Second, we need to be aware of our own confirmation biases and be willing to update our beliefs in the face of new evidence. This means being open to the possibility that we may be wrong and being willing to change our minds when new evidence contradicts our current beliefs.”

What the future holds

Although misinformation is as old as information, the future will be harder to navigate — and more so for those not well-versed in the new technologies. Generative text models are getting cheaper and more powerful. The degree to which it’s become easier to convert falsehood into a viral news story makes these models extremely dangerous.

In the near future, we will see more and more AI-generated fake news stories, which will be difficult to distinguish from real news. This will have a number of consequences, including:

People will become more distrustful of the news, and media organizations will find it increasingly difficult to gain and maintain public trust.

Fake news will proliferate on social media, further polarizing society and making it more difficult to have constructive public discourse.

Political leaders will increasingly use AI-generated fake news to manipulate public opinion, leading to even more distrust in the political process.

Ultimately, the spread of AI-generated fake news will erode trust in society as a whole, and make it more difficult for people to believe in anything.

A revelation

Maybe you already knew, but this story was almost entirely written by OpenAI’s language model, GPT-3.

In case you don’t know what GPT-3 is, it’s a language model whose sole objective is to predict the most likely next word given a history of previous ones. It’s so good at this task that it can easily fool people into thinking its generations were written by a human. As incredible as it may appear to you, GPT-3 is one of the oldest (and now smallest) language models with this ability. More recent ones like LaMDA, Gopher, Chinchilla, or PaLM are generally better. All of these are in the hands of a few tech companies, like OpenAI, DeepMind, or Google, and probably won’t be released to the general public.
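To make the "predict the most likely next word" objective concrete, here is a toy sketch in Python. It is not how GPT-3 works internally (GPT-3 is a large neural network, not a table of word counts), and the function names `train_bigram_counts`, `predict_next`, and `generate` are hypothetical ones I made up for illustration. It only shows the shape of the task: look at what came before, pick the likeliest continuation, repeat.

```python
from collections import Counter, defaultdict


def train_bigram_counts(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, start, max_words=10):
    """Greedily extend `start` one predicted word at a time."""
    output = [start]
    while len(output) < max_words:
        nxt = predict_next(counts, output[-1])
        if nxt is None:
            break  # no known continuation for the last word
        output.append(nxt)
    return output


if __name__ == "__main__":
    corpus = "the cat sat on the mat and the cat slept"
    model = train_bigram_counts(corpus)
    print(predict_next(model, "the"))
    print(" ".join(generate(model, "on", max_words=3)))
```

A model like this only looks one word back and knows nothing beyond its tiny corpus. GPT-3 conditions on a much longer history and was trained on vastly more text, which is what makes its continuations read as fluent prose.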

OpenAI announced a few months back that they’d release GPT-3 through an open API. Now, anyone can use it to write poetry, songs, conversations, code, ads, emails, or even ponder deep philosophical questions. People can also use it to write a fake article as I just did. I, of course, disclosed my use of GPT-3, but many people aren’t doing the same. Imagine the possibilities. Anyone could use these systems to create endless content and, with minimal editing, publish it via blog posts on sites like Substack or Medium.

I decided to co-write this article about misinformation with GPT-3 as an experiment to further emphasize what I’m trying to convey — showing, not telling, is often the best way to do it. Apart from the initial paragraphs of each section, I helped GPT-3 with a couple of interleaved sentences. GPT-3 wrote the bulk — creating this coherent and believable article.

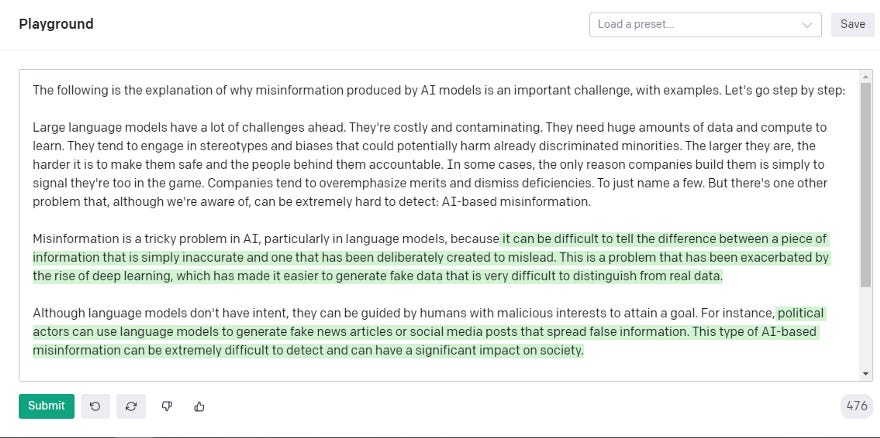

For full disclosure, here are the screenshots of OpenAI’s API. In green its GPT-3 completions:

This article — which was of course my idea, not GPT-3’s — is both about misinformation and misinformation in itself. A meta-misinformation article. The intention is to underscore just how invisible AI-based misinformation can be. Even articles you would never think are written by an AI — a Substack essay on AI misinformation, for instance — are extremely easy to fake. I spent literally an hour crafting this article and it cost only $0.32 on OpenAI’s API.

NYT journalist Tom Hinsen, USTC professor Wen Qingzhao, and Google AI researcher Arjun Raghavan don’t exist. GPT-3 didn’t mind at all that I invented the names and the titles and still created a sensible narrative, even adding verbatim quotes. Just by writing “a well-known New York Times journalist,” and “renowned expert on the social and political effects of AI misinformation,” GPT-3 made it look real.

GPT-3 also creates fake examples. There’s no evidence that the Chinese government is using AI “to control the narrative” or that “the Chinese people are not getting accurate information.” I also made up the story about the hackers and the Russia-Ukraine war, but GPT-3 continued it with absolute certainty. We can’t assess the factuality and reliability of the system just by reading what it outputs. It’s pretty clear what it writes can be true or made up and we’d have no way to tell.

Of course, by doing precise prompt engineering (carefully selecting the inputs), we can guide these models to output better generations — “sampling can prove the presence of knowledge but not the absence” type of arguments — but that misses the point. It doesn’t matter if we can force GPT-3 to write factual stories because we’d be assuming the person behind has good intentions. Not everyone does and it’s simply too easy to create a completely false but seemingly coherent story.

And there’s also the problem that most people won’t know you can get more out of the system by engineering it better. If GPT-3-like systems are embedded in everyday applications, most people will use them in a significantly sub-optimal way. If that means the outputs are quantitatively worse (e.g. the story it writes has slightly worse quality), then it’s just a matter of degree. But if the outputs are qualitatively worse (e.g. from factual info to non-factual info), then we have a real issue. That’s the reality we’re facing.

This tech, as it is right now, is the Holy Grail of fake news perpetrators and internet trolls. That makes it dangerous, especially for people who don’t even know it exists or how it works. I have no answer to the question of how we can combat this increasingly worrisome issue, but I know one thing for sure: being aware is better than being blind.
